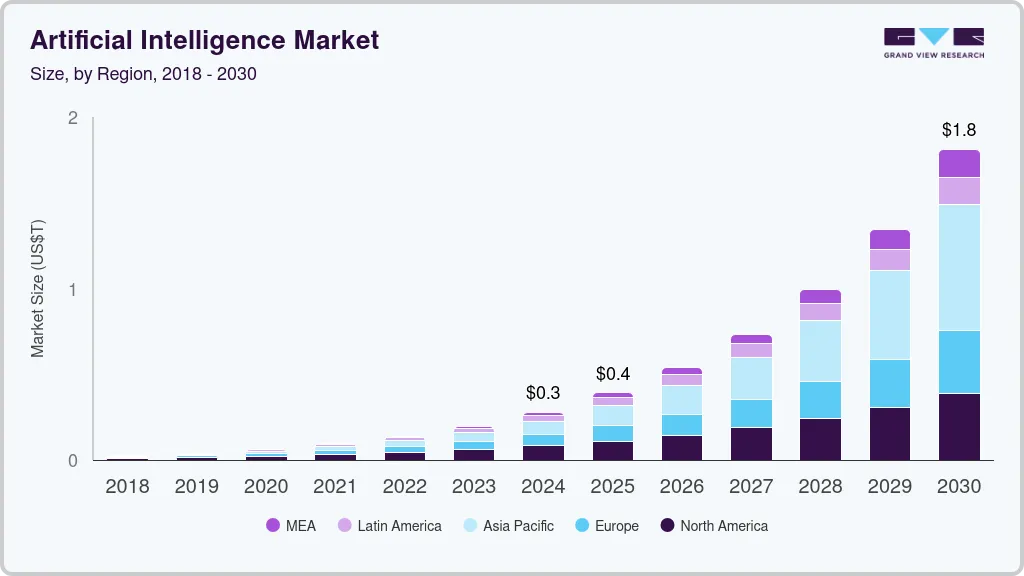

The global artificial intelligence market, valued at $391 billion in 2026, is projected to reach $1.81 trillion by 2030, and the choice between machine learning (ML) and deep learning sits at the heart of that growth.

Machine learning offers flexible, data-driven models, while deep learning enables complex pattern recognition through multi-layered neural networks.

Although the two terms are often used interchangeably, understanding how they differ is crucial for businesses that want to apply AI technologies effectively.

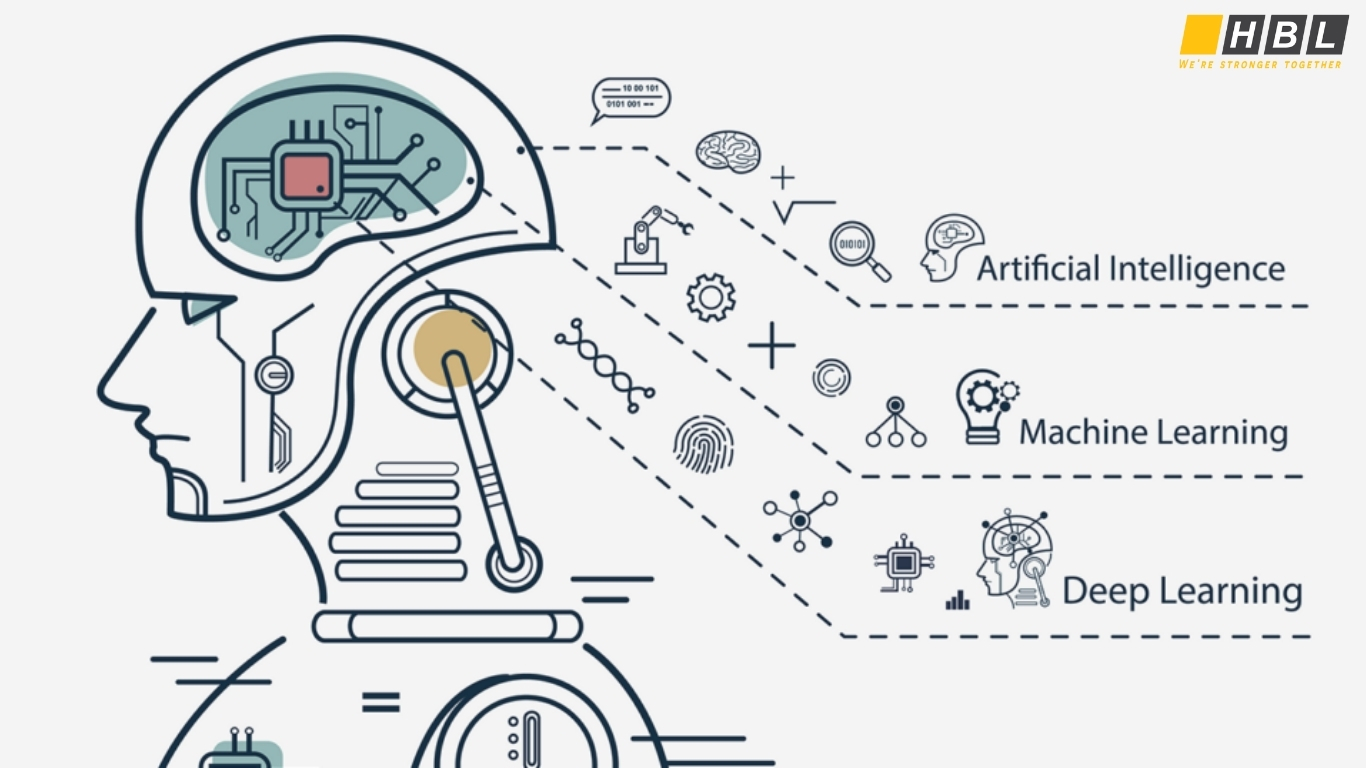

What is Artificial Intelligence?

Artificial intelligence represents the broadest concept in this technological hierarchy, encompassing any technique that enables computers to mimic human intelligence and cognitive functions. At its core, AI refers to machines performing tasks that typically require human intelligence, such as problem-solving, learning, perception, and decision-making.

The field of AI can be categorized into three main types:

- Artificial Narrow Intelligence (ANI) represents the current state of AI technology, designed to perform specific tasks like facial recognition or chess playing.

- Artificial General Intelligence (AGI) would theoretically perform on par with human intelligence across various domains.

- Artificial Super Intelligence (ASI) would surpass human capabilities entirely.

Today’s AI applications predominantly fall under ANI.

The rapid advancement in AI is driven by increased computational power, data availability, and sophisticated algorithms that enable machines to process information at unprecedented scales.


Understanding Machine Learning

Machine learning is a subset of artificial intelligence that enables systems to learn and improve from experience without being explicitly programmed for every scenario. In traditional programming, developers write specific instructions. Machine learning algorithms instead identify patterns in data and make predictions based on those learned patterns.

Core Types of Machine Learning

- Supervised Learning involves training algorithms on labeled datasets where both input and desired output are known. For example, training a spam detection system using emails already classified as spam or legitimate.

- Unsupervised Learning discovers hidden patterns in unlabeled data, commonly used for customer segmentation and market analysis.

- Reinforcement Learning enables systems to learn through trial and error, receiving rewards or penalties for their actions.
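The sketch below makes the first two paradigms concrete. It is a minimal pure-Python example that assumes made-up two-dimensional feature vectors. A nearest-centroid classifier learns from labeled emails (supervised learning), while k-means groups unlabeled customers on its own (unsupervised learning). The feature choices and data are illustrative assumptions, not a production spam filter or segmentation model.

```python
# Supervised vs unsupervised learning on toy data (pure Python, no libraries).
from statistics import mean


def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    return tuple(mean(dim) for dim in zip(*points))


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Supervised: every example carries a known label.
# Hypothetical email features: (links in body, exclamation marks)
labeled = [((8, 5), "spam"), ((6, 7), "spam"), ((1, 0), "ham"), ((0, 1), "ham")]


def fit_nearest_centroid(data):
    """Learn one centroid per label from the labeled examples."""
    labels = {label for _, label in data}
    return {c: centroid([x for x, label in data if label == c]) for c in labels}


def predict(model, x):
    """Assign x to the label whose centroid is closest."""
    return min(model, key=lambda c: sq_dist(model[c], x))


# Unsupervised: no labels; k-means discovers the groups itself.
# Hypothetical customer features: (annual spend in $k, visits per month)
customers = [(1, 2), (2, 1), (1, 1), (9, 8), (8, 9), (10, 10)]


def kmeans(points, k, iters=10):
    centers = points[:k]  # naive initialisation: the first k points
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(centers[i], p))
            clusters[nearest].append(p)
        # recompute each center; keep the old one if its cluster is empty
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


model = fit_nearest_centroid(labeled)
print(predict(model, (7, 6)))  # -> spam
centers, clusters = kmeans(customers, k=2)
print(clusters)
```

K-means is told how many groups to look for (`k=2`) but never what those groups mean. Interpreting the clusters, for example as "occasional" and "loyal" customers, is still a human task.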

The machine learning process typically requires moderate amounts of structured data, generally performing well with datasets ranging from 1,000 to 100,000 samples.

This makes ML particularly suitable for businesses with limited data resources or those working with well-defined, structured datasets.

Machine Learning Applications in Business

Modern businesses leverage machine learning for predictive analytics, recommendation systems, fraud detection, and supply chain optimization.

Netflix estimates that its ML-powered personalized recommendations save it about $1 billion a year, while 48% of businesses use ML to make effective use of big data.

Deep Learning Explained

Deep learning represents a specialized subset of machine learning that uses artificial neural networks with multiple layers to process information. Inspired by the human brain’s structure, deep learning models consist of interconnected nodes organized in layers that progressively extract increasingly complex features from raw data.

The Architecture of Deep Learning

- Neural Network Structure involves input layers, multiple hidden layers, and output layers, with each layer containing numerous artificial neurons.

- Automatic Feature Extraction eliminates the need for manual feature engineering, as the network learns relevant patterns directly from raw data.

- Hierarchical Learning enables the system to understand simple features in early layers and combine them into complex concepts in deeper layers.
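The layered structure described above can be sketched as a forward pass in plain Python. The network shape and all weight values are arbitrary placeholders chosen for illustration: 3 inputs, hidden layers of 4 and 2 neurons, and 1 output. A real model would learn its weights from data, typically with a framework such as PyTorch or TensorFlow. This sketch shows only how data flows through the layers, not how they learn.

```python
# Forward pass through input -> hidden -> hidden -> output layers.
# Weights and biases are hand-picked placeholders, not trained values.
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical network: (weights per neuron, biases, activation) for each layer.
layers = [
    ([[0.2, -0.5, 0.1], [0.4, 0.3, -0.2], [-0.3, 0.8, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.0], relu),                        # hidden layer 1: 4 neurons
    ([[0.5, -0.4, 0.3, 0.2], [-0.6, 0.2, 0.7, 0.1]],
     [0.0, 0.0], relu),                                   # hidden layer 2: 2 neurons
    ([[1.0, -1.0]], [0.0], sigmoid),                      # output layer: 1 neuron
]


def forward(x):
    activations = x
    for weights, biases, act in layers:
        activations = dense(activations, weights, biases, act)  # each layer feeds the next
    return activations


print(forward([1.0, 0.5, -1.0]))  # one value between 0 and 1
```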

Deep learning models typically require substantial computational resources and large datasets exceeding 100,000 samples to train effectively. The complexity of these models demands specialized hardware like GPUs or TPUs for efficient processing.

Popular Deep Learning Architectures

- Convolutional Neural Networks (CNNs) excel at image recognition and computer vision tasks, powering applications from medical diagnosis to autonomous vehicles.

- Recurrent Neural Networks (RNNs) handle sequential data like text and speech and long formed the backbone of language translation and voice recognition systems, a role transformers have since largely taken over.

- Generative Adversarial Networks (GANs) create new content by having two networks compete against each other, enabling applications in art generation and data synthesis.
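As a rough illustration of the first two architectures, the sketch below implements in plain Python the convolution a CNN layer applies to an image and the recurrent update an RNN applies at each step of a sequence. The image, kernel, and RNN weights are toy values assumed for demonstration. Real layers learn many such kernels and weight matrices, and GANs are not covered here.

```python
# Core operations behind CNNs and RNNs, with toy values.
import math


def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly cross-correlation, as most DL libraries implement it)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One scalar RNN step: the new hidden state mixes the current input with the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


# A vertical edge: dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1], [-1, 1]]  # responds to left-to-right brightness increases
print(conv2d(image, edge_kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]

h = 0.0
for x in [1.0, 0.5, -0.5]:  # a short input sequence, processed one step at a time
    h = rnn_step(x, h, w_x=0.8, w_h=0.5, b=0.0)
print(h)
```

The kernel responds only where brightness jumps from left to right. This is the kind of low-level edge feature early CNN layers tend to learn. The RNN's final hidden state `h` carries a summary of the whole sequence it has seen.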

ML vs Deep Learning: Key Differences

The ML vs Deep Learning comparison is critical for choosing the right technology for business applications, from predictive analytics to chatbots.

Data Requirements and Processing

Machine learning algorithms can work effectively with smaller, structured datasets, sometimes needing as few as 50 to 1,000 data points for simple tasks.

Deep learning models demand large volumes of data, typically 10,000 or more samples, with complex models needing millions of data points for optimal performance.

The feature engineering process represents another significant distinction. Traditional machine learning requires manual feature selection where human experts identify relevant data characteristics.

Deep learning performs automatic feature extraction, learning relevant representations directly from raw data without hand-crafted features.
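For contrast, here is what manual feature engineering can look like in practice. A person decides which signals might indicate spam and writes code to extract them, and a traditional ML model would then train on these numbers. The specific features and keyword list are hypothetical choices for illustration. A deep learning model would instead consume the raw text and learn its own representations.

```python
# Manual feature engineering for a spam filter: a human picks the signals.
# The feature set and keyword list are illustrative assumptions.
import re

SUSPICIOUS_WORDS = {"free", "winner", "prize", "urgent"}  # hypothetical keyword list


def extract_features(email_text: str) -> dict:
    words = re.findall(r"[a-z']+", email_text.lower())
    return {
        "num_links": len(re.findall(r"https?://", email_text)),
        "num_exclamations": email_text.count("!"),
        "suspicious_word_count": sum(w in SUSPICIOUS_WORDS for w in words),
        # share of characters that are uppercase (guarding against empty input)
        "uppercase_ratio": sum(c.isupper() for c in email_text) / max(len(email_text), 1),
    }


features = extract_features("URGENT! You are a WINNER! Claim your free prize at http://example.com")
print(features)
```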

Computational and Infrastructure Needs

The ML vs Deep Learning distinction extends to hardware. Machine learning models often run efficiently on standard CPUs, making them accessible to businesses with limited computational resources.

Deep learning typically relies on specialized GPU or TPU hardware for training, and often for large-scale inference, which significantly increases infrastructure costs.

Training Time varies considerably based on complexity. Machine learning models typically train within hours to days, enabling rapid prototyping and deployment.

Deep learning models may require days to weeks of training time due to their complexity and large data requirements.

Performance and Interpretability

Interpretability is a crucial business consideration when comparing ML and deep learning. Machine learning models, particularly simpler algorithms like decision trees, offer greater transparency in their decision-making process.

Deep learning models operate as “black boxes”, making it challenging to understand how they arrive at specific conclusions.
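A decision stump, a decision tree with a single split, shows why simpler models are easier to explain: what it learns is one human-readable rule. The sketch below fits a stump on an invented credit-score dataset, an assumption made for illustration. It tries every threshold and keeps the one with the fewest errors.

```python
# A one-split decision tree ("decision stump") whose learned rule is readable.
# The loan data below is invented for illustration.


def fit_stump(xs, ys):
    """Try every threshold on one feature; keep the one with the fewest misclassifications."""
    best = None
    for t in sorted(set(xs)):
        preds = [1 if x >= t else 0 for x in xs]
        errors = sum(p != y for p, y in zip(preds, ys))
        if best is None or errors < best[1]:
            best = (t, errors)
    return best


# Hypothetical applicants: credit score, and whether the loan was repaid (1) or not (0)
scores = [520, 580, 610, 650, 700, 720, 760]
repaid = [0, 0, 0, 1, 1, 1, 1]

threshold, errors = fit_stump(scores, repaid)
print(f"Rule: predict 'repaid' if credit score >= {threshold} ({errors} training errors)")
```

The printed rule is the entire model, so a reviewer or regulator can check it directly. In a deep network, the same decision would be spread across thousands or millions of weights.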

Performance Characteristics depend heavily on data type and complexity. Machine learning excels with structured data and well-defined relationships, offering good performance for tasks like financial forecasting and customer analytics.

Deep learning dominates unstructured data processing, achieving superior results in image recognition, natural language processing, and speech recognition.

AI vs ML vs Deep Learning vs Generative AI

These technologies form a nested hierarchy in which each level is a more specialized subset of the one before it.


The Technology Hierarchy

Artificial Intelligence encompasses the entire field of intelligent systems, including rule-based systems, expert systems, and learning algorithms. Machine Learning represents AI systems that learn from data without explicit programming for every scenario.

Deep Learning utilizes neural networks with multiple layers to process complex patterns in large datasets. Generative AI leverages deep learning to create new content, representing the cutting edge of AI applications.

Generative AI Market Dynamics

The generative AI market has emerged as a particularly dynamic segment, valued at $66.89 billion in 2026 and projected to reach $442.07 billion by 2031, representing a 36.99% CAGR. This technology powers applications like ChatGPT, DALL-E, and code generation tools, fundamentally transforming content creation and human-computer interaction.

Investment in generative AI reached $25.2 billion in 2023, highlighting the significant commercial interest in this technology. The generative AI market employs over 944,000 individuals globally, with 151,000+ new jobs added in the past year.

Understanding the Convergence

Modern AI applications often combine multiple approaches. ChatGPT, for example, utilizes deep learning architectures, natural language processing techniques, and machine learning optimization to deliver human-like conversational capabilities. This convergence demonstrates how businesses can leverage multiple AI technologies to create comprehensive solutions.

Training Data Requirements and Computational Needs

The success of AI implementations heavily depends on data quality, volume, and computational infrastructure. Understanding these requirements is crucial for project planning and resource allocation.

Data Volume Guidelines

Traditional Machine Learning typically requires at least 10 times as many data points as the model has features, a rule of thumb known as the “10 times rule”. For example, a model with 10 features might need 100 to 1,000 data points for effective training. Deep Learning Models demand significantly more data, often requiring 1,000+ data points per feature for neural networks to avoid overfitting.
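The rules of thumb above reduce to simple arithmetic. This sketch encodes the 10-samples-per-feature and 1,000-samples-per-feature multipliers quoted in this section. Treat the results as rough lower bounds for planning, not as guarantees that a model will generalize.

```python
# Rule-of-thumb minimum sample sizes, using the multipliers quoted above.
# These are heuristics, not guarantees of good model performance.

SAMPLES_PER_FEATURE = {"traditional_ml": 10, "deep_learning": 1_000}


def min_samples(num_features: int, approach: str) -> int:
    if num_features <= 0:
        raise ValueError("num_features must be positive")
    return num_features * SAMPLES_PER_FEATURE[approach]


for approach in SAMPLES_PER_FEATURE:
    print(approach, min_samples(10, approach))
# traditional_ml 100
# deep_learning 10000
```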

Specialized Applications have varying requirements:

- Facial recognition systems utilize over 450,000 facial images for training

- Image annotation projects involve more than 185,000 images with 650,000+ annotated objects

- Chatbot systems train on approximately 200,000 questions and 2+ million answers

- Translation applications leverage 300,000+ audio recordings from non-native speakers

Data Quality and Preprocessing

Data readiness varies significantly across organizations. Research indicates that 19% of companies maintain consistently preprocessed, AI-ready data, while 40% have mostly processed data, 33% occasionally process their data, and 8% rarely preprocess data for AI applications.

Data augmentation and transfer learning become critical when quality data is scarce, particularly in sensitive sectors like healthcare and finance where obtaining large datasets faces regulatory and privacy constraints.
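Data augmentation creates extra training examples by applying label-preserving transformations to existing ones. The minimal sketch below assumes small grayscale images stored as nested lists, with intensities in [0, 1]. It combines random horizontal flips with light noise. Production pipelines would typically rely on library transforms, for example in torchvision or tf.image. Whether a flip really preserves the label depends on the domain.

```python
# Simple image data augmentation: random horizontal flips plus light noise.
# Images are nested lists of intensities in [0, 1] (a simplifying assumption).
import random


def flip_horizontal(img):
    return [list(reversed(row)) for row in img]


def add_noise(img, scale, rng):
    # perturb each pixel, then clamp back to the valid [0, 1] range
    return [[min(1.0, max(0.0, p + rng.uniform(-scale, scale))) for p in row] for row in img]


def augment(img, n_copies, seed=0):
    rng = random.Random(seed)  # seeded so the augmented set is reproducible
    out = []
    for _ in range(n_copies):
        new = flip_horizontal(img) if rng.random() < 0.5 else [row[:] for row in img]
        out.append(add_noise(new, scale=0.05, rng=rng))
    return out


scan = [[0.0, 0.2, 0.9], [0.1, 0.5, 1.0]]  # a tiny stand-in for a medical image
augmented = augment(scan, n_copies=4)
print(len(augmented))  # 4
```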

Infrastructure Considerations

Computational Requirements scale with model complexity. Traditional machine learning models often run on standard business hardware, while deep learning requires specialized GPU clusters or cloud-based AI services.

The power required to train frontier AI models has grown by 2.4x per year since 2020, highlighting the increasing computational demands.
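Compounding makes that growth rate easier to grasp. The short sketch below applies the 2.4x annual factor quoted above year by year from 2020. The year range is an illustrative assumption.

```python
# Compounding the quoted ~2.4x annual growth in frontier-model training power.


def cumulative_growth(annual_factor: float, years: int) -> float:
    """Total multiplier after `years` of constant multiplicative growth."""
    return annual_factor ** years


for years in range(1, 5):
    print(2020 + years, f"{cumulative_growth(2.4, years):.1f}x the 2020 level")
```

At that rate, four years of growth multiplies the 2020 requirement by 2.4^4, or roughly 33.2.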

Real-World Applications and Use Cases

The practical applications of machine learning and deep learning span virtually every industry, with each technology excelling in specific domains.

Machine Learning Success Stories

- Financial Services leverage ML for fraud detection, credit scoring, and algorithmic trading, with a 65% adoption rate in the sector.

- Retail Organizations use ML for inventory optimization, demand forecasting, and customer analytics, achieving 56% operational improvements.

- Healthcare Providers employ ML for predictive maintenance of medical equipment and patient risk assessment, with 38% of providers using computers for diagnostic support.

- Amazon’s recommendation engine demonstrates ML’s commercial impact, generating billions in additional revenue through personalized product suggestions based on customer behavior patterns.

- Netflix’s content recommendation system saves the company over $1 billion annually by reducing customer churn through personalized viewing suggestions.

Deep Learning Breakthrough Applications

- Computer Vision applications include autonomous vehicles, medical imaging analysis, and quality control in manufacturing. Tesla’s self-driving technology processes millions of images to navigate complex traffic scenarios in real-time.

- Natural Language Processing powers virtual assistants, language translation, and content generation. Google Translate processes over 100 billion words daily using deep learning models trained on multilingual datasets.

- Medical Diagnostics benefit from deep learning’s pattern recognition capabilities.

- Medical imaging AI can detect subtle abnormalities that human radiologists may miss, improving early detection of cancers and other conditions.

- Drug Discovery processes accelerate through deep learning analysis of molecular structures and biological pathways.

Industry-Specific Applications

- Manufacturing employs both ML and deep learning for predictive maintenance, quality control, and supply chain optimization.

- In the automotive industry, 48% of companies use machine learning applications for operational improvements.

- Marketing and Sales teams achieve 34% efficiency gains through AI-powered customer insights and campaign optimization.

- The healthcare AI market alone is estimated to reach $187.95 billion by 2030, demonstrating the significant economic impact of these technologies in critical sectors.

When to Choose Machine Learning vs Deep Learning

Selecting the appropriate technology requires careful consideration of data characteristics, business objectives, resource constraints, and performance requirements.

Machine Learning is Optimal When:

- Limited Data Availability characterizes your project, with datasets containing fewer than 10,000 samples.

- Structured Data predominates, such as customer demographics, transaction records, or sensor readings organized in databases.

- Interpretability Requirements are critical for regulatory compliance or stakeholder understanding.

- Quick Implementation is necessary, with projects requiring rapid prototyping and deployment.

- Resource Constraints limit computational budget or infrastructure capabilities.

- Practical ML Applications include customer churn prediction, financial fraud detection, inventory forecasting, and A/B testing optimization.

These applications typically deliver strong ROI with moderate investment in data collection and processing infrastructure.

Deep Learning Excels When:

- Unstructured Data forms the primary input, including images, audio, video, or natural language text.

- Large Datasets are available, with hundreds of thousands or millions of samples.

- Complex Pattern Recognition is required, such as identifying subtle features in medical images or understanding context in conversational AI.

- High Accuracy Requirements justify the additional computational cost and complexity.

- Long-term Investment perspective allows for extensive model development and training.

- Strategic Deep Learning Applications include autonomous systems, advanced analytics platforms, personalized content generation, and intelligent automation systems.
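The two checklists above can be condensed into a rough first-pass heuristic. The `Project` fields and the `suggest_approach` function below are hypothetical names. The 10,000-sample cut-off comes from the machine learning checklist, and the transfer-learning branch covers small, unstructured datasets. A real decision would weigh these factors with far more nuance.

```python
# Hypothetical first-pass heuristic encoding the selection criteria above.
from dataclasses import dataclass


@dataclass
class Project:
    num_samples: int
    unstructured_data: bool       # images, audio, video, or free text
    needs_interpretability: bool  # e.g. regulatory or stakeholder requirements
    has_gpu_budget: bool          # can the project afford GPU/TPU training?


def suggest_approach(p: Project) -> str:
    # Interpretability needs or tight compute budgets favour traditional ML.
    if p.needs_interpretability or not p.has_gpu_budget:
        return "machine learning"
    # Plenty of unstructured data is where deep learning excels.
    if p.unstructured_data and p.num_samples >= 10_000:
        return "deep learning"
    # Unstructured but scarce data: start from a pre-trained model.
    if p.unstructured_data:
        return "deep learning via transfer learning"
    return "machine learning"


print(suggest_approach(Project(5_000, False, True, False)))   # machine learning
print(suggest_approach(Project(500_000, True, False, True)))  # deep learning
```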

Hybrid Approaches

Many successful AI implementations combine both approaches. Feature Extraction using deep learning can feed traditional ML algorithms for final predictions, balancing accuracy with interpretability. Transfer Learning allows organizations to leverage pre-trained deep learning models while fine-tuning them with smaller, domain-specific datasets.
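The feature-extraction pattern can be sketched in a few lines. A frozen extractor turns raw input into features, and only a small logistic regression head is trained on the domain data. Here `frozen_extractor` is a hypothetical hand-written stand-in for a real pretrained network. In practice you would load one from a library such as torchvision or Hugging Face Transformers. The signals and labels are invented.

```python
# Hybrid pattern: frozen feature extractor + small trainable classifier head.
import math


def frozen_extractor(raw):
    """Hypothetical stand-in for a pretrained network; it is never updated.
    Returns two summary features: the mean and the range of the raw signal."""
    return [sum(raw) / len(raw), max(raw) - min(raw)]


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_logistic_head(feats, labels, lr=0.5, epochs=500):
    """Train only the logistic-regression head with stochastic gradient descent."""
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            p = 1.0 / (1.0 + math.exp(-logit(w, b, x)))
            grad = p - y  # derivative of the log-loss with respect to the logit
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


# Invented sensor readings: label 1 = "unstable" (large swings), 0 = "stable".
raw_signals = [[0.5, 0.5, 0.5], [0.4, 0.5, 0.6], [0.0, 1.0, 0.5], [0.1, 0.9, 0.5]]
labels = [0, 0, 1, 1]

features = [frozen_extractor(r) for r in raw_signals]  # the extractor stays frozen
w, b = train_logistic_head(features, labels)


def predict(raw):
    return 1 if logit(w, b, frozen_extractor(raw)) > 0 else 0


print(predict([0.45, 0.5, 0.55]), predict([0.05, 0.95, 0.5]))
```

Only `w` and `b` change during training. That is what keeps this pattern cheap on small datasets, and the head's two weights remain inspectable.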

The Role of Natural Language Processing

Natural Language Processing (NLP) is a specialized domain where the ML vs Deep Learning comparison comes together, blending machine learning and deep learning to enable seamless human-computer communication.

NLP powers applications such as chatbots and translation tools, and low-code and no-code platforms can speed up their deployment.

By combining statistical models with neural networks, NLP is transforming how businesses interact with users, from customer service to content creation.

NLP Technology Fundamentals

Traditional NLP relies on statistical machine learning, such as Naive Bayes for sentiment analysis or logistic regression for text classification, and requires manual feature engineering. Modern NLP uses deep learning transformer models, such as BERT or GPT, to achieve human-like language understanding with minimal preprocessing. Combined with AI-driven no-code development, these advances make NLP app development more cost-effective, reducing deployment time by 40% (Forrester, 2026). HBLAB’s scalable low code solutions empower businesses to build NLP apps efficiently.
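To show what the traditional statistical approach looks like, here is a minimal multinomial Naive Bayes sentiment classifier with add-one (Laplace) smoothing, written from scratch in Python. The four training sentences are invented toy data. A practical system would train on thousands of labeled examples, and libraries such as scikit-learn provide tested implementations.

```python
# Minimal multinomial Naive Bayes sentiment classifier with Laplace smoothing.
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train_nb(docs):
    """docs: list of (text, label). Returns log priors, per-label word counts, vocabulary."""
    label_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in docs:
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    priors = {label: math.log(n / len(docs)) for label, n in label_counts.items()}
    return priors, word_counts, vocab


def classify(text, priors, word_counts, vocab):
    scores = {}
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        scores[label] = prior + sum(
            # add-one smoothing keeps a word unseen for this label from zeroing the score
            math.log((word_counts[label][w] + 1) / (total + len(vocab)))
            for w in tokenize(text)
            if w in vocab  # words never seen in training are ignored
        )
    return max(scores, key=scores.get)


training = [
    ("great product, works perfectly", "positive"),
    ("love it, excellent quality", "positive"),
    ("terrible, broke after a day", "negative"),
    ("awful quality, would not buy again", "negative"),
]
model = train_nb(training)
print(classify("excellent and works great", *model))  # positive
```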

ChatGPT and NLP Advancements

ChatGPT is a prominent example of deep learning’s transformer architecture, with billions of parameters trained on vast text corpora. It generates contextually relevant responses, revolutionizing applications like automated customer support and content creation. Using low-code and no-code platforms, businesses can integrate ChatGPT-like NLP systems and scale them quickly, which shows how deep learning drives transformative NLP solutions.
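Scaled dot-product attention sits at the heart of the transformer architecture. It lets every token weigh every other token when building its representation. The sketch below computes softmax(Q K^T / sqrt(d)) V in plain Python for three made-up two-dimensional token vectors. Real transformers add learned query, key, and value projections, multiple attention heads, and many stacked layers, so this illustrates the core operation only.

```python
# Scaled dot-product attention, the core operation inside transformer models.
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V, computed one query row at a time."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this token attends to each token
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Three toy token embeddings; self-attention uses the same vectors as Q, K and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))
```

Each output row is a weighted average of the value vectors, so every token's new representation blends in context from the others.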

NLP vs Machine Learning Distinctions

- Scope and Focus: Machine learning addresses pattern recognition across many data types, such as images or numbers, while NLP focuses specifically on language tasks like translation.

- Data Requirements: NLP works with unstructured text and speech, which require preprocessing steps such as tokenization and semantic analysis, unlike the structured data common in general machine learning.

- Computational Complexity: NLP is often more demanding because language is ambiguous, and deep learning models need powerful GPUs for transformer training.

- Model Interpretability: Deep learning NLP models are less interpretable because they capture complex contextual relationships, unlike simpler machine learning models such as decision trees. HBLAB’s scalable low code solutions simplify NLP development, enabling cost-effective app development.
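The tokenization step mentioned under Data Requirements can be sketched as follows. Text is split into tokens, mapped to integer IDs from a vocabulary, and padded or truncated to a fixed length so a neural network can process it in batches. The regex tokenizer and the reserved IDs are simplifying assumptions. Modern models such as BERT and GPT use learned subword tokenizers (WordPiece and byte-pair encoding, respectively).

```python
# Turning raw text into fixed-length integer sequences for a neural model.
import re

PAD, UNK = 0, 1  # reserved IDs for padding and out-of-vocabulary words


def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(corpus):
    vocab = {"<pad>": PAD, "<unk>": UNK}
    for text in corpus:
        for token in tokenize(text):
            vocab.setdefault(token, len(vocab))  # next free ID for each new token
    return vocab


def encode(text, vocab, max_len):
    ids = [vocab.get(t, UNK) for t in tokenize(text)][:max_len]  # truncate long inputs
    return ids + [PAD] * (max_len - len(ids))                    # pad short inputs


corpus = ["The delivery was late", "The support team was helpful"]
vocab = build_vocab(corpus)
print(encode("The delivery team was amazing", vocab, max_len=6))  # [2, 3, 7, 4, 1, 0]
```

The unseen word "amazing" maps to the `<unk>` ID 1, and a trailing 0 pads the sequence to six positions.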

Business Applications of NLP

- Customer Service Automation through intelligent chatbots and virtual assistants reduces operational costs while improving response times.

- Content Analysis enables businesses to process customer feedback, social media mentions, and market research at scale.

- Document Processing automates contract analysis, compliance monitoring, and information extraction from unstructured business documents.

The NLP market continues expanding as businesses recognize the value of automated language processing for improving operational efficiency and customer experience.

Future Trends and Market Outlook

The artificial intelligence landscape continues evolving rapidly, with market projections indicating sustained growth across all AI technology segments.

Market Growth Projections

The projections cited at the start of this article, from $391 billion in 2026 to $1.81 trillion by 2030, place AI among the fastest-expanding technology sectors globally.

Investment patterns show continued confidence in AI technologies, with over 13,980 funding rounds closed and average investment values exceeding $30 million per round. Major investors including NVIDIA, Google, Amazon, and Andreessen Horowitz have collectively invested over $21.8 billion across numerous AI companies.

Technological Advancement Trends

- Multimodal AI Systems that process text, images, audio, and video simultaneously represent the next frontier in AI development.

- AI Democratization through user-friendly interfaces and no-code platforms enables broader adoption across businesses of all sizes.

- Edge Computing Integration brings AI processing closer to data sources, reducing latency and improving privacy.

- Sustainable AI Development addresses the environmental impact of large-scale AI training, with research focusing on energy-efficient algorithms and carbon-neutral computing. With the power requirements of frontier AI models growing 2.4x annually, sustainable development approaches have become a necessity.

Industry Transformation Patterns

AI Adoption Rates vary by sector, with financial services leading at 65%, retail at 56%, and healthcare at 38%. Enterprise Integration shows 90% of leading organizations engaged in AI initiatives, indicating mainstream adoption across business functions.

Workforce Evolution includes over 944,000 individuals employed in AI globally, with 151,000+ new jobs added in the past year.

Regulatory Development continues shaping AI implementation, with governments worldwide establishing ethical AI frameworks and compliance requirements for AI deployment in critical sectors.

Global Competition intensifies as countries invest in AI sovereignty and national AI strategies.

The convergence of increasing computational power, growing data availability, and algorithmic improvements will continue driving AI innovation across industries, with generative AI and multimodal systems leading the next wave of technological advancement.

HBLAB – Your Trusted Partner in AI Development

In an era where artificial intelligence, machine learning, and deep learning are transforming business operations globally, HBLAB stands as your comprehensive partner in navigating this technological landscape and implementing cutting-edge AI solutions tailored to your specific needs.

With proven expertise across the full spectrum of AI technologies – from traditional machine learning algorithms to advanced deep learning architectures and generative AI systems – HBLAB enables organizations to harness the power of intelligent automation and data-driven decision making.

Our AI and Machine Learning Capabilities:

- Custom ML Model Development for predictive analytics, fraud detection, and business optimization

- Deep Learning Solutions for computer vision, natural language processing, and complex pattern recognition

- Generative AI Implementation for content creation, automated workflows, and intelligent customer interactions

- Data Engineering and Preparation ensuring your datasets meet the quality standards required for successful AI deployment

- Model Training and Optimization leveraging our computational infrastructure and expertise in both traditional ML and deep learning approaches

Why Choose HBLAB for Your AI Journey:

Our team of 630+ skilled professionals includes AI specialists, data scientists, and machine learning engineers with deep expertise across multiple domains and technologies. 30% of our employees are senior-level, bringing 5+ years of specialized AI experience, so we deliver sophisticated solutions that drive measurable business outcomes.

CMMI Level 3 certification ensures our AI development processes meet the highest quality standards, while our cost-effective approach delivers premium AI capabilities at costs 30% lower than in traditional markets.

Our flexible engagement models – including offshore, onsite, and dedicated AI teams – adapt to your project requirements and organizational preferences.

From AI strategy consulting and proof-of-concept development to full-scale implementation and ongoing optimization, HBLAB provides end-to-end support for your artificial intelligence initiatives. Whether you’re implementing your first machine learning model or deploying enterprise-scale deep learning systems, our global team delivers the expertise and resources needed for success.

Ready to Transform Your Business with AI?

Let HBLAB’s proven AI expertise accelerate your journey from concept to deployment. Our comprehensive approach ensures your organization leverages the right combination of machine learning, deep learning, and AI technologies to achieve your business objectives.

CONTACT HBLAB FOR A FREE CONSULTATION

Frequently Asked Questions

1. What’s the difference between deep learning and machine learning?

Deep learning is a specialized subset of machine learning that uses multi-layered neural networks to extract features from data automatically. Traditional machine learning relies on manual feature engineering, such as selecting variables for regression models, while deep learning learns those patterns itself. Deep learning demands larger datasets and more computational power, making it ideal for complex tasks like image recognition. Low-code and no-code platforms can speed up deployment of either approach.

2. Is ChatGPT AI or ML?

ChatGPT is an AI application that draws on both machine learning and deep learning. It is built on the transformer architecture, a type of deep neural network, and trained with machine learning methods. This makes ChatGPT a robust AI system that combines the strengths of ML and deep learning for conversational tasks.

3. Is DL harder than ML?

Generally, yes. Deep learning is more complex because of its multi-layered neural networks, larger data needs, and reliance on specialized hardware like GPUs. Traditional machine learning is simpler and offers interpretable algorithms like decision trees. Modern low-code and no-code platforms are making deep learning more accessible to practitioners.

4. Is NLP under ML or DL?

Both. Early NLP relied on machine learning techniques like Naive Bayes, while modern systems like ChatGPT use deep learning transformer architectures for advanced language tasks. Low-code and no-code platforms make NLP app development more cost-effective, whichever approach sits underneath.

5. Is ChatGPT deep learning?

Yes. ChatGPT is a deep learning system built on transformer neural networks for language processing. Its deep learning architecture enables contextual understanding and human-like responses, making ChatGPT a leading example of deep learning in conversational AI.

6. Which is more complex: ML or DL?

Deep learning is more complex because of its multi-layered architecture, automatic feature learning, and need for extensive data and computing power. Traditional machine learning, using simpler models like linear regression, is more interpretable. Low-code and no-code platforms can reduce this complexity, enabling cost-effective app development.

7. What is the difference between AI vs ML vs DL vs generative AI?

The four terms form a nested hierarchy. AI encompasses all intelligent systems, machine learning focuses on learning from data, and deep learning uses multi-layered neural networks for complex tasks. Generative AI, a subset of deep learning, creates new content such as text or images, and businesses often access it through low-code and no-code platforms.

8. How many ML algorithms are there?

There are dozens of machine learning algorithms, spanning supervised (e.g., linear regression, SVM), unsupervised (e.g., k-means), and reinforcement learning methods, and the exact count depends on how they are categorized. Deep learning adds further architectures, such as convolutional neural networks. Low-code and no-code platforms simplify deploying both kinds of algorithms.

9. What is NLP in deep learning?

NLP in deep learning uses transformer architectures to process and generate human language for tasks like translation or chatbots. These systems outperform traditional machine learning approaches to NLP, and AI-driven no-code development enables rapid deployment. HBLAB’s scalable low code solutions help businesses build such NLP apps.

10. Is ChatGPT an NLP?

Yes. ChatGPT is an advanced NLP system that uses deep learning’s transformer architecture for conversational AI. It excels at understanding and generating human language, and low-code and no-code platforms can accelerate the deployment of similar systems. HBLAB draws on both machine learning and deep learning to build similar NLP solutions for businesses.
