Nearly 80% of companies have deployed generative AI—yet over 80% report zero material impact on earnings. Agentic AI promises to break this paradox.

The rapid evolution of artificial intelligence has created a confusing landscape for enterprise leaders. Two years ago, ChatGPT dominated headlines. Today, the industry conversation has shifted to agentic AI, a fundamentally different approach to solving business problems. Understanding the distinction between generative AI and agentic AI is no longer optional—it determines whether your organization joins the 5% capturing real ROI or remains stuck in the 95% running expensive pilots.

Quick Answer: Generative AI vs Agentic AI at a Glance

Generative AI generates new content (text, images, code) when prompted but lacks independence. It’s reactive, requiring explicit human instructions for each step. Think of it as a highly intelligent assistant that waits for direction.

Agentic AI autonomously plans and executes complex, multi-step tasks across systems without step-by-step guidance. It interprets goals, retains context, breaks work into subtasks, and adapts in real time. Think of it as a digital coworker that operates independently toward defined objectives.

The critical difference: GenAI responds; agentic AI acts. This distinction unlocks dramatically different business outcomes—and demands entirely different measurement frameworks, governance structures, and implementation strategies.

What is Generative AI? Capabilities and Limitations

Generative AI refers to artificial intelligence systems trained on massive datasets to create new content rather than merely analyze existing data. Since ChatGPT’s release in late 2022, GenAI has become ubiquitous: language models like GPT-4, Claude, and Gemini generate text; DALL·E and Midjourney create images; GitHub Copilot drafts code.

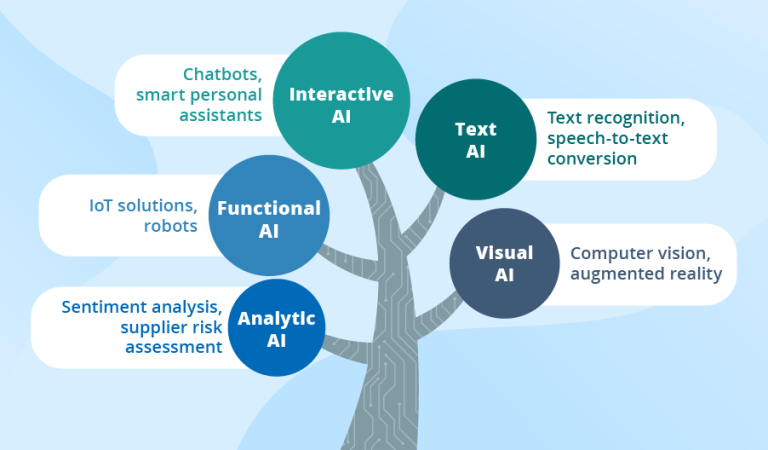

The core capability of generative AI is synthesizing information from training data into human-like output, which makes it excel at drafting, summarizing, translating, and ideating. GenAI systems are accessible and easy to deploy, often via API or web interface, and are highly flexible across domains and task types.

However, several inherent limitations matter in enterprise contexts.

GenAI models are fundamentally reactive. They do not initiate action; they wait for a prompt. A chatbot doesn’t proactively reach out to customers; it responds when asked.

GenAI systems can also confidently produce fabricated information, incorrect citations, or plausible but false data, a critical problem in regulated domains like finance, healthcare, and legal services.

Finally, each conversation or query is largely independent. GenAI generally lacks memory of prior interactions across sessions, making it unsuitable for long-running processes that require contextual continuity.

Early-generation language models struggled with complex branching logic, sequential dependencies, and real-time adaptation. While newer models improve here, scaling to enterprise-grade workflows remains problematic. GenAI generates outputs based on statistical patterns but doesn’t observe outcomes, learn from results, or adjust plans, all of which are essential for autonomous operation in dynamic environments.

Today’s organizations use GenAI for employee copilots (Microsoft 365 Copilot reaches 70% of Fortune 500 firms), internal chatbots, content generation, code assistance, and summarization. But according to McKinsey’s 2025 research, fewer than 10% of vertical use cases make it past the pilot stage, precisely because GenAI alone cannot automate complete workflows.

What is Agentic AI? A New Paradigm for Autonomous Work

Agentic AI represents a fundamental shift: systems that act autonomously toward goals without explicit human instruction for each step. While agentic AI typically builds on generative models (like GPT-4) as its brain, it wraps that brain in additional layers: planning engines, memory systems, tool integrations, and feedback loops.

An agentic AI system can understand a goal, decompose it into subtasks, call external tools and APIs, observe results, adapt its approach, and iterate—all without waiting for human approval at each stage.

Core capabilities that define agentic AI include:

- Autonomous decision-making: systems make choices and take action toward goals.
- Multi-step planning and execution: they break complex objectives into sequences and execute them.
- Tool integration: agentic systems call databases, search engines, CRM systems, workflow tools, and business logic.
- Persistent memory: they maintain memory across sessions and learn from past interactions.
- Adaptive feedback loops: they observe outcomes, refine plans, and improve performance over time.
- Proactivity: they monitor conditions and initiate actions without waiting for prompts.

Real-world examples show agentic AI in action. A loan-processing agent automatically retrieves applicant data, analyzes credit risk, generates compliance documentation, and routes approvals, completing in hours what previously took weeks. A supply-chain agent continuously monitors demand signals, port disruptions, and supplier performance, and dynamically reallocates inventory and reroutes shipments to minimize delays and costs. A customer-service agent detects common issues (delayed shipments, failed payments), initiates resolutions (issuing refunds, reordering items), and communicates directly with customers, handling 80%+ of cases autonomously.

Key Differences: Generative AI vs Agentic AI Side-by-Side

| Aspect | Generative AI | Agentic AI |
|---|---|---|
| Core Function | Generates content (text, images, code) | Autonomously executes multi-step tasks |
| Autonomy Level | Passive; requires explicit prompts | Proactive; acts toward goals independently |
| Interaction Model | Request → Response | Goal → Plan → Execute → Adapt → Outcome |
| Planning | None; generates single outputs | Decomposes goals into subtasks, sequences them, adapts dynamically |
| Memory | Limited to current session/conversation | Short-term (current task) and long-term (cross-session learning) |
| Tool Use | Rarely integrated with external systems | Deeply integrated; calls APIs, databases, workflows, business logic |
| Decision-Making | Generates suggested answers; humans decide | Makes decisions autonomously; escalates when uncertain |
| Error Handling | Prone to hallucinations; limited self-correction | Feedback loops enable iterative improvement; can validate outputs |
| Task Complexity | Best for discrete, single tasks | Designed for complex, multi-step, cross-system workflows |
| Real-Time Adaptation | Static; based on training data | Continuous; learns from feedback and changing conditions |
| Typical Outcome | Content (email, report, code snippet) | Business transformation (process redesign, efficiency, new revenue) |

How Agentic AI Works: The Architecture Behind Autonomy

Agentic AI’s power emerges from four interconnected components working together.

The LLM core is the brain. At the foundation sits a large language model (GPT-4, Claude, Llama, Mistral, or similar) that serves as the reasoning engine, enabling the agent to understand context, generate plans, and decide on actions. Unlike in a standalone GenAI system, however, the LLM is not the end product; it is one component in a larger orchestration.

Planning is what separates agentic from generative AI. Agentic systems use planning algorithms to break goals into executable subtasks, and two primary approaches exist. In planning without feedback (Chain-of-Thought, Tree-of-Thought), the LLM generates an entire plan upfront in a single “thinking” step, then executes it. This is fast but rigid: if early assumptions are wrong, the whole plan derails. In planning with feedback (the ReAct framework), the agent generates an action, observes the outcome, refines its understanding, and adjusts the next step. This iterative loop is slower but far more robust in dynamic environments. McKinsey research shows that agents using observation-feedback cycles deliver 20–60% productivity improvements, vs. 5–10% for GenAI-assisted workflows.
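The contrast between the two planning styles can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the tools and the `LoanFile` state are hypothetical stand-ins, and `decide_next_action` is a rule-based stub standing in for the LLM call a real ReAct agent would make at each step.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LoanFile:
    """Hypothetical working state for one loan application."""
    facts: dict = field(default_factory=dict)


def run_tool(name: str, loan: LoanFile) -> str:
    """Execute one hypothetical tool and return an observation string."""
    if name == "fetch_credit_report":
        loan.facts["credit_score"] = 710
        return "ok"
    if name == "fetch_employment_data":
        loan.facts["employment_verified"] = True
        return "ok"
    if name == "check_income":
        # Fails unless employment data was fetched first: the upfront plan
        # below never anticipated this dependency.
        if not loan.facts.get("employment_verified"):
            return "error: employment data missing"
        loan.facts["income_ok"] = True
        return "ok"
    if name == "draft_decision":
        return "ok" if loan.facts.get("income_ok") else "error: income not checked"
    return f"error: unknown tool {name}"


def plan_then_execute(loan: LoanFile) -> list[str]:
    """Planning without feedback: the full plan is fixed before execution."""
    plan = ["fetch_credit_report", "check_income", "draft_decision"]
    trace = []
    for step in plan:
        obs = run_tool(step, loan)
        trace.append(f"{step} -> {obs}")
        if obs.startswith("error"):
            break  # a wrong early assumption derails the rest of the plan
    return trace


def decide_next_action(goal_done: bool, last_obs: str, loan: LoanFile) -> Optional[str]:
    """Rule-based stub standing in for the LLM reasoning step."""
    if goal_done:
        return None
    if "employment data missing" in last_obs:
        return "fetch_employment_data"
    if "credit_score" not in loan.facts:
        return "fetch_credit_report"
    if not loan.facts.get("income_ok"):
        return "check_income"
    return "draft_decision"


def react_loop(loan: LoanFile, max_steps: int = 8) -> list[str]:
    """Planning with feedback: act, observe the result, then pick the next step."""
    trace, last_obs, done = [], "", False
    for _ in range(max_steps):
        action = decide_next_action(done, last_obs, loan)
        if action is None:
            break
        last_obs = run_tool(action, loan)
        trace.append(f"{action} -> {last_obs}")
        done = action == "draft_decision" and last_obs == "ok"
    return trace
```

Run on a fresh `LoanFile`, the fixed plan stops at the failed income check, while the ReAct loop reads the error, fetches the missing employment data, and finishes. The `max_steps` cap is a common safeguard against an agent looping indefinitely.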

Memory enables persistence and learning. Agentic systems maintain short-term memory (current task context, recent decisions) and long-term memory (historical patterns, learned strategies, user preferences). This enables contextual awareness across sessions, pattern recognition and learning, personalization and adaptation, and compliance-relevant audit trails. A purely stateless GenAI system cannot do this.
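A minimal sketch of the two memory tiers, assuming an in-process store. `AgentMemory` and its methods are hypothetical names: a bounded `deque` plays the role of short-term task context, a dict the role of long-term memory, and an append-only list the audit trail. A production agent might instead back long-term memory with a database or vector store.

```python
from __future__ import annotations

import json
from collections import deque
from datetime import datetime, timezone


class AgentMemory:
    """Hypothetical two-tier memory with an append-only audit trail."""

    def __init__(self, short_term_size: int = 5, long_term: dict | None = None):
        self.short_term: deque[str] = deque(maxlen=short_term_size)  # current task context
        self.long_term: dict = dict(long_term or {})  # survives across sessions
        self.audit_log: list[dict] = []  # append-only, never cleared here

    def record(self, event: str) -> None:
        """Add an event to the working context and to the audit trail."""
        self.short_term.append(event)
        self.audit_log.append(
            {"ts": datetime.now(timezone.utc).isoformat(), "event": event}
        )

    def remember(self, key: str, value) -> None:
        """Store a learned preference or pattern for future sessions."""
        self.long_term[key] = value

    def new_session(self) -> None:
        """Drop per-task context while keeping long-term knowledge."""
        self.short_term.clear()

    def export(self) -> str:
        """Serialize long-term memory so a later session can reload it."""
        return json.dumps(self.long_term)

    @classmethod
    def load(cls, blob: str) -> AgentMemory:
        return cls(long_term=json.loads(blob))
```

Because `new_session` clears only the short-term buffer, learned preferences carry over from one task to the next, which a stateless GenAI call cannot offer.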


Tools and APIs turn plans into action. An agent accesses external systems—search engines, CRM databases, financial platforms, approval workflows, communication channels. When the agent decides to retrieve customer data, verify inventory, or schedule a meeting, it calls the appropriate tool and integrates the result back into its reasoning loop.
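One common way to wire tools into the reasoning loop is a registry that maps tool names to functions, plus a dispatcher that executes whatever call the model proposes and returns the result as an observation. The sketch assumes the model emits calls as JSON of the form `{"name": ..., "arguments": {...}}`; real LLM providers each define their own tool-calling format, and `get_customer` and `check_inventory` are hypothetical stand-ins for CRM and inventory APIs.

```python
from __future__ import annotations

import json
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_customer(customer_id: str) -> dict:
    # Hypothetical stand-in for a CRM lookup.
    return {"id": customer_id, "tier": "gold"}


@tool
def check_inventory(sku: str) -> int:
    # Hypothetical stand-in for an inventory API.
    return {"SKU-1": 12}.get(sku, 0)


def dispatch(tool_call_json: str) -> str:
    """Execute a model-proposed tool call and return a JSON observation."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    try:
        result = fn(**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})
```

Returning errors as observations rather than raising them lets the agent see that a call failed and choose a different step on its next iteration.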

These four components working together create a feedback loop: Reasoning informs planning; planning orchestrates tool use; tool outcomes populate memory; memory enriches future reasoning. This virtuous cycle enables genuine autonomy.

Advantages of Agentic AI: Beyond Productivity

While generative AI delivers efficiency (faster drafting, quicker summarization), agentic AI unlocks transformation—shifting how organizations compete and create value.

- End-to-End Process Automation doesn’t just speed up tasks; it redesigns workflows end-to-end. McKinsey’s banking case study shows a legacy system modernization budgeted at $600M was accelerated by 50% using AI agents in hybrid human-AI teams. In another example, a retail bank cut credit-memo turnaround time by 30% by having agents handle data extraction, analysis, and draft generation while relationship managers focused on strategic decisions. Result: 20–60% productivity gains, vs. 5–10% for GenAI alone.

- Real-Time Decision-Making and Adaptability means agents continuously ingest data and adapt on the fly. A supply-chain agent detecting a port delay immediately reroutes shipments, reallocates inventory, and updates suppliers—all autonomously. A fraud-detection agent processes 100M+ transactions daily and identifies 95% of fraudulent activity while reducing false positives by 75%. Traditional systems require manual review; agentic systems adapt in milliseconds.

- Scaled, Personalized Customer Experience enables personalization at scale. In e-commerce, agents analyze user behavior in real time, surface hyper-targeted recommendations, and handle support queries 24/7. H&M’s virtual shopping assistant resolved 70% of customer queries autonomously, boosted conversion rates by 25%, and cut response times threefold.

- Cost Reduction Through Continuous Operation is a major advantage. Unlike human teams, digital agents operate 24/7 without overtime or fatigue. IBM reports that 67% of enterprise leaders cite “cost reduction through automation” as agentic AI’s primary benefit. A Dutch insurer automated 90% of automotive claims processing. A major bank’s Erica virtual assistant completed 1 billion+ transactions and reduced call-center volume by 17%. Annual impact: organizations report 30–40% reductions in operational costs.

- New Revenue Streams and Business Models is where agentic AI truly differentiates. McKinsey notes that agents don’t just improve existing operations—they enable entirely new services. Examples include banks deploying 24/7 AI financial advisors as a premium service, manufacturers shifting from product sales to outcome-based service contracts (monitored by AI agents), and platforms monetizing bespoke domain-specific agents (legal reasoning, tax interpretation, procurement expertise) as SaaS. Market potential: Organizations using AI-first approaches credit over half their revenue growth to AI.

The Hidden Architecture: Understanding Agent Workflow Patterns That Work

While high-level capabilities matter, success depends on choosing the right workflow architecture for your use case. This is where many organizations stumble—and where agentic AI truly differentiates from simple automation.

Enterprise agentic workflows typically operate on one of several proven patterns. Understanding these patterns helps explain why certain agentic implementations succeed while others fail to deliver promised ROI.

The ReAct Pattern (Reasoning + Acting) forms the foundation for most effective agents. Instead of planning everything upfront, the agent observes an action’s outcome, reasons about what happened, and adjusts its next step. This observe-reason-adjust cycle repeats until the goal is reached. The advantage: flexibility. When market conditions shift, customer preferences change, or new data emerges, the agent adapts without requiring code changes. A loan-processing agent, for example, might discover during evaluation that new employment data became available; it automatically retrieves and incorporates that data rather than halting the workflow.

Constraint-Driven Planning ensures agents stay within governance boundaries. Instead of trusting agents to self-govern, this pattern embeds explicit constraints and compliance checklists that agents must satisfy. A regulated financial agent, for instance, can’t approve transactions above a threshold without human review; it can’t process loans from sanctioned jurisdictions; it must generate an audit trail for every decision. This pattern matters especially in healthcare, finance, and government where regulators demand explainability and accountability.
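Constraint-driven planning can be sketched as a deterministic gate between what the agent proposes and what actually executes. The approval limit, the placeholder jurisdiction codes, and `ProposedAction` are hypothetical values for illustration, not real policy.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical policy values, for illustration only.
APPROVAL_LIMIT = 250_000
SANCTIONED = {"XX", "YY"}  # placeholder jurisdiction codes


@dataclass
class ProposedAction:
    kind: str  # e.g. "approve_loan"
    amount: float
    jurisdiction: str


def check_constraints(action: ProposedAction, audit: list[dict]) -> str:
    """Return 'allow', 'escalate', or 'block', and log the verdict.

    The agent may propose anything; this gate decides whether the proposal
    may execute, so policy does not depend on the model obeying it.
    """
    if action.jurisdiction in SANCTIONED:
        verdict, reason = "block", "sanctioned jurisdiction"
    elif action.kind == "approve_loan" and action.amount > APPROVAL_LIMIT:
        verdict, reason = "escalate", f"amount exceeds {APPROVAL_LIMIT}"
    else:
        verdict, reason = "allow", "within policy"
    audit.append({"action": action.kind, "amount": action.amount,
                  "jurisdiction": action.jurisdiction,
                  "verdict": verdict, "reason": reason})
    return verdict
```

Keeping the gate outside the model means the constraint holds even when the LLM produces a non-compliant proposal, and every verdict lands in the audit trail.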

State Machine Orchestration brings determinism to complex workflows. Rather than letting agents improvise, this pattern defines explicit states (e.g., data-gathering, risk-assessment, approval-pending, executed), allowed transitions between states, retry logic for failures, and escalation rules. This makes workflows transparent, observable, and testable. When an agent fails, you know exactly which state it was in and why. This pattern underpins the most stable production deployments.
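A minimal state-machine sketch using the states named above and Python’s standard `enum` module. The transition table, retry count, and `Workflow` class are illustrative assumptions; a production system would typically persist state and history rather than hold them in memory.

```python
from __future__ import annotations

from enum import Enum, auto
from typing import Callable


class State(Enum):
    DATA_GATHERING = auto()
    RISK_ASSESSMENT = auto()
    APPROVAL_PENDING = auto()
    EXECUTED = auto()
    ESCALATED = auto()


# Allowed transitions; anything not listed is rejected.
TRANSITIONS = {
    State.DATA_GATHERING: {State.RISK_ASSESSMENT, State.ESCALATED},
    State.RISK_ASSESSMENT: {State.APPROVAL_PENDING, State.ESCALATED},
    State.APPROVAL_PENDING: {State.EXECUTED, State.ESCALATED},
    State.EXECUTED: set(),
    State.ESCALATED: set(),
}


class Workflow:
    def __init__(self, max_retries: int = 2):
        self.state = State.DATA_GATHERING
        self.max_retries = max_retries
        self.history: list[tuple[State, State, str]] = []

    def transition(self, target: State, note: str = "") -> None:
        """Move to `target` only if the transition table allows it."""
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {target.name}")
        self.history.append((self.state, target, note))
        self.state = target

    def run_step(self, step: Callable[[], bool], next_state: State) -> None:
        """Run one step with retries; escalate to a human if it keeps failing."""
        attempts = self.max_retries + 1
        for attempt in range(1, attempts + 1):
            if step():
                self.transition(next_state, f"succeeded on attempt {attempt}")
                return
        self.transition(State.ESCALATED, f"failed after {attempts} attempts")
```

`history` records every transition with a note, which is what makes it possible to say exactly which state a failed run was in and why.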

Multi-Agent Coordination becomes critical as workflows grow complex. Instead of one agent handling everything, a coordinator assigns subtasks to specialist agents working in parallel. One agent extracts credit history; another assesses debt-to-income ratio; a third checks employment stability. Results flow back to the coordinator, which synthesizes them and makes the final decision. This pattern mirrors how humans form teams and scales to handle far greater complexity than single-agent systems.
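The coordinator pattern can be sketched with the standard library’s `concurrent.futures.ThreadPoolExecutor`, which runs the specialists in parallel. The three specialist functions are hypothetical rule-based stand-ins for separate LLM-backed agents, and the thresholds (credit score 680, debt-to-income 0.43, two years of employment) are illustrative assumptions, not lending guidance.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialists; in practice each would wrap its own prompt, tools, and model.
def credit_agent(app: dict) -> dict:
    return {"credit_ok": app["credit_score"] >= 680}


def dti_agent(app: dict) -> dict:
    dti = app["monthly_debt"] / app["monthly_income"]
    return {"dti": round(dti, 2), "dti_ok": dti <= 0.43}


def employment_agent(app: dict) -> dict:
    return {"employment_ok": app["years_employed"] >= 2}


SPECIALISTS = [credit_agent, dti_agent, employment_agent]


def coordinator(app: dict) -> dict:
    """Fan subtasks out to specialists in parallel, then synthesize a decision."""
    with ThreadPoolExecutor(max_workers=len(SPECIALISTS)) as pool:
        results = list(pool.map(lambda agent: agent(app), SPECIALISTS))
    findings: dict = {}
    for partial in results:
        findings.update(partial)
    checks = [ok for key, ok in findings.items() if key.endswith("_ok")]
    # Anything short of unanimous approval goes to a human underwriter.
    findings["decision"] = "approve" if all(checks) else "refer_to_underwriter"
    return findings
```

Threads suit this shape because real specialists would spend most of their time waiting on model and API calls; the synthesis step stays simple and auditable.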

Feedback Loops and Human-in-the-Loop Systems ensure alignment with human values and business goals. This pattern embeds two types of feedback: fully automated (performance metrics, error rates, cost tracking) and human-guided (decision review, outcome validation, strategy adjustment). A customer-service agent, for example, automatically learns which solutions satisfy customers (positive feedback), but when satisfaction drops, a human reviews recent decisions to understand why. This hybrid approach maintains both speed and safety.
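The hybrid feedback loop in the customer-service example might look like the sketch below: an automated rolling average of satisfaction ratings, with a human-review trigger when it drops. The 1-5 rating scale, window size, threshold, and `SatisfactionMonitor` class are assumptions for illustration.

```python
from __future__ import annotations

from collections import deque


class SatisfactionMonitor:
    """Automated metric tracking with a human-guided review trigger."""

    def __init__(self, window: int = 50, threshold: float = 3.5):
        self.ratings: deque[float] = deque(maxlen=window)
        self.recent_decisions: deque[str] = deque(maxlen=window)
        self.threshold = threshold
        self.review_queue: list[list[str]] = []

    def average(self) -> float:
        return sum(self.ratings) / len(self.ratings) if self.ratings else 0.0

    def record(self, decision_id: str, rating: float) -> None:
        """Log one rated decision; queue the window for review if quality drops."""
        self.ratings.append(rating)
        self.recent_decisions.append(decision_id)
        window_full = len(self.ratings) == self.ratings.maxlen
        if window_full and self.average() < self.threshold:
            # Hand the recent decisions to a human reviewer, then start fresh.
            self.review_queue.append(list(self.recent_decisions))
            self.ratings.clear()
            self.recent_decisions.clear()
```

Waiting for a full window before alerting avoids paging a reviewer over a single bad rating, at the cost of reacting a little later.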

Organizations deploying agentic AI without understanding these patterns often treat agents as “black boxes” and are surprised when they operate unpredictably or fail to deliver expected outcomes. Conversely, enterprises that deliberately choose and implement the right pattern for their use case see dramatically higher success rates and faster ROI realization.


The Accountability Question: Who Decides When an Agent Is “Right”?

This question separates theoretical AI discussions from real enterprise deployment. And it’s where agentic AI hits its most profound challenge.

Consider a loan agent that autonomously approves a $500K mortgage for a customer. The agent assessed credit history, debt-to-income ratio, employment stability, and property value. All inputs suggested approval. Six months later, the customer defaults. Who is responsible? The agent developer who wrote the decision logic? The bank that deployed it? The data scientist who trained the model? The risk officer who approved the agent’s launch? The customer who took on unsustainable debt? The answer is unclear, and that ambiguity creates risk.

This accountability gap exists because agentic AI operates in a zone where causality becomes murky. With human decision-makers, responsibility flows clearly: the loan officer made the decision; the loan officer is accountable. With an agent, decision-making is distributed. Training data contributed patterns. The prompt engineer shaped behavior. The infrastructure provider’s systems affected latency and stability. The human-in-the-loop reviewer (if present) added another layer. When outcomes are good, credit multiplies across all contributors. When outcomes are bad, blame becomes impossible to assign.

Organizations solving this problem typically implement three overlapping mechanisms.

- Explicit Governance Frameworks define autonomy levels by use case. A claims-triage agent (low stakes—it only recommends which priority queue a claim enters) gets high autonomy. A loan-approval agent (high stakes—it can bind the company to $500K obligations) gets low autonomy and requires human review. A fraud-detection agent (medium stakes—it can flag accounts but not freeze them) gets medium autonomy. Encoding these autonomy levels into your governance model makes decision boundaries explicit.

- Decision Logging and Audit Trails create accountability through transparency. Every agent decision is logged: the inputs considered, the reasoning, the decision, the confidence score, the outcome. These logs serve multiple purposes. If an error occurs, you can trace exactly how it happened. If a customer complains, you can explain the decision rationale. If regulators audit you, you have documentation. If an agent repeatedly makes poor decisions, the logs reveal which component (data source, model behavior, prompt engineering) is failing.

- Clear Liability Frameworks establish who is responsible when agents fail. Does responsibility lie with the developer who built the model, the organization that deployed it, or the system operator who monitored it? Leading organizations codify this in contracts and governance policies. Liability assignments might vary: data scientists bear responsibility for model accuracy; operations teams bear responsibility for system reliability; business unit leaders bear responsibility for use-case appropriateness. Written clarity prevents disputes downstream.
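The first two mechanisms can be combined in a small sketch: a policy table that encodes autonomy levels by use case, and a JSON Lines decision log written for every proposed action. The use cases mirror the examples above; the action names, record fields, and the `propose` helper are hypothetical, and the in-memory buffer stands in for durable storage.

```python
from __future__ import annotations

import io
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Optional, TextIO

# Autonomy policy per use case. Action names are hypothetical;
# any action not listed requires human review.
GOVERNANCE: dict[str, set[str]] = {
    "claims_triage": {"set_priority"},    # high autonomy
    "fraud_detection": {"flag_account"},  # medium: may flag, not freeze
    "loan_approval": set(),               # low: recommend only
}


def requires_human(use_case: str, action: str) -> bool:
    """Unknown use cases default to the safest setting: human review."""
    return action not in GOVERNANCE.get(use_case, set())


@dataclass
class DecisionRecord:
    use_case: str
    action: str
    inputs: dict[str, Any]
    reasoning: str
    confidence: float
    needs_review: bool = False
    outcome: Optional[str] = None  # filled in later, once the result is known
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def log_decision(record: DecisionRecord, sink: TextIO) -> None:
    """Append one decision as a JSON line: an append-only, searchable trail."""
    sink.write(json.dumps(asdict(record)) + "\n")


def propose(use_case: str, action: str, inputs: dict[str, Any],
            reasoning: str, confidence: float, sink: TextIO) -> bool:
    """Log a proposed action; return True only if it may run unattended."""
    needs_review = requires_human(use_case, action)
    log_decision(DecisionRecord(use_case, action, inputs, reasoning,
                                confidence, needs_review), sink)
    return not needs_review


# Usage with an in-memory sink.
buf = io.StringIO()
propose("fraud_detection", "flag_account", {"txn_count_1h": 42},
        "velocity far above account baseline", 0.93, buf)
propose("loan_approval", "approve_loan", {"dti": 0.31},
        "all checks within policy", 0.88, buf)
```

Because every proposal is logged, including those that were escalated, the trail can later show which inputs and reasoning preceded a bad outcome.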

Without these mechanisms, organizations risk deploying agentic AI that creates regulatory exposure, customer disputes, and internal political chaos when failures occur. The most successful enterprises treat accountability design as foundational—before agents go live—not as an afterthought.

The Ethical Frontier: Bias, Transparency, and Trust in Autonomous Decisions

Agentic AI amplifies both the promise and the peril of automation. While agents can process information faster and more consistently than humans, they inherit biases from training data, can perpetuate discrimination at scale, and operate with limited transparency into their reasoning.

– Bias Propagation at Scale is the most tangible ethical risk. Loan-approval agents trained on historical data will replicate historical lending discrimination—denying credit to certain neighborhoods or demographics at higher rates, not because the agent “chose” to discriminate, but because training data reflected decades of discriminatory practices. A recruiting agent trained on historical hiring data will systematically reject candidates from underrepresented groups. A risk-assessment agent in criminal justice will penalize defendants from communities over-policed in training data. These aren’t rare edge cases; they’re systematic properties of agents trained on historical data.

Solutions exist but require upfront investment. Organizations must audit training data for bias, actively rebalance data to represent all groups fairly, constrain agent decisions to ensure demographic parity, and continuously monitor agent outcomes for disparate impact. This is expensive and ongoing; it’s not a one-time fix.
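Continuous monitoring for disparate impact often starts with comparing approval rates across groups. The sketch below computes the ratio of the lowest to the highest group approval rate and flags it below 0.8, a threshold borrowed from the “four-fifths” rule of thumb in US employment guidance. Whether that threshold fits a given domain is a policy question, and the group labels are placeholders.

```python
from __future__ import annotations

from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """decisions: (group, approved) pairs taken from the agent's decision log."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def flag_disparate_impact(decisions: list[tuple[str, bool]],
                          threshold: float = 0.8) -> bool:
    """True when the ratio falls below the threshold and needs human review."""
    return disparate_impact_ratio(approval_rates(decisions)) < threshold
```

Running this on every batch of logged decisions, rather than once before launch, is what turns a one-time audit into the ongoing monitoring described above.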

– Transparency and Explainability challenges arise because agents often cannot explain how they reached their decisions. An agent might deny a loan with opaque reasoning such as “creditworthiness assessment complete.” Which factors mattered most? Customers, regulators, and courts increasingly demand answers. But modern language models work through statistical pattern matching, not explicit logical reasoning paths, so explaining a decision means reverse-engineering opaque mathematical operations.

Some organizations respond by embedding “explainability” directly into agents. Instead of using pure neural approaches, they layer explicit constraints (policy rules, threshold checks, decision trees) into agent workflows. When an agent denies a loan, it can articulate: “Debt-to-income ratio (52%) exceeds policy threshold (50%). Please reapply when debt decreases or income increases.” This is less flexible than pure neural approaches but dramatically more transparent.
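The debt-to-income example can be sketched as an explicit rule table whose messages are generated from the same values that drove the decision, so the explanation cannot drift from the logic. `Rule`, `RULES`, and `explain_decision` are hypothetical names; only the 50% limit and the message wording come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    limit: float
    message: str


# Hypothetical policy table; the 50% DTI limit mirrors the example above.
RULES = [
    Rule("debt_to_income", 0.50,
         "Debt-to-income ratio ({value:.0%}) exceeds policy threshold ({limit:.0%}). "
         "Please reapply when debt decreases or income increases."),
]


def explain_decision(metrics: dict[str, float]) -> tuple[str, list[str]]:
    """Apply explicit rules and return (decision, human-readable reasons)."""
    reasons = [
        rule.message.format(value=metrics[rule.name], limit=rule.limit)
        for rule in RULES
        if metrics[rule.name] > rule.limit
    ]
    return ("deny" if reasons else "approve"), reasons
```

In a hybrid agent, the LLM might gather and summarize the inputs while a table like this makes the final call, which keeps each denial reason traceable to a written policy.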

– Trust Calibration is subtle but critical. Humans must trust agents enough to delegate authority to them, but not so much that they abdicate oversight. Under-trust (constant human review) defeats the purpose of automation. Over-trust (blind faith in agent decisions) creates catastrophic risk. The sweet spot requires agents to be reliable enough that humans can trust them, but transparent enough that humans can verify that trust is warranted.

Leading organizations achieve this by starting agents in low-stakes domains (document classification, data triage) where errors are recoverable, building trust through demonstrated reliability, then gradually increasing autonomy as agents prove themselves. They also maintain human-in-the-loop checkpoints for consequential decisions (loan approvals, personnel decisions, major financial commitments). This phased approach builds organizational confidence in agentic AI while maintaining safety guardrails.


Building and Scaling Agentic Agents: A Practical Framework

Moving from understanding agentic AI to actually deploying it requires a structured approach. Organizations that follow this framework see 60-70% faster deployment and lower total costs.

Phase 1: Opportunity Identification and Validation (Weeks 1-4) starts by mapping high-impact workflows. Not every process benefits from agentic AI. Look for workflows that are complex (multi-step, cross-functional), have high volume (impact scales), involve significant wait time (agents can parallelize), and experience high human error rates (agents can reduce mistakes). In banking, loan processing qualifies; in healthcare, appointment scheduling qualifies; in retail, inventory management qualifies.

For each candidate workflow, calculate impact. If each loan takes about one hour of staff time, an agent reduces processing time by 40%, and you process 1,000 loans monthly, that’s roughly 400 hours saved per month, or about 2.5 FTE equivalents at ~160 working hours per FTE per month. Multiply by fully loaded labor cost. Realistic impact: $300K-$1M annual benefit per use case.
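A small calculator makes the assumptions behind such an estimate explicit. The average of 1.0 staff hour per loan, 160 working hours per FTE-month, and a fully loaded cost of $120K per FTE-year are illustrative assumptions, not figures from the text; with them, a 40% reduction on 1,000 loans works out to 400 hours per month, or 2.5 FTEs (a 2-hour average per loan would double both figures).

```python
def estimate_impact(loans_per_month: int, hours_per_loan: float,
                    time_reduction: float,
                    hours_per_fte_month: float = 160.0,
                    loaded_cost_per_fte_year: float = 120_000.0) -> dict:
    """Back-of-envelope labor savings for one candidate workflow.

    Every default here is an illustrative assumption; substitute your own.
    """
    hours_saved = loans_per_month * hours_per_loan * time_reduction
    fte = hours_saved / hours_per_fte_month
    annual_benefit = fte * loaded_cost_per_fte_year
    return {
        "hours_saved_per_month": hours_saved,
        "fte_equivalent": fte,
        "annual_benefit": annual_benefit,
    }
```

The structure of the calculation matters more than the defaults: swapping in your own volumes, handling times, and labor costs shows quickly whether a workflow clears the bar.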

Validate technical feasibility. Can you access the data the agent needs? Are systems API-accessible? Is the workflow deterministic enough for an agent to handle 80%+ of cases? Technical obstacles identified now will shape the budget later.

Phase 2: Pilot Design and MVP Development (Weeks 5-12) involves building a minimum viable agent. Don’t over-engineer. Your goal is learning, not perfection. Design the agent to handle 60-70% of cases autonomously and escalate the remainder to humans. Implement one of the proven workflow patterns (ReAct, constraint-driven, state machine) rather than inventing new approaches. Use existing LLM APIs rather than fine-tuning your own models.

Build the agent within an existing sandbox—separate from production. Test against 3-6 months of historical data. Measure accuracy, latency, and cost. If accuracy is below 80% or cost is prohibitively high, revisit the approach before scaling.
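A backtest harness for the sandbox stage might replay historical cases through the agent and compute the three metrics named above. The sketch assumes the agent is a callable returning a decision and its cost in dollars; the 80% accuracy gate comes from the text, while the $0.50-per-case cost ceiling and the nearest-rank p95 latency are illustrative choices.

```python
from __future__ import annotations

import math
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    inputs: dict
    expected: str  # the decision humans actually made at the time


@dataclass
class BacktestReport:
    accuracy: float
    p95_latency_s: float
    cost_per_case: float
    passed: bool


def backtest(agent: Callable[[dict], tuple[str, float]], cases: list[Case],
             min_accuracy: float = 0.80,
             max_cost_per_case: float = 0.50) -> BacktestReport:
    """Replay historical cases through a sandboxed agent; apply a go/no-go gate.

    `agent` is assumed to return (decision, cost_in_dollars) for one case.
    """
    correct, latencies, total_cost = 0, [], 0.0
    for case in cases:
        start = time.perf_counter()
        decision, cost = agent(case.inputs)
        latencies.append(time.perf_counter() - start)
        total_cost += cost
        correct += int(decision == case.expected)
    latencies.sort()
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]  # nearest-rank p95
    accuracy = correct / len(cases)
    cost_per_case = total_cost / len(cases)
    passed = accuracy >= min_accuracy and cost_per_case <= max_cost_per_case
    return BacktestReport(accuracy, p95, cost_per_case, passed)
```

Comparing against what humans actually decided treats past decisions as ground truth, so it is worth reviewing disagreements by hand: some will be agent errors, and some may be historical mistakes.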

Phase 3: Governance and Risk Setup (Weeks 9-14, running in parallel with Phase 2) establishes guardrails before launch. Define the autonomy level: What decisions can the agent make unilaterally? What requires human review? What’s out of scope? Document decision boundaries clearly. Implement logging and monitoring. Build dashboards to track agent performance, error rates, and cost. Establish escalation procedures.

Conduct fairness and bias audits. Run the agent against diverse datasets. Do approval rates vary significantly by demographic? Do response times vary by input type? Are edge cases handled consistently? Surface and mitigate issues before production.

Phase 4: Production Launch and Optimization (Weeks 15-20) involves going live in a limited scope. Start with 10% of volume. Monitor obsessively. Watch for performance degradation, unexpected cost increases, customer complaints, or regulatory red flags. After 2 weeks of stable operation, increase to 30% of volume. After another 2 weeks, increase to 100%.
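The staged rollout can be implemented with deterministic hash bucketing, so each case ID always routes the same way and every case in the 10% cohort stays in the 30% cohort. `route_to_agent` and `next_stage` are hypothetical helpers; SHA-256 from Python’s `hashlib` is used only for its even spread, not for security.

```python
from __future__ import annotations

import hashlib

# Rollout stages from the text: 10% -> 30% -> 100%, advancing after ~2 stable weeks.
STAGES = [10, 30, 100]


def bucket(case_id: str) -> int:
    """Map a case ID to a stable bucket in [0, 100) so routing is repeatable."""
    digest = hashlib.sha256(case_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route_to_agent(case_id: str, rollout_percent: int) -> bool:
    """True if the case goes to the agent, False if it stays with the current process."""
    return bucket(case_id) < rollout_percent


def next_stage(current: int, stable_days: int, min_stable_days: int = 14) -> int:
    """Advance one stage only after the current stage has been stable long enough."""
    if stable_days < min_stable_days or current == STAGES[-1]:
        return current
    return STAGES[STAGES.index(current) + 1]
```

Stable bucketing also makes comparisons fair: the cases handled by the agent and those still handled by the existing process are effectively random samples of the same traffic.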

Simultaneously, begin collecting feedback. What cases did the agent handle well? What edge cases caused escalation? What prompted customer complaints? Use this feedback to refine agent behavior. Adjust prompts, constraints, or decision thresholds. Retrain if necessary.

Phase 5: Continuous Improvement and Scaling (Ongoing) focuses on long-term optimization. Performance naturally degrades over time as data distributions shift. Retrain quarterly. Monitor for drift. As the agent matures, gradually increase autonomy. Move decisions previously requiring human review to automatic processing.
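One widely used drift check is the Population Stability Index (PSI), which compares the distribution of an input or score in recent traffic against a baseline. The sketch below is a plain-Python version with equal-width bins taken from the baseline’s range; a common rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift, though teams set their own thresholds.

```python
from __future__ import annotations

import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a recent sample.

    Bin edges come from the baseline's range; a small floor avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Tracking PSI on key inputs (income, credit score, claim amount) alongside accuracy gives an early signal that retraining may be due, before outcomes visibly degrade.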

Scale to adjacent use cases. Once you’ve perfected agent design in loan processing, apply the same framework to claim processing or credit decisioning. Reuse infrastructure, governance playbooks, and team expertise.

Emerging Trends and the 2025 Inflection Point

The agentic AI market is accelerating dramatically. The global market is projected to grow from $7.06–$7.55 billion in 2025 to $93–$199 billion by 2032–2034, representing a CAGR of 43–44%. For context, that’s more than 10x growth in less than a decade.
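The growth figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Which start value pairs with which end value and year is an assumption here, because the text gives ranges rather than matched projections.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Assumed pairings: low end $7.06B (2025) -> $93B (2032);
# high end $7.55B (2025) -> $199B (2034). Values in billions of USD.
low = cagr(7.06, 93, 2032 - 2025)
high = cagr(7.55, 199, 2034 - 2025)
```

Under these pairings both projections land in the mid-40s percent range, and both imply more than a tenfold increase in market size.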

Key market drivers include enterprise adoption moving beyond pilots. 79% of organizations report some agentic AI adoption as of 2025; 96% plan to expand usage in 2025; 58% of companies have already integrated AI agents; 35% are actively exploring.

Vertical-specific agents are capturing value. Rather than horizontal copilots, the focus is shifting to domain-specific agents:

- BFSI (19.45% market share): automated lending, fraud detection, advisor bots
- Healthcare: clinical workflow automation, image analysis, patient triage
- Supply chain: demand forecasting, route optimization, inventory orchestration
- Manufacturing: predictive maintenance, production scheduling, quality control

Multi-agent orchestration represents the next frontier. Single-agent systems are simple but limited. Leading organizations are building multi-agent ecosystems—specialized agents (data retriever, analyzer, validator, communicator) working in concert. This mirrors human team structures and enables far more complex workflows.

Governance and compliance are moving from afterthought to front-and-center. With the EU AI Act and equivalent regulations, enterprises are building agentic AI governance frameworks upfront, not retrofitting compliance later. This is now a competitive differentiator.

The business model shift is from software to outcomes. Agentic AI is enabling entirely new revenue models. Instead of selling software licenses, companies are selling outcomes (reduced claims processing time, faster loan decisions, optimized logistics). This fundamental shift will reshape SaaS and B2B software over the next 3–5 years.

Conclusion: The Strategic Imperative

Generative AI opened the door to AI democratization. Agentic AI is now walking through it—transforming not just how work gets done, but how organizations compete.

The strategic reality is stark: organizations must move beyond GenAI pilots to agentic AI transformation to capture long-term competitive advantage. This isn’t about choosing one over the other; it’s about deploying them sequentially and strategically. GenAI delivers quick wins; agentic AI delivers transformation.

The gap between organizations realizing AI value and those stuck in the 95% failing to move the needle is widening. That gap is the difference between treating AI as a tool and treating it as a catalyst for reinvention.

The time to start planning your agentic AI strategy is now. Whether you’re in BFSI, healthcare, retail, manufacturing, or government, there’s a process awaiting transformation. The organizations that act first will redefine their industries.


If you’re ready to move from agentic AI exploration to strategic implementation—designing governance frameworks, selecting use cases, and scaling to production—the foundation matters. Expertise, proven methodologies, and cross-functional teams experienced in orchestrating this transformation are critical.

About HBLAB: Your Trusted AI Transformation Partner

HBLAB has spent over a decade at the forefront of AI-driven business transformation, helping enterprises navigate from early experimentation to scalable, production-grade deployments. With 630+ professionals, including 200+ senior architects and AI specialists, and CMMI Level 3 certification, HBLAB brings rigor, experience, and accountability to AI transformation projects that matter.

Since 2017, HBLAB has pioneered AI-powered solutions—from machine learning models to production systems. Today, the team is deep in the agentic AI space, helping organizations redesign core workflows, build governance frameworks, and scale autonomous agents across complex business processes. Flexible engagement models (offshore, onsite, dedicated teams) and cost-efficient delivery (typically 30% lower cost than onshore alternatives) have made enterprise-grade AI accessible to businesses of all sizes across APAC, Europe, and North America.

What sets HBLAB apart is a proven track record of shipping 50+ production AI systems and scaling agentic solutions from proof-of-concept to enterprise operations. The team offers end-to-end expertise: from strategy and architecture to implementation, governance, and ongoing optimization. With cross-industry experience including BFSI, healthcare, retail, manufacturing, and logistics, HBLAB brings deep domain knowledge. The governance-first approach means HBLAB doesn’t just build agents; it builds them with compliance, transparency, and control built in.

Whether you’re assessing agentic AI readiness, designing your first autonomous agents, or scaling a multi-agent ecosystem, HBLAB can guide you through the strategy, architecture, and execution needed to turn agentic AI from a promising technology into measurable business value.


FAQ: Your Agentic AI Questions Answered

Q: Is agentic AI just an advanced version of generative AI?

No. While agentic AI often uses generative models (like GPT) as a core component, agentic AI adds planning, memory, tool integration, and feedback loops—fundamentally different architecture enabling autonomy. Think of it as the difference between a smart calculator (GenAI) and a project manager (agentic AI).

Q: What’s the difference between ChatGPT and agentic AI?

ChatGPT is a generative AI system—it responds to your prompts with human-like text. Agentic AI systems go beyond response; they plan, act, remember, and adapt autonomously. ChatGPT waits for your input; an agentic system initiates work toward goals without waiting.

Q: Can agentic AI replace humans?

No. Leading organizations view agentic AI as augmentation, not replacement. Agents handle routine, data-heavy tasks; humans focus on strategic, judgment-based decisions. The most successful implementations redesign roles so humans and agents complement each other.

Q: Is agentic AI risky? What about hallucinations and errors?

Like GenAI, agentic systems can hallucinate—but agentic architectures mitigate this via feedback loops, validation checkpoints, and human-in-the-loop escalation for high-stakes decisions. This is why governance is critical. Properly designed agentic systems are more reliable than unguided GenAI deployments.

Q: How much does agentic AI cost? How long does implementation take?

Implementation typically takes 4-9 months; costs range from $1.5M–$5M depending on complexity and organizational readiness. Break-even is typically 12-18 months, with 3-year ROI of 150–300%+. Hidden costs often represent 70% of total investment, so accurate TCO planning is essential.

Q: Who’s leading in agentic AI?

Microsoft (integrating agents into Dynamics 365 and Copilot Studio), Salesforce (Agentforce), SAP (Joule), Google Cloud (Agentspace), and AWS (Bedrock Agents) are building agent platforms. Startups like Mistral AI, Anthropic, and others compete on foundation models. However, the implementation winners are companies building domain-specific agents for finance, healthcare, and supply chain, not just vendors selling agent platforms.

Q: Is agentic AI the next big thing?

Absolutely. While GenAI captured headlines, agentic AI is where real process transformation and ROI materialize. Analysts have dubbed 2025 “the year of the agent,” with 99% of enterprise developers exploring or building agents and 44%+ of IT executives planning investments. Agentic AI represents the next wave of automation and competitive differentiation.


Read More:

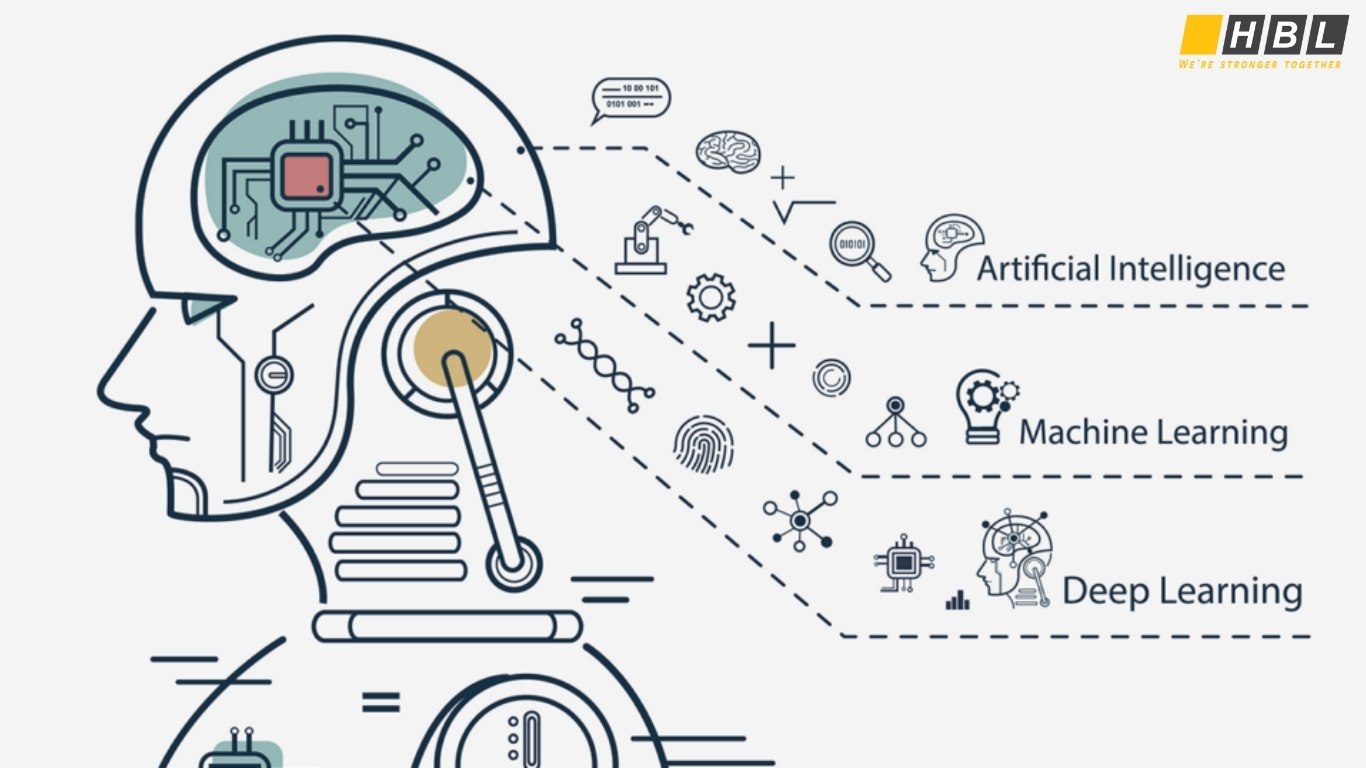

– AI and Machine Learning Trends 2025: The Solid Basis for Enterprise Transformation

– AI in Industrial Automation: The Definitive Guide Powering Smart Factories in 2025

– Machine Learning – ML vs Deep Learning: Understanding Excellent AI’s Core Technologies in 2025