85% of enterprises struggle with fragmented systems and data silos—but proper system development integration can unlock unprecedented agility, cut operational costs by 30%, and accelerate digital transformation across your entire organization.

In today’s digital landscape, organizations rely on dozens—sometimes hundreds—of applications, databases, and platforms to run their operations. A retailer might use inventory management, point-of-sale, CRM, and e-commerce systems. A financial services firm deploys core banking platforms, payment processors, analytics engines, and compliance tools. Yet these systems often operate in isolation, creating costly inefficiencies, data inconsistencies, and missed opportunities.

This is where system development integration becomes essential. System development integration bridges the gap between disconnected applications, enabling them to communicate, share data, and execute coordinated workflows seamlessly. Unlike quick fixes or point-to-point connections, true system development integration establishes a sustainable, scalable foundation that grows with your organization.

Quick Overview

System development integration connects separate software applications and data sources into a single, cohesive operating environment through middleware, APIs, and orchestration platforms. When executed properly, system development integration reduces manual work by 40%, improves data accuracy to 98%+, and enables organizations to deploy new capabilities 25-40% faster than teams managing fragmented systems.

What Is System Development Integration?

System development integration is the process of combining separate software applications, data sources, and IT infrastructure components into a single, coordinated system that works seamlessly together.

Rather than treating each application as an isolated island, effective system development integration creates bridges—through middleware, APIs, messaging systems, and orchestration layers—that enable these systems to communicate, share data, and execute synchronized workflows.

In broadcasting and media production, for example, system development integration solves a fundamental challenge: how do you connect all of your equipment into one integrated system without manual data entry, conflicts between devices, or rigid vendor lock-in?

This same principle applies across retail, banking, healthcare, manufacturing, and every modern enterprise.

System development integration matters because data flows seamlessly between systems instead of requiring manual exports and imports. Workflows also accelerate, since processes that once required human intervention across multiple systems now run automatically.

Business agility increases because organizations can adopt new tools, retire legacy systems, or pivot processes without wholesale rewrites. Costs decline as automation reduces manual labor, eliminates redundant systems, and prevents the expensive “one-to-one integration” trap that plagues organizations with fragmented technology stacks.

The global system development integration market reached USD 590 billion in 2025 and is projected to grow to USD 1.7 trillion by 2033, reflecting the explosive demand as enterprises accelerate digital transformation and adopt cloud-native architectures.

This growth underscores how critical system development integration has become to competitive survival.

How It Works: Architecture Foundations

Effective system development integration doesn’t happen through magic—it relies on well-defined architectural approaches and middleware technologies that act as connectors between systems. Understanding these patterns is essential to designing system development integration strategies that actually scale.

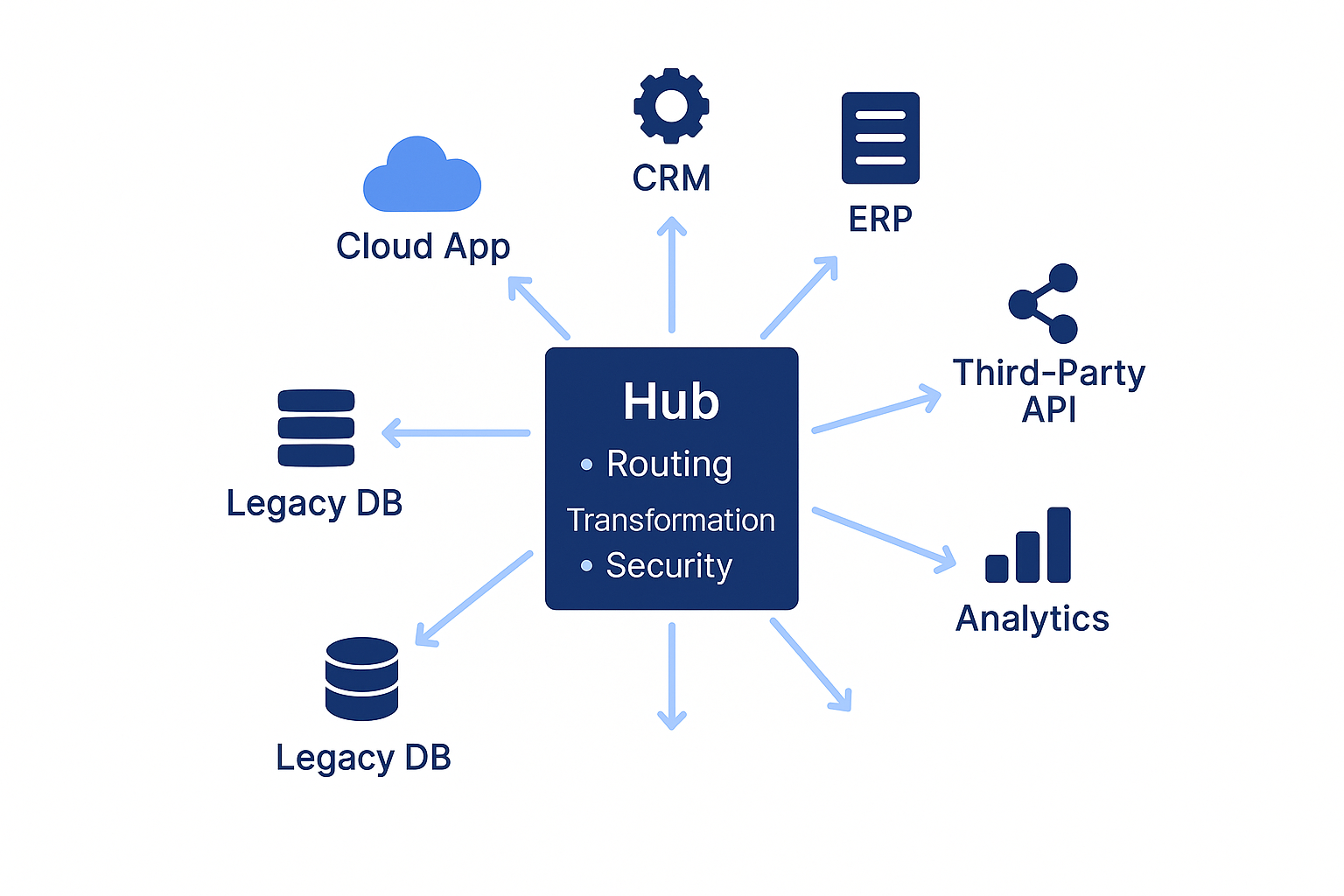

Hub-and-Spoke Architecture

In a hub-and-spoke model, a central integration hub (often called an Enterprise Service Bus or ESB) acts as a mediator between all systems. Each application connects to the hub through a single interface; the hub handles routing, data transformation, and protocol conversion.

This approach became popular because it dramatically reduces connection complexity. Connecting every pair of systems directly requires n(n-1)/2 links, a number that grows quadratically as systems are added. With a hub, each system connects only once, so n systems need only n connections.
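A quick calculation shows why the hub model reduces complexity: linking every pair of n systems directly takes n(n-1)/2 connections, while a hub needs only n. The function names below are illustrative.

```python
def point_to_point_links(n: int) -> int:
    """Direct links needed if every pair of n systems is connected: n(n-1)/2."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Links needed when each of n systems connects once to a central hub."""
    return n


for n in (5, 10, 50):
    print(f"{n} systems: {point_to_point_links(n)} point-to-point links "
          f"vs {hub_links(n)} hub links")
# 5 systems: 10 point-to-point links vs 5 hub links
# 10 systems: 45 point-to-point links vs 10 hub links
# 50 systems: 1225 point-to-point links vs 50 hub links
```

The gap widens quickly: going from 10 to 50 systems multiplies hub connections by 5 but point-to-point connections by more than 27.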

This hub-and-spoke pattern for system development integration works well for mid-to-large organizations with a moderate number of systems and standard data transformations.

The central hub provides scalability and reduces overall complexity. However, a poorly designed hub can become a bottleneck, and a hub deployed without redundancy becomes a single point of failure.

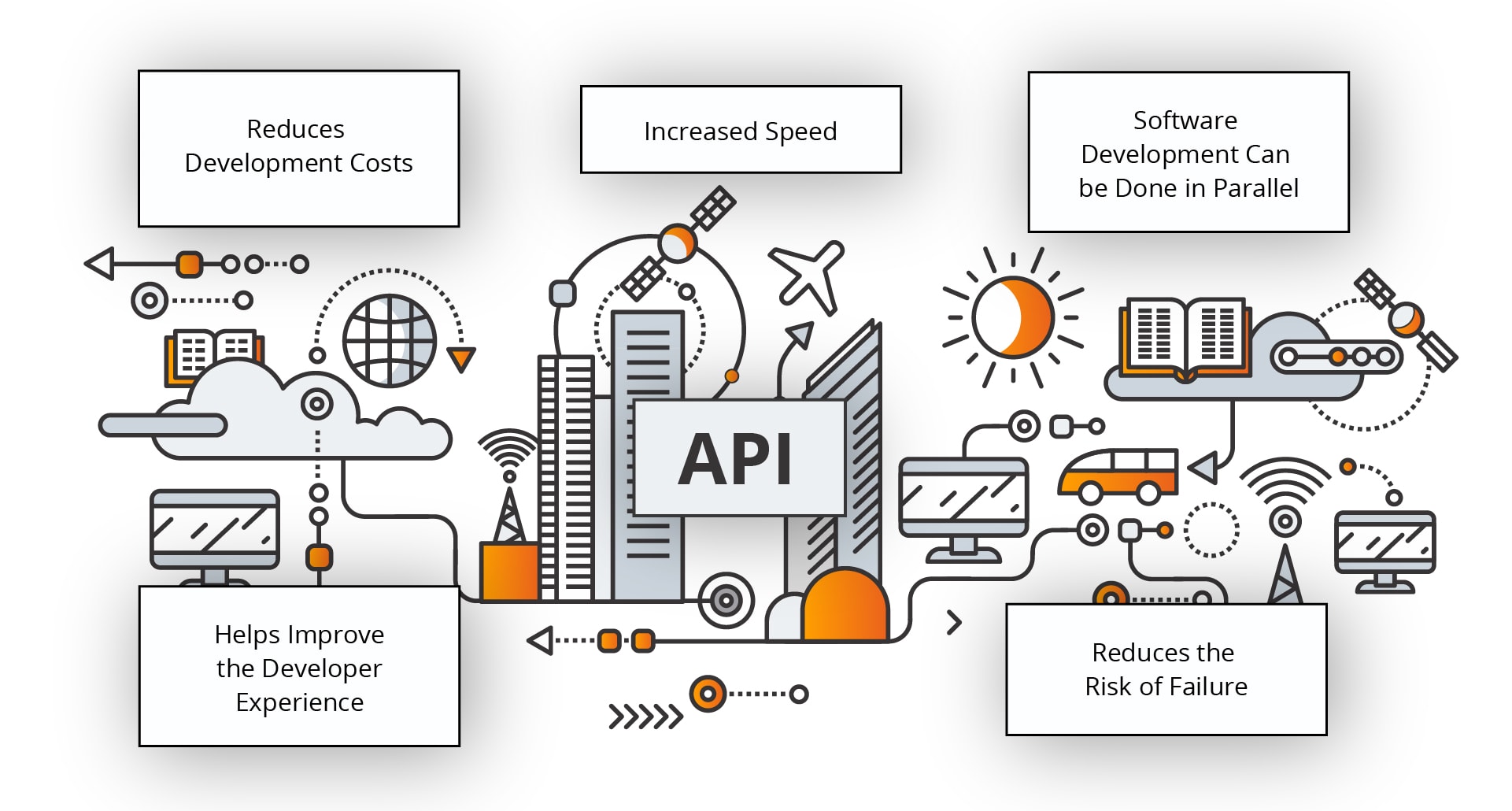

Microservices and API-First Approach

Rather than monolithic integration, microservices break functionality into small, independently deployable services that communicate via APIs. System development integration in a microservices architecture means each service owns its data and business logic; APIs define contracts between services. This has become increasingly popular for cloud-native organizations.

System development integration using microservices enables highly scalable, distributed environments where individual teams own their services and deploy them independently. This supports continuous deployment and makes it easier to retire or replace individual services without affecting the entire ecosystem. However, it increases operational complexity, requiring mature DevOps practices and sophisticated monitoring.
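A minimal in-process sketch of the "each service owns its data; APIs define contracts" idea. The service names, the `CustomerDTO` contract, and the `internal_score` field are hypothetical; in a real deployment the call from billing to customers would go over HTTP or gRPC rather than a direct method call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerDTO:
    """Hypothetical v1 API contract: the only shape other services ever see."""
    customer_id: str
    email: str


class CustomerService:
    """Owns customer data; other services must go through its public methods."""

    def __init__(self) -> None:
        # Private store (stand-in for this service's own database).
        self._rows: dict[str, dict] = {}

    def register(self, customer_id: str, email: str) -> CustomerDTO:
        self._rows[customer_id] = {"email": email, "internal_score": 0}
        return self.get(customer_id)

    def get(self, customer_id: str) -> CustomerDTO:
        row = self._rows[customer_id]
        # Internal fields such as internal_score never leak through the contract.
        return CustomerDTO(customer_id=customer_id, email=row["email"])


class BillingService:
    """Depends only on the CustomerService contract, not on its storage."""

    def __init__(self, customers: CustomerService) -> None:
        self._customers = customers

    def invoice_recipient(self, customer_id: str) -> str:
        return self._customers.get(customer_id).email
```

Because billing only sees `CustomerDTO`, the customer team can change its storage or internal fields without breaking billing, as long as the contract stays stable.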

Event-Driven Integration (Publish-Subscribe)

Systems publish events (e.g., “Order Created,” “Inventory Updated”) to a message broker or event stream. Other systems subscribe to relevant events and react asynchronously. This decouples systems in both time and space, meaning components don’t need to be online simultaneously to participate in workflows.

Event-driven architecture enables loose coupling, high scalability, and real-time processing.

This pattern naturally fits IoT and streaming data scenarios. Organizations implementing event-driven integration benefit from natural separation of concerns—each system reacts to events without needing to know about the systems that produced them.
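A toy illustration of publish-subscribe: the publisher emits an "OrderCreated" event without knowing who consumes it, and two independent handlers react. The broker class, event fields, and the 10-points-per-item loyalty rule are hypothetical. Unlike a real broker such as Kafka, this in-memory version delivers synchronously and stores nothing, so it shows the decoupling of producers from consumers but not the decoupling in time.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy stand-in for a message broker: routes events by topic name."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher does not know who (if anyone) is listening.
        for handler in self._subscribers[topic]:
            handler(event)


broker = InMemoryBroker()
stock = {"SKU-1": 10}
loyalty: dict[str, int] = {}


def update_inventory(event: dict) -> None:
    stock[event["sku"]] -= event["qty"]


def award_points(event: dict) -> None:
    loyalty[event["customer"]] = loyalty.get(event["customer"], 0) + event["qty"] * 10


broker.subscribe("OrderCreated", update_inventory)
broker.subscribe("OrderCreated", award_points)
broker.publish("OrderCreated", {"sku": "SKU-1", "qty": 2, "customer": "C-42"})
print(stock, loyalty)  # {'SKU-1': 8} {'C-42': 20}
```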

The Five Core Steps

Regardless of which architecture you choose, data typically moves through the same five steps. Understanding them helps keep your integration work aligned with proven practice.

- Extract

Retrieve data from the source system, whether through batch processes or real-time streaming. Your strategy defines extraction frequency based on business needs.

- Transform

This is where most system development integration projects invest significant effort. Map fields, validate data, apply business logic, and ensure format compatibility between systems. Complex business rules and legacy data formats mean transformation often accounts for 60-70% of effort.

- Route

Send data to the correct destination(s) based on predetermined rules. Your architecture determines how routing decisions are made—centrally through a hub, distributed across services, or event-based through topic subscriptions.

- Load

Store or integrate data into the target system. The loading strategy depends on whether updates need to be synchronous (immediate) or asynchronous (eventual).

- Monitor & Audit

Track successes, log errors, and maintain audit trails for compliance. Comprehensive monitoring is essential here, because integration failures often cascade across multiple dependent systems.
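The five steps can be sketched as a small batch pipeline. Everything here is illustrative: the record layout, the month/day/year source date format, the routing rule, and the in-memory "targets" standing in for real databases are assumptions, not a reference implementation.

```python
import logging
from datetime import datetime

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("integration")


def extract() -> list[dict]:
    # Stand-in for a batch query or stream read from the source system.
    return [
        {"id": "1", "amount": "19.99", "date": "03/01/2025", "channel": "web"},
        {"id": "2", "amount": "oops", "date": "03/02/2025", "channel": "store"},
    ]


def transform(record: dict) -> dict:
    # Map fields, validate types, and normalize the date to ISO 8601.
    return {
        "order_id": int(record["id"]),
        "amount": float(record["amount"]),  # raises ValueError on bad data
        "order_date": datetime.strptime(record["date"], "%m/%d/%Y").date().isoformat(),
        "channel": record["channel"],
    }


def route(record: dict) -> str:
    # Rule-based routing: choose a destination from the record's content.
    return "ecommerce_db" if record["channel"] == "web" else "pos_db"


def run_pipeline() -> dict[str, list[dict]]:
    targets: dict[str, list[dict]] = {"ecommerce_db": [], "pos_db": []}
    for raw in extract():
        try:
            clean = transform(raw)
        except (KeyError, ValueError) as exc:
            # Monitor & audit: rejected records are logged, not silently dropped.
            log.warning("rejected record %s: %s", raw.get("id"), exc)
            continue
        destination = route(clean)
        targets[destination].append(clean)  # Load (here: an in-memory list).
        log.info("loaded order %s into %s", clean["order_id"], destination)
    return targets
```

Note how most of the logic sits in `transform`, which matches the observation that transformation tends to dominate integration effort.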

From our experience at HBLAB, a critical insight is that transformation is where most projects face unexpected complexity.

Legacy data formats, evolving business rules, and data quality issues mean that transformation—the “T” in ETL—drives project timelines, costs, and success.

Key Benefits

When properly designed and implemented, system development integration delivers measurable business value that directly impacts your bottom line and competitive position.

Operational Efficiency Through Automation

System development integration automates manual, repetitive processes that drain resources and introduce errors. When a retailer connects its inventory, point-of-sale, and e-commerce systems, stock updates propagate instantly across all channels. This eliminates the manual synchronization that once required staff to export from one system, transform the data in spreadsheets, and import it into another. The result is a 30-40% reduction in manual data entry and associated errors.

Organizations implementing comprehensive integration find that processes once requiring days now complete in hours. Customer data flows automatically from CRM to billing systems. Order information updates inventory without human intervention. These efficiencies compound across the organization.

Significant Cost Reduction

Building unique integrations for each new system is expensive: each connection requires custom code, testing, deployment, and ongoing maintenance. A hub-and-spoke or API-first approach to system development integration reduces this burden by treating integration as a reusable platform rather than point-to-point connections.

Organizations report a 25-35% reduction in integration costs compared to point-to-point approaches, with savings compounding as the system landscape grows. Teams can reuse integration patterns, data transformations, and monitoring approaches across multiple projects. Plus, fewer integration points mean fewer places where things can break.

Faster Technology Refresh and Innovation

When systems are properly integrated, new features and capabilities deploy without waiting for manual data exchange or workarounds. Modern DevOps and continuous deployment pipelines move faster because system development integration has already solved the connectivity challenge.

Result: a 25% reduction in development time for companies using modern integration platforms with CI/CD pipelines. Your technical teams spend time on business logic and innovation instead of building custom data connectors.

Unified Business Intelligence and Data Insights

Integrated systems provide a single source of truth for critical data. Analytics teams can query unified data lakes and generate reports that span customer, product, finance, and operational dimensions without manual data consolidation overhead.

Result: a 40% improvement in decision-making speed and accuracy, according to enterprise studies, because leaders make decisions based on current, consistent data rather than conflicting versions from disparate systems.

Enhanced Customer Experience

Real-time system development integration enables personalized, responsive customer interactions. A CRM system integrated with order management, billing, and support systems means customer service representatives see the complete customer journey instantly. They see purchase history, pending orders, outstanding invoices, and support tickets without toggling between systems.

Organizations report 15-20% improvement in customer satisfaction scores, because service quality depends on having complete information immediately available.

Real Challenges

System development integration is powerful but not without genuine obstacles. Understanding these challenges and proven solutions helps ensure your initiative succeeds.

Legacy System Compatibility Issues

Older systems—mainframes, outdated ERP platforms, custom-built applications—often use obsolete technologies, proprietary data formats, or closed APIs. These legacy systems can’t easily “speak” to modern cloud applications, making integration more complex. 40% of enterprise integration projects cite legacy system compatibility as the primary blocker, delaying initiatives and increasing costs.

The solution involves API wrapping—introducing an API gateway that sits in front of legacy systems, translating modern API calls into legacy system commands. This approach doesn’t require touching the legacy code itself. For longer-term success, containerization using Docker and Kubernetes can encapsulate legacy components, enabling them to coexist with modern microservices. Gradually refactoring high-impact legacy modules in modern languages accelerates progress without overnight rewrites.
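A minimal sketch of API wrapping: a thin facade translates a modern, dictionary-style call into the commands a legacy system understands, without touching the legacy code. The `INQC` opcode, the fixed-width record layout, and both class names are invented for illustration; a real wrapper would typically sit behind an API gateway and talk to the legacy system over its actual protocol.

```python
from typing import Optional


class LegacyMainframe:
    """Hypothetical legacy system that only accepts fixed-width command strings."""

    # Record layout (invented): id (7 chars) + name (20 chars) + state (2 chars).
    _records = {"0000042": "0000042" + "ACME CORP".ljust(20) + "NY"}

    def execute(self, command: str) -> str:
        # Legacy protocol (invented): 4-char opcode followed by a 7-digit key.
        opcode, key = command[:4], command[4:11]
        if opcode != "INQC":
            return "ERR01"
        return self._records.get(key, "ERR02")


class CustomerApiWrapper:
    """Modern facade: translates dict/JSON-style calls into legacy commands."""

    def __init__(self, backend: LegacyMainframe) -> None:
        self._backend = backend

    def get_customer(self, customer_id: int) -> Optional[dict]:
        raw = self._backend.execute(f"INQC{customer_id:07d}")
        if raw.startswith("ERR"):
            return None
        # Parse the fixed-width response into named fields.
        return {
            "customer_id": int(raw[0:7]),
            "name": raw[7:27].strip(),
            "state": raw[27:29],
        }
```

A cloud CRM or analytics tool would call `get_customer(42)` (usually via a REST endpoint exposing it) and never see the fixed-width format.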

From our experience at HBLAB, we’ve seen organizations reduce effort by 50% using API-first wrapper approaches on legacy ERP systems. This allows modern tools like cloud CRM and analytics platforms to connect without touching expensive, risky mainframe modifications.

Data Inconsistency and Format Mismatches

Different systems use different data models, creating complexity. One system’s “CustomerID” is an integer; another stores it as a string with a prefix. Date formats differ, field definitions conflict, and duplicate records exist across systems. This isn’t just an inconvenience—inaccuracies translate into poor reporting, inadequate customer experiences, failed automations, and regulatory non-compliance.

Solving this requires designing a unified data model that standardizes formats for critical entities across workflows. For batch data, ETL (Extract-Transform-Load) jobs run on a defined schedule to extract, clean, deduplicate, and load data. When data must arrive with lower latency, ELT (Extract-Load-Transform) loads raw data into the target system first and transforms it there, so data lands sooner. Master data management (MDM) tools maintain authoritative customer and product records, and your integration platforms reference MDM as the source of truth.
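A small sketch of mapping records into a unified model: it normalizes the CustomerID example above (integer vs. prefixed string), converts several date formats to ISO 8601, and drops duplicates. The `CUST-` prefix, the accepted date formats, and the "first record wins" rule are assumptions; real MDM tools apply configurable survivorship rules instead.

```python
from datetime import datetime

# Assumed source date formats; extend per source system.
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%b %d, %Y")


def normalize_customer_id(value) -> int:
    """Accepts 1042, '1042', or 'CUST-1042' and returns the integer 1042."""
    text = str(value).strip().upper()
    if text.startswith("CUST-"):
        text = text[len("CUST-"):]
    return int(text)


def normalize_date(value: str) -> str:
    """Try each known format and return the date in ISO 8601 form."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def to_canonical(records: list[dict]) -> list[dict]:
    """Map records into the unified model and drop duplicates by customer_id."""
    seen: dict[int, dict] = {}
    for rec in records:
        cid = normalize_customer_id(rec["customer_id"])
        canonical = {
            "customer_id": cid,
            "email": rec["email"].strip().lower(),
            "signup_date": normalize_date(rec["signup_date"]),
        }
        seen.setdefault(cid, canonical)  # first record wins in this sketch
    return list(seen.values())
```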

Security and Compliance

Every system development integration point is a potential security vulnerability. Data moves across multiple systems, networks, and potentially cloud boundaries; encryption, access control, and audit logging must be enforced consistently. Data breaches, failed compliance audits, and customer trust erosion result when your integration doesn’t prioritize security.

Security-by-design means encrypting data in transit (TLS 1.2+) and at rest (AES-256). Implement zero-trust architecture—assume all systems are untrusted; verify every connection, user, and data access. Use API rate limiting and authentication standards like OAuth 2.0 and mutual TLS to prevent unauthorized access to endpoints. Maintain audit logs tracking all data movements, transformations, and access for compliance and forensics. Deploy API gateways with security policies that enforce encryption, malware scanning, and threat detection across your architecture.
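For the transport-security points, Python's standard `ssl` module can express "TLS 1.2 or newer, and clients must present a certificate" (mutual TLS) in a few lines. This is a sketch of a server-side context only; the certificate file paths are placeholders, and OAuth 2.0, rate limiting, and audit logging would live elsewhere (typically in the API gateway).

```python
import ssl
from typing import Optional


def make_mtls_server_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server-side TLS context that refuses protocols older than TLS 1.2
    and requires every client to present a certificate (mutual TLS)."""
    # PROTOCOL_TLS_SERVER does not load the system CA store, so only CAs
    # added explicitly below are trusted for client certificates.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # reject clients without a valid cert
    if certfile:
        # The server's own certificate and private key (placeholder paths).
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    if client_ca_file:
        # Trust client certificates issued by this (e.g. internal) CA.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

In use, the context would wrap a listening socket with `ctx.wrap_socket(sock, server_side=True)`, or be passed to whichever server framework terminates TLS.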

Scalability and Performance Bottlenecks

What works for 100 transactions per second may collapse at 10,000 TPS. Point-to-point system development integration approaches don’t scale; monolithic integration hubs become bottlenecks under load. Organizations expanding system development integration must plan for growth from the beginning.

Cloud-native, event-driven system development integration solves this through microservices—small, independently scalable services instead of monolithic hubs. Message queues and event streams like Kafka decouple systems temporally; requests queue up and process asynchronously without overloading targets. Deploying system development integration in cloud-native environments like Kubernetes enables auto-scaling based on load. Implementing caching with Redis reduces repeated queries to backend systems, improving throughput for system development integration pipelines.
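The caching point follows the cache-aside pattern: read from the cache, and on a miss fetch from the backend and store the result with an expiry. To keep the sketch self-contained, a small in-process `TTLCache` class (hypothetical) stands in for Redis.

```python
import time
from typing import Any, Callable


class TTLCache:
    """In-process stand-in for Redis: values expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self._clock() + self._ttl, value)


def cached_lookup(cache: TTLCache, key: str, fetch: Callable[[str], Any]) -> Any:
    """Cache-aside: serve from cache if fresh, otherwise hit the backend once."""
    value = cache.get(key)
    if value is None:
        value = fetch(key)
        cache.set(key, value)
    return value
```

With redis-py, the same pattern maps onto `get` and `set(key, value, ex=ttl_seconds)`. One limitation of this sketch: a backend result of `None` is never cached, so it is fetched again on every call.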

Organizational Change and Adoption

System development integration projects often fail not for technical reasons but because stakeholders don’t understand the changes, resist new workflows, or lack training. Technical excellence means nothing if teams don’t adopt the system development integration capabilities you’ve built.

Communicate the “why” so teams understand how system development integration improves their daily work—faster reports, fewer manual tasks, better customer insights. Invest in training not just for technical teams but for business users who’ll interact with integrated systems. Pilot system development integration initiatives with departments most motivated to see change; their success stories drive broader adoption. Monitor and iterate post-launch; refinements often come from end-user insights, not technical teams.

Integration Trends Reshaping 2025

The integration landscape is evolving rapidly. Organizations staying competitive must understand these emerging trends.

AI-Driven and Intelligent Integration

Artificial intelligence is automating the most manual, error-prone aspects of integration: data mapping, field transformation, and error detection. Machine learning models learn from historical mappings, suggesting when “CustomerID in System A matches UserID in System B.” When new systems join your architecture, AI suggests mappings, reducing manual configuration time by 60-80%.

AI-powered anomaly detection identifies unusual data patterns that signal corruption or operational issues. Integration platforms with intelligent error handling learn which errors are critical (stop and alert) versus recoverable (queue and retry). AI can even suggest fixes: “Field X is missing; the last known value is Y; should we use Y provisionally while investigating?”

45% of enterprises have already added AI to their integration platforms, with adoption expected to surge 20% by year-end 2025. Organizations using AI-assisted integration realize 25-30% faster project timelines, which is especially valuable for complex legacy system integrations.

Cloud-Native Solutions

Integration is shifting from on-premise middleware to cloud-native, multi-tenant SaaS platforms designed for hybrid (on-prem + cloud) and multi-cloud environments. Integration Platforms as a Service (iPaaS) offer what traditional Enterprise Service Buses cannot: elastic scaling, serverless execution (pay per integration run), and multi-cloud support.

70% of enterprises will rely on cloud-based solutions by 2025, up from 45% in 2023. Organizations are ditching heavyweight, maintenance-intensive ESBs in favor of lighter, faster iPaaS platforms. Cloud-native platforms provide low-code/no-code connectors for 500+ popular SaaS applications, automatically scale to handle traffic spikes, and operate seamlessly across AWS, Azure, Google Cloud, and on-prem systems.

Event-Driven Architecture

Organizations are moving from batch, scheduled integration (data syncs once per night) to event-driven, real-time architectures. When something happens in one system, dependent systems react instantly. A customer makes a purchase; loyalty points update immediately; personalized recommendations appear in real-time. This happens through event streaming platforms.

Real-time integration enables instant fraud detection (payments systems immediately identify suspicious patterns), IoT integration (billions of sensors stream data requiring millisecond processing), and modern customer experiences. 65% of enterprises are piloting or deploying event-driven approaches as of 2025, up from 35% in 2023.

Composable, Modular Architectures

Rather than building monolithic integration platforms, organizations adopt composable architectures with modular, reusable components assembled like Lego blocks. Teams standardize on reusable patterns—data validation, encryption, logging. This modular approach means assembling rather than building from scratch, accelerating project delivery.

Security-First Integration

Security is no longer an afterthought. Modern platforms build encryption, zero-trust authentication, and compliance monitoring into their foundation. Companies implementing security-focused practices report up to 30% reduction in data breach incidents. This matters because compromised integration can expose multiple organizations downstream.

DevOps and Continuous Delivery for Integration

Teams are applying DevOps principles to integration development: version control, automated testing, continuous deployment of integration code, and monitoring. Organizations using CI/CD report 40-50% faster project delivery. Integration becomes just another software development discipline, not a separate silo.


Implementing: The Five-Phase Roadmap

Successful system development integration doesn’t happen overnight. Here’s a proven, phase-by-phase approach.

Phase 1: Assessment & Strategy

Begin your initiative by mapping existing systems, data flows, and pain points. Define clear business goals: reduce order processing time by 50%, eliminate manual data entry, prevent data inconsistencies. Identify quick wins and long-term priorities for your roadmap.

Stakeholder interviews with business and IT teams surface hidden complexity. A system inventory documents all applications, databases, data flows, and current pain points. Requirements gathering clarifies which data needs to flow where, along with latency expectations, volume requirements, and reliability standards.

Deliverables include a current state assessment, target architecture design (hub-and-spoke, microservices, event-driven, or hybrid), and roadmap with priorities. Define success metrics: instead of vague goals, commit to specific targets. “Improve efficiency” means nothing; “reduce inventory sync from 24 hours to 1 hour” is measurable success.

Phase 2: Pilot & Proof of Concept

Prove your approach works with real systems. Select 2-3 high-impact opportunities (often quick wins like connecting point-of-sale to inventory, or CRM to financial systems). Build them on your target platform, test the end-to-end data flow, validate error handling, confirm that performance meets requirements, and verify that security controls function.

Pilot with one department or region before full rollout. Success means data flows with an error rate below 0.1%, latency meets requirements, security controls function properly, and the cost per integration is understood. Lessons learned from pilots inform the next phase.
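The pilot success criteria can be turned into an automated go/no-go check. This sketch assumes you record per-run message totals and per-message latencies; the 500 ms p95 latency threshold is a placeholder for your own requirement, while the 0.1% error-rate target comes from the criteria above.

```python
def p95(values: list[float]) -> float:
    """95th percentile by the nearest-rank method (values must be non-empty)."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer math
    return ordered[rank - 1]


def pilot_passes(total: int, failed: int, latencies_ms: list[float],
                 max_error_rate: float = 0.001, max_p95_ms: float = 500.0) -> bool:
    """True if the error rate is below 0.1% and p95 latency meets the target."""
    error_rate = failed / total
    return error_rate < max_error_rate and p95(latencies_ms) <= max_p95_ms


fast = [100.0] * 95 + [900.0] * 5
print(pilot_passes(total=10_000, failed=5, latencies_ms=fast))   # True
print(pilot_passes(total=10_000, failed=20, latencies_ms=fast))  # False (0.2% errors)
```

Running a check like this at the end of each pilot run gives the go/no-go decision a fixed, repeatable basis instead of an impression.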

Phase 3: Scale & Standardization

Extend successful integration to additional systems, departments, and regions. Establish reusable patterns: standardize data transformation, error handling, logging, and monitoring. Define governance: who can create integrations? What standards apply? How is change managed?

Train teams on your integration platform, patterns, and best practices. Deliver a scaled production rollout covering 20-40+ systems. Document standards, create runbooks, and develop operational procedures.

Phase 4: Optimize & Innovate

Improve performance, reliability, and cost. Identify bottlenecks and optimize throughput. Right-size infrastructure to reduce costs. Introduce advanced features: AI-assisted mapping, real-time processing, event-driven capabilities.

Retire old integrations as new solutions stabilize. Decommission point-to-point connections and fragile legacy approaches as robust, modern alternatives prove themselves.

Phase 5: Sustain & Evolve

Maintain health through monitoring and incident response. Train teams continuously as your landscape evolves. Review your strategy annually—are new systems and technologies changing your requirements? Make incremental improvements driven by operational metrics and user feedback.

AI-Powered Integration: Reshaping Connectivity

Artificial intelligence is fundamentally changing how organizations design and operate integration. This isn’t hype—AI-powered integration is actively transforming the field.

Automated Data Mapping

Traditional integration required developers to manually map fields between systems. “CustomerID in System A maps to UserID in System B; EmailAddress maps to ContactEmail.” For complex efforts with hundreds of fields, this becomes tedious and error-prone. Manual mapping errors corrupt data downstream.

AI learns from historical mappings. Given a new system with fields like “cust_id, cust_email, cust_phone,” the platform suggests: “cust_id likely maps to CustomerID,” “cust_email to EmailAddress.” Accuracy exceeds 90% for common fields. Developers review and confirm rather than build from scratch, reducing mapping configuration time by 60-80%.
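The platforms described here use trained models; as a much simpler stand-in, this sketch scores candidate mappings by token overlap (Jaccard similarity) after splitting snake_case and CamelCase names and ignoring tokens shared by every source field (such as the `cust` prefix). It is a heuristic, not machine learning, and the 0.3 threshold is an arbitrary assumption. As in the workflow described, the output is a set of suggestions for a developer to confirm.

```python
import re


def tokens(name: str) -> set[str]:
    """Split snake_case / CamelCase field names into lowercase tokens."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", name)  # CustomerID -> Customer ID
    return {t for t in re.split(r"[\s_\-]+", spaced.lower()) if t}


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def suggest_mappings(source_fields: list[str], target_fields: list[str],
                     threshold: float = 0.3) -> dict[str, tuple[str, float]]:
    src_tokens = {f: tokens(f) for f in source_fields}
    # Tokens common to every source field (e.g. "cust") carry no signal.
    shared = set.intersection(*src_tokens.values()) if len(src_tokens) > 1 else set()
    suggestions = {}
    for field, toks in src_tokens.items():
        distinctive = (toks - shared) or toks
        best, score = max(((t, jaccard(distinctive, tokens(t))) for t in target_fields),
                          key=lambda pair: pair[1])
        if score >= threshold:
            suggestions[field] = (best, round(score, 2))
    return suggestions


print(suggest_mappings(["cust_id", "cust_email", "cust_phone"],
                       ["CustomerID", "EmailAddress", "PhoneNumber"]))
# {'cust_id': ('CustomerID', 0.5), 'cust_email': ('EmailAddress', 0.5),
#  'cust_phone': ('PhoneNumber', 0.5)}
```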

Anomaly Detection

Consider a retail integration syncing customer purchase data between point-of-sale and data warehouse. Normally, average transactions are $50-$200. One morning, data shows transactions of $50,000 each. Anomaly detection trained on historical patterns immediately flags this as unusual. The system alerts operators: “Potential data corruption detected.” Investigation reveals a decimal-point error caught before corrupting the warehouse.

Continuous data quality monitoring means organizations catch issues immediately rather than discovering problems weeks later when analytics reveal inconsistencies. AI-powered anomaly detection acts as an early warning system.
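The decimal-point scenario above can be caught even by a basic statistical check. This sketch uses a z-score against historical transaction amounts as a stand-in for the trained anomaly-detection models described; the sample history and the threshold of 4 standard deviations are assumptions.

```python
import statistics


def flag_anomalies(history: list[float], incoming: list[float],
                   z_threshold: float = 4.0) -> list[float]:
    """Flag incoming values far outside the historical distribution (z-score)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    return [x for x in incoming if abs(x - mean) / stdev > z_threshold]


# Typical point-of-sale transactions in the $50-$200 range.
history = [52.0, 75.5, 120.0, 199.0, 88.25, 150.0, 61.0, 140.0]
print(flag_anomalies(history, [95.0, 180.0, 50000.0]))  # [50000.0]
```

A fixed z-score assumes roughly stable, unimodal data; production systems typically account for seasonality and per-store baselines, which is where learned models help.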

Intelligent Error Handling

Integration encounters errors constantly: required fields missing, systems temporarily unavailable, format incompatibilities. Traditional approaches either fail the entire batch (poor reliability) or let bad data through (poor quality).

Intelligent integration learns which errors are critical (stop and alert) versus recoverable (queue and retry later versus skip with logging). AI can even suggest fixes: “Field X is missing; last known value is Y; use Y provisionally while investigating?” This reduces manual interventions and accelerates resolution.
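The critical-versus-recoverable split can be sketched with two exception types: recoverable errors are retried with exponential backoff, while critical errors (and retries that run out) go to a dead-letter list for operators. The class names and backoff values are hypothetical, and the "intelligent" part, learning which category an error belongs to, is replaced here by a fixed rule.

```python
import time
from typing import Any, Callable


class RecoverableError(Exception):
    """Transient problem, e.g. the target system is temporarily unavailable."""


class CriticalError(Exception):
    """Data problem that retrying will not fix, e.g. a required field is missing."""


def deliver(send: Callable[[dict], Any], record: dict, dead_letter: list[dict],
            max_attempts: int = 4, base_delay: float = 0.5) -> Any:
    """Retry recoverable failures with exponential backoff; route critical
    failures (or exhausted retries) to a dead-letter list for operators."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(record)
        except RecoverableError:
            if attempt == max_attempts:
                break
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
        except CriticalError as exc:
            dead_letter.append({"record": record, "reason": str(exc)})
            return None
    dead_letter.append({"record": record, "reason": "retries exhausted"})
    return None
```

The batch keeps flowing either way: one bad record is parked with a reason instead of failing everything or slipping through unvalidated.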

When System Development Integration Is the Right Choice

Not every organization needs a full system development integration program immediately. The key is knowing when integration will actually deliver a clear return.

Use system development integration when three or more systems must share data regularly and manual work is causing real pain. If teams are constantly exporting, cleaning, and re-importing data, or if you are adding and retiring systems frequently, a defined integration architecture helps you keep control and avoid rework.

Prioritize integration when real-time or near-real-time data is critical. Inventory, customer records, and financial transactions often cannot wait for nightly batch jobs, especially if you want consistent customer experiences across channels and stronger compliance and audit trails.

If you only have two systems with simple, occasional data exchange, a direct API link might be enough. In rare cases, replacing multiple legacy tools with one modern platform is faster than integrating them, but this tends to be costly and disruptive, so it should be carefully evaluated before skipping integration.

System Integration Testing: Ensuring Production Quality

Before any system development integration goes live, structured testing is essential. System Integration Testing (SIT) checks whether connected components behave correctly when they operate together, not just in isolation. It focuses on real data flows, system interactions, and how the overall solution performs under realistic conditions.

SIT typically validates that transformations are correct, data moves through the integration paths as designed, and expected behaviours occur when downstream services are slow or unavailable. It also covers performance targets, such as response time and throughput, and confirms that encryption, access control, and logging work as intended.
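A sketch of one SIT-style check using Python's `unittest`: it verifies both the normal data path and the expected behaviour when the downstream service is unavailable. `OrderSync` and the inventory classes are hypothetical. In a real SIT run, the healthy client would be the actual inventory service in a production-like environment and the outage would be injected there; the test doubles only keep the example self-contained.

```python
import unittest


class InventoryUnavailable(Exception):
    pass


class OrderSync:
    """Hypothetical integration component: pushes orders to an inventory
    service and parks them in a retry queue when the service is down."""

    def __init__(self, inventory_client) -> None:
        self.inventory = inventory_client
        self.retry_queue: list[dict] = []

    def sync(self, order: dict) -> str:
        try:
            self.inventory.reserve(order["sku"], order["qty"])
            return "synced"
        except InventoryUnavailable:
            self.retry_queue.append(order)
            return "queued"


class HealthyInventory:
    """Test double that records reservations."""

    def __init__(self) -> None:
        self.reserved: list[tuple[str, int]] = []

    def reserve(self, sku: str, qty: int) -> None:
        self.reserved.append((sku, qty))


class DownInventory:
    """Test double simulating an unavailable downstream service."""

    def reserve(self, sku: str, qty: int) -> None:
        raise InventoryUnavailable("503 from inventory service")


class OrderSyncIntegrationTest(unittest.TestCase):
    def test_order_reaches_inventory(self):
        inventory = HealthyInventory()
        self.assertEqual(OrderSync(inventory).sync({"sku": "A1", "qty": 2}), "synced")
        self.assertEqual(inventory.reserved, [("A1", 2)])

    def test_downstream_outage_queues_order(self):
        sync = OrderSync(DownInventory())
        self.assertEqual(sync.sync({"sku": "A1", "qty": 2}), "queued")
        self.assertEqual(sync.retry_queue, [{"sku": "A1", "qty": 2}])
```

Saved as a test module, it runs with `python -m unittest <module_name>`.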

This testing should happen in an environment that closely mirrors production, including similar data volume and network conditions. Running SIT only in small development setups often hides scalability and timing issues that will appear when real users and real traffic hit the integrated system.

About HBLAB

HBLAB is a software development and integration partner that helps organizations connect systems, modernize legacy platforms, and deliver reliable digital products at scale. With 10+ years of delivery experience and a team of 630+ professionals, HBLAB supports end-to-end execution—from discovery and architecture to development, QA, integration testing, and ongoing operations.

For integration-heavy programs, HBLAB focuses on practical architectures (API-first, event-driven, and middleware-based approaches) designed to reduce complexity and keep future changes manageable.

HBLAB has achieved CMMI Level 3 certification, reflecting a structured delivery model and consistent process discipline for enterprise projects.

To help clients stay competitive in 2025, HBLAB also highlights its work in AI-powered solutions since 2017, including applying AI to automation, analytics, and intelligent workflows on top of modern integration foundations. HBLAB additionally notes collaboration with VNU’s Institute for AI, supporting applied research that can translate into real delivery outcomes.

👉 Looking for a partner to connect fragmented systems, accelerate releases, and reduce operational friction?

CONTACT HBLAB FOR A FREE CONSULTATION!

FAQ: Key Questions Answered

Q: What is system development integration in software development?

A: It is the practice of connecting separate applications, databases, and infrastructure so they operate as one coordinated system. Integration typically relies on middleware, APIs, messaging, and orchestration tools so data can move smoothly and processes can span multiple systems without manual intervention.

Q: What is the purpose of SIT (System Integration Testing)?

A: SIT ensures that integrated components work correctly when combined, not just individually. It validates end-to-end flows, checks that requirements are met, and reduces the risk of failures or data issues when the integrated solution is deployed to production.

Q: What does SI mean in consulting?

A: SI usually stands for Systems Integration. Consulting firms that offer SI services help clients design architectures, choose platforms, and implement integrations so different technologies and vendors’ products work together reliably.

Q: What are the four types of system development integration?

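A: Common categories include vertical integration (subsystems grouped by function into separate silos), star integration (each system connected directly to every other system it exchanges data with, also called point-to-point or "spaghetti" integration, which is hard to scale), horizontal integration (all systems connect through a dedicated middleware layer such as an ESB, the hub approach), and common data format integration (systems exchange data through a shared, application-independent format).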

Q: What are the five steps in the process?

A: Most methods follow five stages: extract data from sources, transform and validate it, route it to the right targets, load it into those systems, and then monitor and audit the flows to keep quality and compliance under control.

Q: Is system development integration part of DevOps?

A: They overlap but are not the same. DevOps focuses on how teams build, test, and release software quickly and reliably; integration focuses on how systems talk to each other. In practice, DevOps pipelines often automate deployment and testing of integration components as well.

Q: What are the 7 phases of DevOps?

A: Many models describe the lifecycle as continuous planning, coding, building, testing, releasing, deploying, and monitoring, with feedback looping through all stages for ongoing improvement.

Q: Which is better—DevOps or SRE?

A: They address different aspects. DevOps is a cultural and process framework aimed at speed and collaboration, while Site Reliability Engineering (SRE) provides concrete practices for reliability, such as SLOs and error budgets. Mature organizations use both together rather than choosing one over the other.

Q: What is the difference between CI and CD?

A: Continuous Integration (CI) means merging changes frequently and running automated tests to catch integration issues early. Continuous Delivery or Deployment (CD) takes the tested code and pushes it to production or a production‑like environment in an automated, repeatable way.

Q: What is the future of integration in this area?

A: Key trends include AI‑assisted mapping and anomaly detection, wider use of cloud‑native integration platforms, event‑driven designs for real‑time data, and security baked into integration flows. Analysts expect the global integration market to grow strongly through the next decade as digital initiatives expand.

Q: What is the difference between an API and integration?

A: An API is a defined interface to a single system or service. Integration is the broader discipline of wiring multiple systems together using APIs plus logic for transformation, routing, error handling, security, and monitoring.

Q: What is another name for this type of integration work?

A: It is often called enterprise application integration (EAI), systems integration, middleware integration, or simply IT systems integration, depending on the organization and context.

Conclusion

Integration between systems has become a strategic requirement rather than a nice‑to‑have. Organizations that connect their core platforms, channels, and data sources gain faster processes, better insight, and more consistent customer experiences than those stuck with manual transfers and isolated tools.

The most successful programs focus on clear business outcomes, follow a phased roadmap, and combine solid engineering practices with good change management. If internal capacity is limited, working with an experienced integration partner can shorten timelines and reduce risk while keeping long‑term flexibility in mind.
