Machine learning models have transformed how organizations extract value from data, yet most remain trapped in experimental notebooks, never reaching production environments where they can deliver business impact.

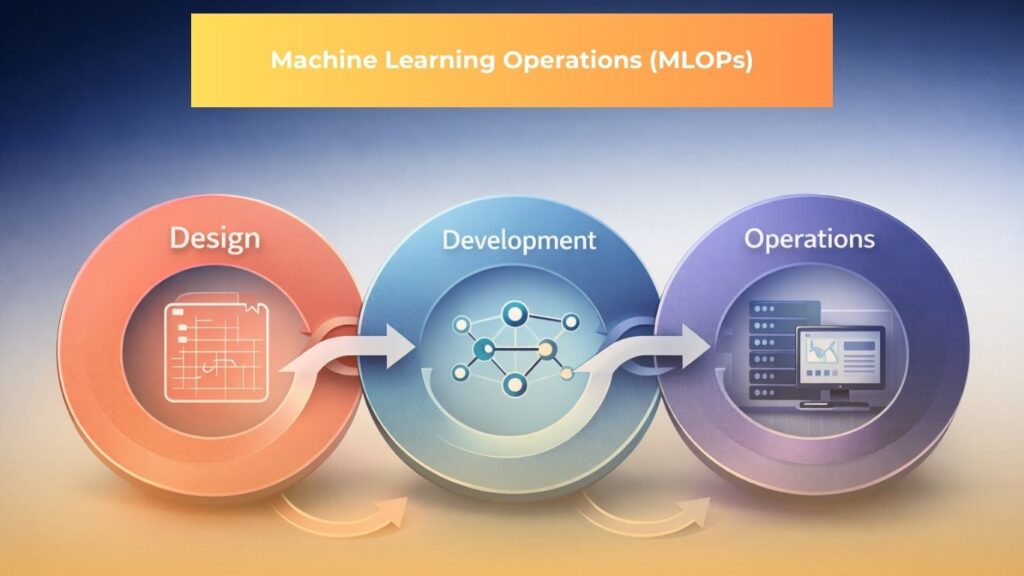

What is MLOps?

MLOps, short for machine learning operations, represents a set of practices designed to create an assembly line for building and running machine learning models.

The discipline enables organizations to automate tasks, deploy models quickly, and ensure seamless cooperation among everyone involved in the machine learning pipeline, including data scientists, ML engineers, and IT operations teams.

The term combines machine learning with DevOps principles, applying software engineering best practices to machine learning projects. MLOps aims to reduce the time and resources required to run data science models by incorporating the continuous integration and continuous delivery (CI/CD) methodology from DevOps.

This creates an automated assembly line for each step in creating a machine learning product.

Organizations collect massive amounts of data containing valuable insights into their operations and potential for improvement. Machine learning, a subset of artificial intelligence, empowers businesses to leverage this data with algorithms that uncover hidden patterns revealing actionable insights.

However, as ML becomes increasingly integrated into everyday operations, managing these models effectively becomes paramount to ensure continuous improvement and deeper insights.

Why Organizations Need MLOps

Before MLOps, managing the ML lifecycle was a slow and laborious process, primarily due to the large datasets required in building business applications.

Traditional machine learning development involves significant resources, hands-on time from data scientists, and disparate team involvement, often resulting in teams working in silos.

The machine learning workflow begins with data scientists asking the right questions and identifying features most relevant to the task at hand. Once an initial set of features is defined, teams must identify, aggregate, clean, and annotate known datasets that can train the model to recognize those features.

An inherent disconnect exists between data scientists developing models and the engineers who must operationalize them in production-ready applications. Each group works in its own silo with unique mindsets, processes, concepts, and tool stacks.

MLOps addresses these challenges by:

- Creating standardized workflows that reduce the complexity and time of moving models into production

- Enhancing collaboration and communication across teams that are often siloed, including data science, development, and operations

- Streamlining the interface between research and development processes and infrastructure in general

- Operationalizing specialized hardware like GPUs, particularly in production environments

- Making infrastructure transparent so teams can understand and monitor ML infrastructure and compute costs at all stages, from development to production

- Standardizing the ML process to make it more auditable for governance and regulation purposes

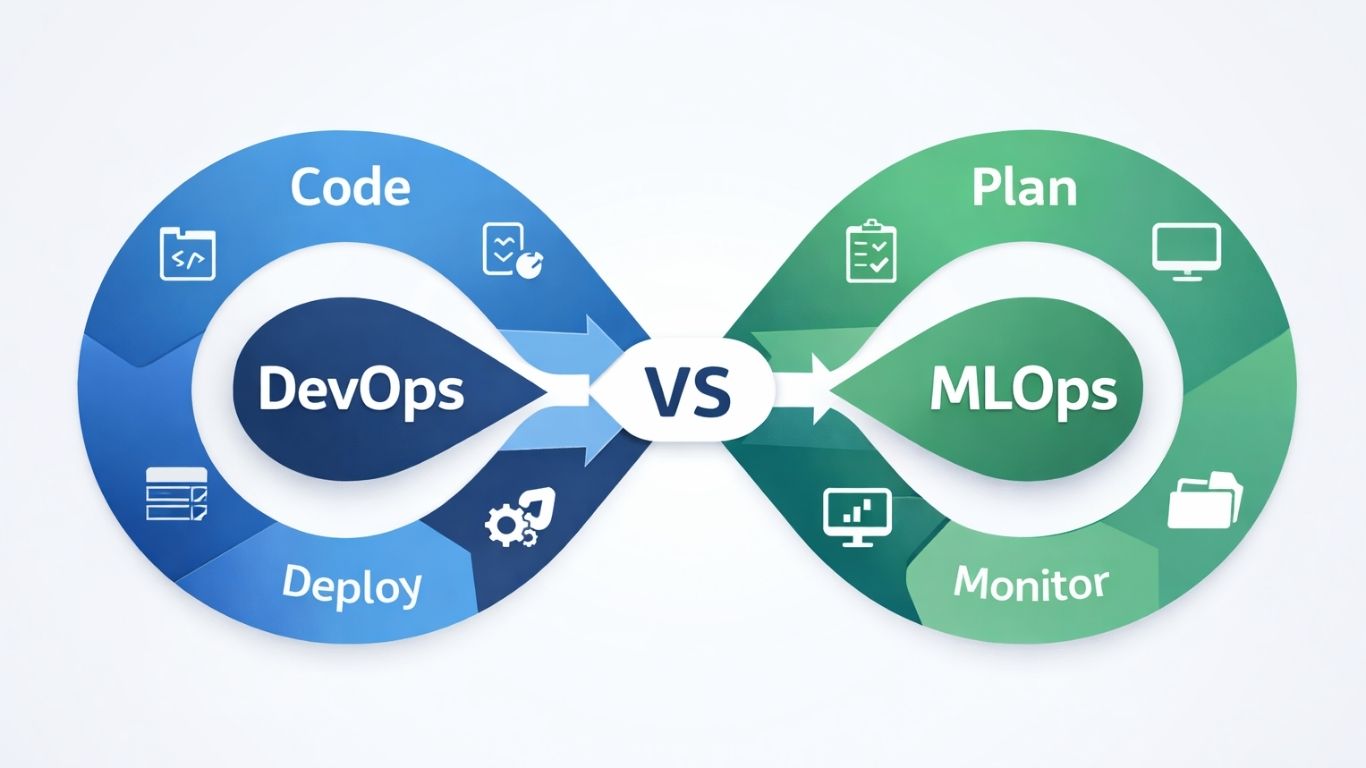

What Makes MLOps Different From DevOps

DevOps focuses on streamlining the development, testing, and deployment of traditional software applications, emphasizing collaboration between development and operations teams to automate processes and improve software delivery speed and quality.

MLOps builds upon these principles and applies them specifically to the machine learning lifecycle, going beyond code deployment to encompass data management, model training, monitoring, and continuous improvement.

Critical distinctions include:

Traditional software applications execute deterministic logic, while machine learning models incorporate probabilistic predictions that can change based on new data. This fundamental difference requires different testing, validation, and monitoring strategies.

Software code changes are typically versioned and deployed, but ML systems require versioning of data, models, and code simultaneously. The interplay between these three elements creates complexity that traditional DevOps tools were not designed to handle.

Software applications degrade primarily through bugs or infrastructure failures. Machine learning models can degrade silently through data drift, where the statistical properties of the production data diverge from the training data, even when the code and infrastructure remain unchanged.

DevOps emphasizes rapid iteration and deployment. MLOps must balance this velocity with the need for extensive experimentation, model validation, and performance monitoring that are unique to machine learning systems.

The infrastructure requirements differ substantially. While traditional applications may need CPU resources and storage, ML workloads often require specialized hardware like GPUs and TPUs for training, along with distributed computing frameworks to handle massive datasets.

Components of ML Infrastructure

Data Ingestion and Management

Data ingestion capabilities form the core of any machine learning infrastructure. These capabilities collect data needed for model training, refinement, and application from a wide range of sources.

Tools enable data from disparate systems to be aggregated and stored without requiring significant upfront processing, allowing teams to leverage real-time data effectively and collaborate on dataset creation.

Organizations must carefully consider where to conduct machine learning workflows. Requirements for on-premises versus cloud operations can differ significantly.

During the training stage, focus should primarily be on operational convenience and cost considerations. Regulations and security relating to data are also important considerations when deciding where to store training data.

Storage infrastructure requires special attention. An automated ML pipeline should have access to an appropriate volume of storage according to the requirements of the models.

Data-hungry models may require petabytes of storage. Teams need to consider in advance where to locate this storage, whether on-premises or in the cloud.

Feature Engineering and Selection

Feature engineering represents one of the most critical stages in machine learning development. The process involves transforming raw data into refined features that machine learning algorithms can effectively process.

Strong feature engineering directly impacts model accuracy and performance.

Machine learning feature selection is a process that refines how many predictor variables are used in a model. The number of features a model includes directly affects how difficult it is to train, understand, and run.

Automated feature selection can be scripted to use one or more algorithmic methods such as wrapper, filter, or embedded approaches.
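
As a rough illustration of the filter approach, the sketch below ranks features by the absolute Pearson correlation with the target and keeps the top `k`. The column names, the toy data, and the `select_features` helper are hypothetical, and correlation is only one of several possible filter scores.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(var_x * var_y)

def select_features(columns, target, k):
    """Filter method: score each feature on its own, then keep the k best."""
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]

# Hypothetical toy dataset: three candidate features and one target
columns = {
    "tenure_months": [1, 2, 3, 4, 5, 6],
    "support_calls": [5, 3, 4, 1, 2, 0],
    "constant_flag": [1, 1, 1, 1, 1, 1],
}
target = [1, 2, 3, 4, 5, 6]

selected = select_features(columns, target, k=2)
print(selected)
```

Because a filter scores each feature independently of any model, it is cheap to run inside a pipeline, but it can miss features that only matter in combination. Wrapper and embedded methods address that at a higher compute cost.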

Feature stores provide a centralized repository for storing and managing features used in model training. These systems promote consistency and reusability of features across different models and projects, ensuring teams use the most relevant and up-to-date features for their machine learning initiatives.

Model Training and Selection Infrastructure

Model selection determines which model fits best for machine learning implementations based on model performance, complexity, maintainability, and available resources. The model selection process determines what data is ingested, which tools are used, which components are required, and how components are interlinked.

Training infrastructure must support the computational demands of modern machine learning. Hardware used for machine learning can have a huge impact on performance and cost.

GPUs are typically used to run deep learning models, while CPUs are used to run classical machine learning models. In some cases where traditional ML uses large volumes of data, it can also be accelerated by GPUs using frameworks like NVIDIA RAPIDS.

The efficiency of the CPU or GPU for algorithms being used will affect operating costs, cloud hours spent waiting for processes to complete, and by extension, time to market.

When building machine learning infrastructure, teams should find the balance between underpowering and overpowering resources. Underpowering may save upfront costs but requires extra time and reduces efficiency.

Overpowering ensures hardware does not restrict capabilities but means paying for unused resources.

Machine Learning Pipeline Automation

Numerous tools can automate machine learning workflows according to scripts and event triggers. Pipelines are used to process data, train models, perform monitoring tasks, and deploy results.

These tools enable teams to focus on higher-level tasks while helping to increase efficiency and ensure the standardization of processes.

Automation in machine learning workflows includes several critical components:

- Hyperparameter optimization can be automated through the application of search algorithms such as random search, grid search, or Bayesian optimization

- Model selection automation determines the right candidate model for implementations

- Feature selection automation refines variables used in models through algorithmic methods
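
To make the hyperparameter search idea concrete, here is a minimal sketch of grid search and random search over a small search space. The `validation_score` function is a hypothetical stand-in for "train a model and measure it on validation data", and the parameter names and values are assumptions chosen only for illustration.

```python
import itertools
import random

def validation_score(learning_rate, depth):
    """Hypothetical stand-in for training a model and scoring it.
    By construction it peaks at learning_rate=0.1, depth=4."""
    return 1.0 - abs(learning_rate - 0.1) - 0.05 * abs(depth - 4)

search_space = {
    "learning_rate": [0.01, 0.1, 0.5],
    "depth": [2, 4, 8],
}

def grid_search(space, score_fn):
    """Evaluate every combination and return (best_params, best_score)."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(space[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def random_search(space, score_fn, n_trials, seed=0):
    """Sample n_trials random combinations instead of walking the full grid."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid_best, grid_score = grid_search(search_space, validation_score)
random_best, random_score = random_search(search_space, validation_score, n_trials=5)
print(grid_best, grid_score)
print(random_best, random_score)
```

Grid search is exhaustive, so its cost grows multiplicatively with every added parameter. Random search trades that guarantee for a fixed trial budget, which is often the more practical choice when each trial is a full training run.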

Pipeline automation also extends to data preprocessing, which involves encoding, cleaning, and verifying data before use. Automated tasks can perform basic data preprocessing before performing hyperparameter optimization and model optimization steps.

Advanced preprocessing can also be performed, including automation of feature selection, target encoding, data compression, text content processing, and feature creation or generation.

Testing and Validation Frameworks

Testing machine learning models requires integrating tooling between training and deployment phases.

This tooling runs models against manually labeled datasets to ensure results are as expected.

Thorough testing requires:

- Collection and analysis of both qualitative and quantitative data

- Multiple training runs in identical environments

- The ability to identify where errors occurred

To set up machine learning testing, teams need to add data analysis, monitoring, and visualization tools to infrastructure. Teams also need to set up automated creation and management of environments.

During setup, integration tests should be performed to ensure components are not causing errors in other components or negatively affecting test results.

Model evaluation assesses the performance of models on unseen data, which is critical to ensure models perform well in real-world scenarios. Evaluation metrics such as accuracy, precision, recall, and fairness measures gauge how well the model meets project objectives.

These metrics provide a quantitative basis for comparing different models and selecting the best one for deployment.
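
For reference, accuracy, precision, and recall for a binary classifier can be computed directly from predictions on held-out labels. The sketch below uses made-up labels, and `classification_metrics` is a hypothetical helper rather than a library function.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything predicted positive, how much really was positive
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of everything really positive, how much the model found
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Hypothetical held-out labels and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Which metric matters most depends on the cost of each error type: precision when false alarms are expensive, recall when missed positives are.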

Deployment and Inference Infrastructure

Deployment represents the final step teams need to account for in their architecture. This step packages the model and makes it available to development teams for integration into services or applications.

If offering machine learning as a service (MLaaS), deployment may also mean deploying the model to a production environment where it can take data from users and return results.

MLaaS deployment typically involves containerizing models. When models are hosted in containers, teams can deliver them as scalable, distributed services regardless of environment.

Container orchestration platforms manage the deployment, scaling, and operation of these containerized ML models.

During the deployment stage, the focus should be on balancing performance and latency requirements against the hardware available in the target location.

Models that need very fast responses or very low latency should prioritize edge or local infrastructure and be optimized to run on low-powered local hardware. Models that can tolerate some latency can leverage cloud infrastructure, which can scale up if needed to run heavier inference workflows.

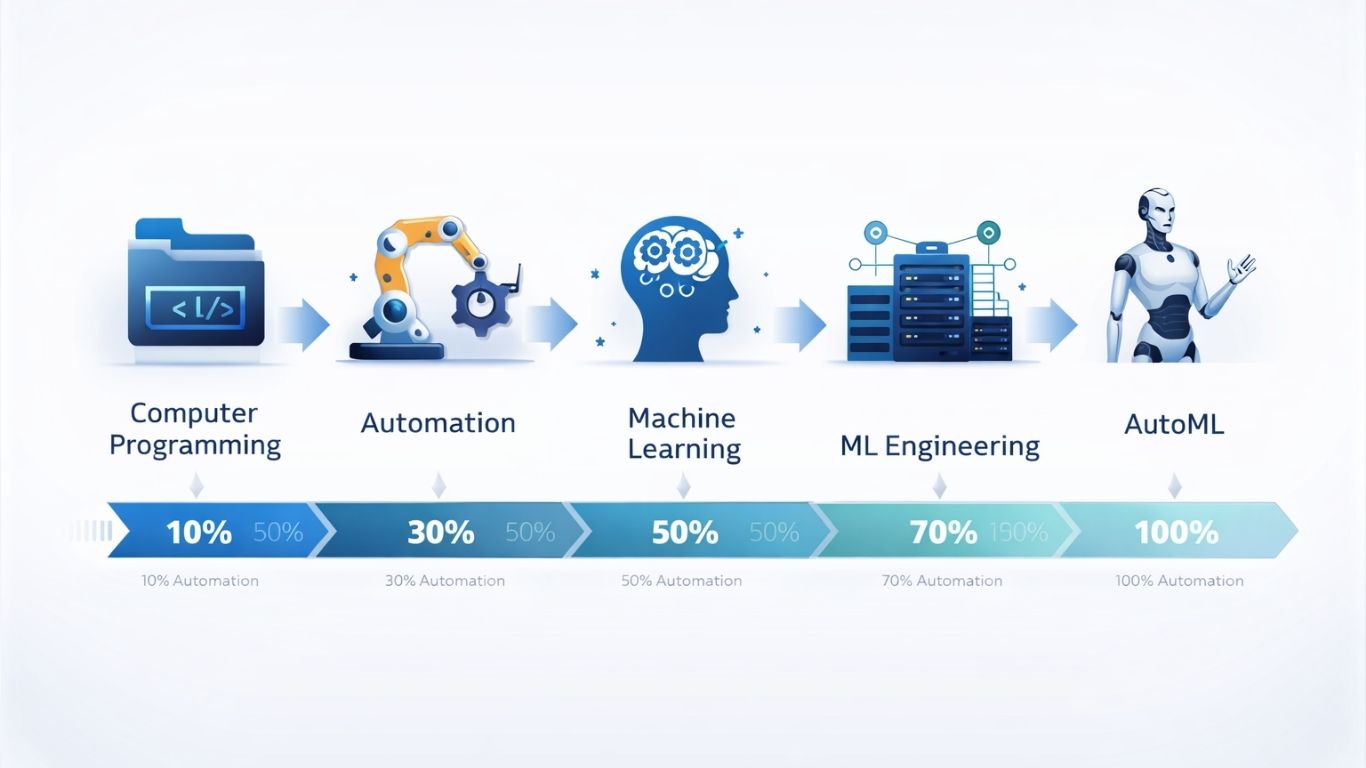

Automation Strategies

Automated machine learning (AutoML) is a process that automatically performs many of the time-consuming and repetitive tasks involved in model development. It was developed to increase the productivity of data scientists, analysts, and developers, and to make machine learning more accessible to those with less expertise in data.

Machine learning automation is important because it enables organizations to significantly reduce the knowledge-based resources required to train and implement machine learning models.

It can be effectively used by organizations with less domain knowledge, fewer computer science skills, and less mathematical expertise. This reduces pressure on individual data scientists as well as on organizations to find and retain those scientists.

AutoML can also help organizations improve model accuracy and insights while reducing opportunities for error or bias. This is because machine learning automation is developed with best practices determined by expert data scientists.

AutoML models do not rely on individual developers or organizations to implement best practices themselves.

Continuous Integration and Delivery for ML

In MLOps, continuous integration (CI) covers the creating and verifying aspects of the model development process. The goal of CI is to create the code and verify the quality of both the code and the model before deployment.

This includes testing on a range of sample data sets to ensure the model performs as expected and meets quality standards.

CI might involve:

- Refactoring exploratory code in Jupyter notebooks into Python or R scripts

- Validating new input data for missing or error values

- Performing unit testing and integration testing in the end to end pipeline

Automation tools like Azure Pipelines in Azure DevOps or GitHub Actions can perform linting and unit testing.
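
The input validation step in CI can be as simple as a function that scans a batch for missing or out-of-range values and fails the build when it finds any. The sketch below is one hedged way to do that. The schema, the column names, and the `validate_rows` helper are hypothetical.

```python
import math

def validate_rows(rows, schema):
    """Return a list of (row_index, column, problem) tuples.
    An empty list means the batch passed validation."""
    problems = []
    for i, row in enumerate(rows):
        for column, (lo, hi) in schema.items():
            value = row.get(column)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                problems.append((i, column, "missing"))
            elif not lo <= value <= hi:
                problems.append((i, column, "out of range"))
    return problems

# Hypothetical schema: allowed (min, max) per numeric column
schema = {"age": (0, 120), "income": (0, 10_000_000)}

rows = [
    {"age": 34, "income": 52_000},
    {"age": None, "income": 48_000},       # missing age
    {"age": 29, "income": -5},             # negative income
    {"age": 41.0, "income": float("nan")}, # NaN counts as missing
]

problems = validate_rows(rows, schema)
for row_index, column, problem in problems:
    print(f"row {row_index}: {column} is {problem}")
```

In a CI job, a unit test that asserts `validate_rows(batch, schema) == []` would then stop the pipeline before a bad batch reaches training.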

Continuous delivery involves the steps needed to safely deploy a model in production. The first step is to package and deploy the model in pre-production environments such as dev and test environments.

Portability of the parameters, hyperparameters, and other model artifacts is an important aspect to maintain as code is promoted through these environments. This portability is especially important when it comes to large language models and stable diffusion models.

Once the model passes unit tests and quality assurance tests, it can be approved for deployment in the production environment.

Continuous deployment further extends this by automatically deploying approved models without manual intervention, creating a fully automated pipeline from code commit to production deployment.

Model Retraining and Continuous Training

One of the unique aspects of MLOps compared to traditional DevOps is the need for continuous training. Unlike traditional software that remains static until deliberately updated, machine learning models can degrade over time as the statistical properties of real-world data change.

Automated model retraining sets up alerts and workflows that take corrective action when a model drifts because inference data has diverged from training data.

This involves monitoring model performance metrics, detecting when performance falls below acceptable thresholds, and automatically triggering retraining workflows with updated data.
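
A threshold-based trigger of this kind might look like the sketch below, which watches a rolling average of a performance metric and signals retraining once it drops below a limit. The `RetrainingTrigger` class, the 0.85 threshold, and the three-observation window are assumptions for illustration, and in practice the signal would start a pipeline rather than just return a boolean.

```python
from collections import deque

class RetrainingTrigger:
    """Signal retraining when the rolling mean of a metric falls below a threshold."""

    def __init__(self, threshold, window):
        self.threshold = threshold
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def observe(self, score):
        """Record one evaluation score and return True if retraining should start."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        rolling_mean = sum(self.scores) / len(self.scores)
        # Wait for a full window so a single bad day does not trigger retraining
        return window_full and rolling_mean < self.threshold

# Hypothetical daily accuracy measured on freshly labeled production data
trigger = RetrainingTrigger(threshold=0.85, window=3)
daily_accuracy = [0.91, 0.90, 0.88, 0.84, 0.80, 0.79]

decisions = [trigger.observe(score) for score in daily_accuracy]
print(decisions)
```

Averaging over a window trades reaction speed for stability: a longer window avoids retraining on noise but delays the response to a real decline.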

The automation of retraining workflows must balance several considerations. Teams need to determine the frequency of model refresh, manage inference request times, and handle other production-specific requirements in testing and QA.

Using CI/CD tools such as repos and orchestrators borrowed from DevOps principles helps automate the pre-production pipeline.

Monitoring and Observability

Continuous monitoring of model performance, data quality, and infrastructure health enables proactive identification and resolution of issues before they impact production systems.

Machine learning monitoring and visualization are used to see how smoothly workflows are moving, gauge how accurate model training is, and derive insights from model results.

Performance Monitoring and Drift Detection

Model monitoring continuously scrutinizes the model’s performance in the production environment. This helps identify emerging issues such as accuracy drift, bias, and concerns around fairness that could compromise the model’s utility or ethical standing.

Monitoring is about overseeing current performance and anticipating potential problems before they escalate.

Setting up robust alerting and notification systems is essential to complement monitoring efforts. These systems serve as an early warning mechanism, flagging any signs of performance degradation or emerging issues with deployed models.

By receiving timely alerts, data scientists and engineers can quickly investigate and address concerns, minimizing their impact on model performance and end-user experience.

Data drift occurs when the statistical properties of the input data change over time. Concept drift happens when the relationship between input features and target variables changes.

Both forms of drift can cause model performance to degrade even when the underlying code remains unchanged. Automated drift detection compares the distribution of production data against training data distributions to identify when retraining becomes necessary.
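
One common way to compare a production distribution against a training distribution is the Population Stability Index (PSI). The text above does not name a specific method, so PSI is used here only as an example. The sketch bins a single numeric feature using the training range and sums the weighted log ratios of the bin shares. The bin count, the `1e-6` floor for empty bins, and the 0.2 alert threshold are assumptions that should be tuned per feature.

```python
import math

def population_stability_index(expected, actual, bins=5):
    """PSI between a training sample (expected) and a production sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width) if width else 0
            # Clamp so values outside the training range land in the edge bins
            counts[min(max(index, 0), bins - 1)] += 1
        # Small floor avoids log(0) and division by zero for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: production either matches training or is shifted
training = list(range(100))
production_same = list(range(100))
production_shifted = [v + 40 for v in training]

psi_same = population_stability_index(training, production_same)
psi_shifted = population_stability_index(training, production_shifted)

DRIFT_THRESHOLD = 0.2  # assumed alert level, not a universal constant
print(psi_same, psi_same > DRIFT_THRESHOLD)
print(psi_shifted, psi_shifted > DRIFT_THRESHOLD)
```

This only detects data drift on one input feature. Concept drift, where the input distribution stays put but the relationship to the target changes, generally needs labeled production data and a performance-based check.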

Infrastructure and Resource Monitoring

Beyond model performance, teams must monitor the underlying infrastructure supporting ML workloads. This includes tracking compute resource utilization, memory consumption, storage capacity, and network performance.

For GPU-accelerated workloads, monitoring GPU utilization, memory, and temperature becomes critical.

Resource monitoring enables teams to optimize costs by identifying underutilized resources and scaling infrastructure appropriately. It also helps prevent service disruptions by detecting capacity constraints before they impact model serving latency or throughput.

Explainability and Model Governance

As machine learning models increasingly drive critical business decisions, the ability to explain model predictions becomes essential.

Model explainability tools help teams understand which features most strongly influence predictions, enabling better debugging, validation, and compliance with regulatory requirements.

Model governance encompasses policies and guidelines that govern the responsible development, deployment, and use of machine learning models.

Such governance frameworks are critical for ensuring models are developed and used ethically, with due consideration given to fairness, privacy, and regulatory compliance. Establishing a robust ML governance strategy is essential for mitigating risks, safeguarding against misuse of technology, and ensuring machine learning initiatives align with broader ethical and legal standards.

Collaborative Workflows for MLOps

MLOps emphasizes breaking down silos between data scientists, software engineers, and IT operations. This fosters communication and ensures everyone involved understands the entire process and contributes effectively.

Collaboration tools enhance communication and knowledge sharing among diverse stakeholders in the MLOps pipeline.

Version Control for Data, Models, and Code

Using systems like Git enables teams to programmatically maintain a history of changes, revert to previous versions, and manage branches for different experiments.

However, machine learning systems require extending traditional version control to encompass data and models alongside code.

Data versioning tracks changes to datasets over time, enabling reproducibility of experiments and traceability of results. When a model is trained, the exact version of the data used for training must be recorded to enable reproduction of results or debugging of issues.

Model versioning manages different iterations of trained models, including the hyperparameters, training data versions, and code versions used to create each model.

This comprehensive versioning enables teams to compare model performance across iterations, roll back to previous versions when necessary, and maintain complete lineage from raw data to deployed models.
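
As a minimal sketch of this lineage idea, the example below derives a data version from a content hash of the dataset and then hashes the combined record of data version, code version, and hyperparameters to get a model version. The `model_record` helper, the field names, and the placeholder commit id are hypothetical. Dedicated data and model versioning tools cover the same ground with far more features.

```python
import hashlib
import json

def fingerprint(payload: bytes) -> str:
    """Content hash: identical bytes always produce the same version id."""
    return hashlib.sha256(payload).hexdigest()[:12]

def model_record(data_bytes, code_version, hyperparameters):
    """Bundle everything a trained model depends on into one lineage record."""
    record = {
        "data_version": fingerprint(data_bytes),
        "code_version": code_version,
        "hyperparameters": hyperparameters,
    }
    # Hash the record itself, so any change to data, code, or settings
    # yields a different model version
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["model_version"] = fingerprint(canonical)
    return record

# Hypothetical training runs: same code and settings, one extra data row in v2
v1 = model_record(b"id,label\n1,0\n2,1\n", "abc1234", {"depth": 4})
v2 = model_record(b"id,label\n1,0\n2,1\n3,1\n", "abc1234", {"depth": 4})

print(v1["data_version"], v1["model_version"])
print(v2["data_version"], v2["model_version"])
```

Storing such a record next to each trained artifact is what makes it possible to answer "which data and code produced the model that is in production right now".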

Agile Planning for Machine Learning Projects

Agile planning involves isolating work into sprints, which are short time frames for completing specific tasks. This approach allows teams to adapt to changes quickly and deliver incremental improvements to models.

Model training can be an ongoing process, and Agile planning can help scope projects and enable better team alignment.

Tools like Azure Boards in Azure DevOps or GitHub Issues help teams manage Agile planning for ML projects. These platforms enable tracking of experiments, model iterations, and deployment milestones while maintaining visibility across cross-functional teams.

Infrastructure as Code for ML Environments

Infrastructure as code (IaC) enables teams to reproduce and automate the infrastructure needed to train, deploy, and serve models. In ML systems, IaC helps teams define in code the Azure, AWS, or Google Cloud resources needed for specific job types, with that code maintained in a repository.

This allows version control of infrastructure and enables changes for resource optimization, cost effectiveness, and performance tuning as needed.

IaC ensures development, staging, and production environments remain consistent, reducing the “works on my machine” problems that plague machine learning projects.

Production Challenges and Solutions: Real World Implementation Considerations

Productionizing machine learning is difficult. The machine learning lifecycle consists of many complex components, including data ingestion, data preparation, model training, model tuning, model deployment, model monitoring, and explainability.

It also requires collaboration and handoffs across teams, from data engineering to data science to ML engineering.

Managing Compute Resources at Scale

The computational demands of machine learning workloads vary dramatically across different stages of the lifecycle.

Training large deep learning models may require clusters of GPUs running for days or weeks, while inference workloads may need to process thousands of requests per second with millisecond latency requirements.

MLOps practices enable teams to dynamically allocate and manage compute resources across these varying demands.

Resource allocation automation ensures:

- Efficient use of expensive GPU resources

- Automatic scaling to handle variable workloads

- Cost optimization through spot instances or preemptible compute

Network infrastructure is vital to ensuring efficient machine learning operations. All tools need to communicate smoothly and reliably. Teams also need to deliver and ingest data to and from outside sources without bottlenecks.

To ensure networking resources meet requirements, teams should consider the overall environment they are working in and carefully gauge how well networking capabilities match processing and storage capabilities.

Security and Compliance in ML Pipelines

Training and applying models requires extensive amounts of data, which is often sensitive or valuable. Big data is a big lure for threat actors who want to hold data for ransom or steal it in breaches for resale on black markets.

Additionally, depending on the purpose of the model, illegitimate manipulation of data could lead to serious damage.

For example, if models used for object detection in autonomous vehicles are manipulated to intentionally cause crashes, the consequences could be catastrophic.

When creating machine learning infrastructure, teams should take care to build in:

- Monitoring capabilities for detecting anomalies

- Encryption for data at rest and in transit

- Access controls to properly secure data

Teams should also verify which compliance standards apply to their data. Depending on the results, teams may need to limit the physical location of data storage or processing, or remove sensitive information before use.

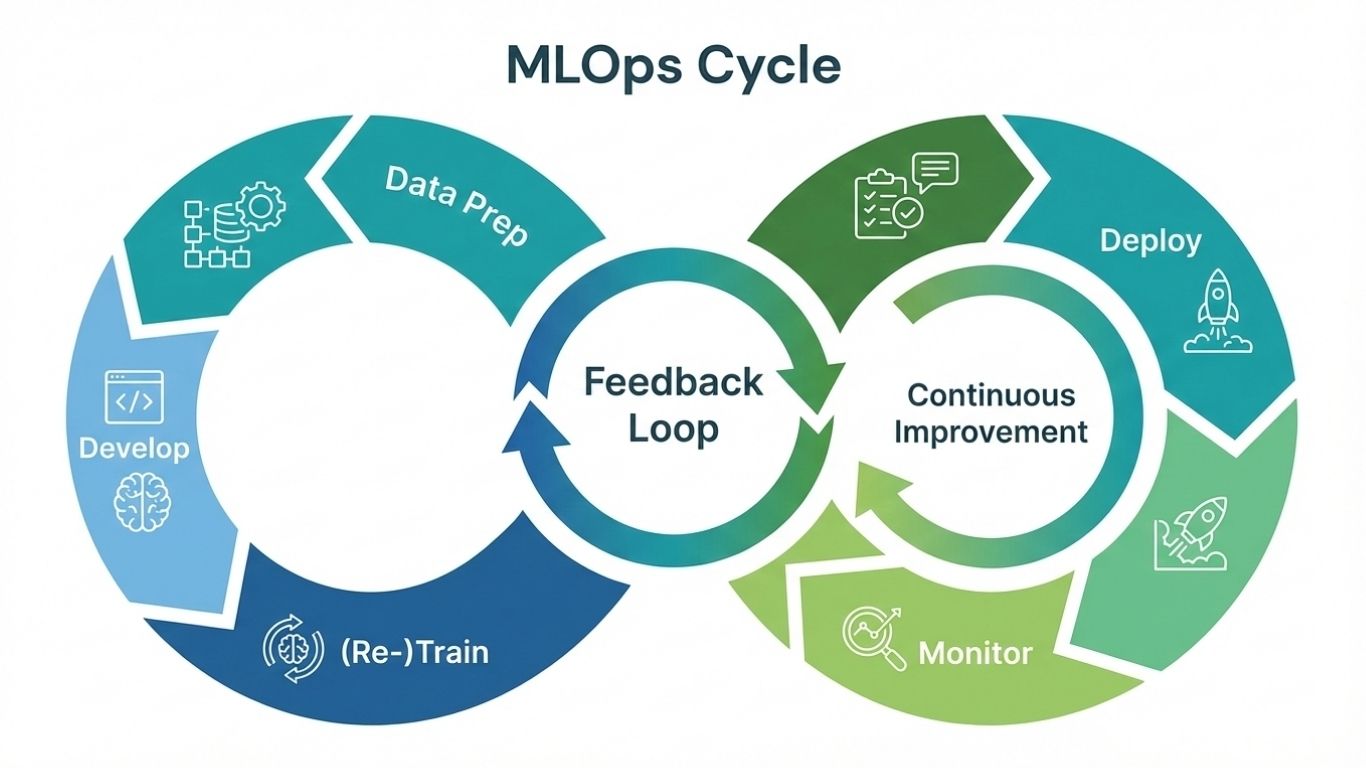

Closing the Loop Between Development and Operations

Establishing a critical feedback loop enables the data science team to continue improving model inference while ensuring updated models do not have a negative impact on application performance.

This requires seamless integration between monitoring systems, experiment tracking platforms, and deployment pipelines.

When monitoring systems detect performance degradation, they should automatically trigger notifications to data science teams and potentially initiate retraining workflows.

The results of retraining experiments should be systematically evaluated against current production models before deployment, with rollback capabilities in place if new models underperform.
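
The evaluate-then-promote step with a rollback path can be sketched as a small registry that only replaces the production model when a candidate beats it by a margin. The `ModelRegistry` class, the model names, the scores, and the `min_gain` margin of 0.01 are all hypothetical. A real registry would also persist artifacts and metadata.

```python
class ModelRegistry:
    """Track which model is in production and allow rollback to the previous one."""

    def __init__(self, initial_model):
        self.history = [initial_model]

    @property
    def production(self):
        return self.history[-1]

    def promote_if_better(self, candidate, min_gain=0.01):
        """Promote the candidate only if it beats production by at least min_gain."""
        if candidate["score"] - self.production["score"] >= min_gain:
            self.history.append(candidate)
            return True
        return False

    def rollback(self):
        """Revert to the previously deployed model, if there is one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.production

# Hypothetical scores, all measured on the same held-out evaluation set
registry = ModelRegistry({"name": "v1", "score": 0.88})

promoted_small = registry.promote_if_better({"name": "v2", "score": 0.885})
promoted_large = registry.promote_if_better({"name": "v3", "score": 0.91})
after_promotion = registry.production["name"]

# Suppose v3 underperforms on live traffic, so the team rolls back
after_rollback = registry.rollback()["name"]
print(promoted_small, promoted_large, after_promotion, after_rollback)
```

Requiring a minimum gain keeps the pipeline from churning production on differences that are within evaluation noise.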

Performance issues and new production data must be reflected back to the data science team so they can tune and improve the model. The updated model is then thoroughly tested by the operations team before being put into production.

The Path Forward: Implementing MLOps in Your Organization

Organizations at different maturity levels require different approaches to MLOps implementation. Understanding MLOps maturity levels helps teams assess their current state and identify areas for improvement.

Level 0: No MLOps represents where most organizations start. Models are deployed manually and managed individually, often by data scientists. This approach is inefficient, prone to errors, and difficult to scale as projects grow.

Level 1: ML Pipeline Automation introduces basic automation through scripts or CI/CD pipelines that handle essential tasks like data preprocessing, model training, and deployment. This brings efficiency and consistency to the process.

Level 2: CI/CD Pipeline Integration seamlessly integrates the ML pipeline with existing CI/CD pipelines, enabling continuous model integration, delivery, and deployment. This makes the process smoother and enables faster iterations.

Level 3: Advanced MLOps incorporates continuous monitoring, automated model retraining, and automated rollback capabilities. Collaboration, version control, and governance become vital aspects of a mature, fully optimized, and robust MLOps environment.

Accelerate Your MLOps Journey

Implementing MLOps at scale requires experienced teams who understand the intricate balance between data science innovation and production reliability.

HBLAB brings over a decade of expertise in building custom digital solutions that bridge this critical gap, helping organizations transform experimental ML models into robust production systems.

Our MLOps and AI capabilities include:

- Deep AI expertise since 2017, reinforced by strategic partnerships with leading research institutions, enabling us to tackle complex machine learning infrastructure challenges

- 700+ IT professionals with proven experience in building scalable ML pipelines, automated deployment systems, and comprehensive monitoring frameworks

- 30% senior-level talent (5+ years of experience) who bring battle-tested MLOps practices from enterprise deployments across diverse industries

- CMMI Level 3 certification ensuring process excellence in managing the full ML lifecycle—from data versioning to continuous training workflows

Whether you need to establish your first MLOps pipeline, scale existing ML infrastructure, or close the feedback loop between your data science and operations teams, HBLAB delivers 30% cost efficiency without compromising on quality or velocity.

Our flexible engagement models give you full control over tools, workflows, and product delivery while accelerating your path to production ML.

People Also Ask

What does MLOps mean?

MLOps stands for Machine Learning Operations. It’s a set of practices that combines machine learning with DevOps principles to automate the building, testing, and deployment of ML models into production environments. MLOps streamlines workflows across data scientists, ML engineers, and IT operations teams.

What is MLOps vs DevOps?

DevOps focuses on automating software development, testing, and deployment. MLOps extends these principles specifically to machine learning by adding data versioning, model training, monitoring for data drift, and continuous retraining. MLOps must handle three interdependent elements—data, models, and code—whereas DevOps primarily manages code.

Does MLOps require coding?

Yes, MLOps typically requires coding skills. You need to write scripts for data pipelines, model training automation, CI/CD workflows, and monitoring systems. Common languages include Python, SQL, and shell scripting. However, the depth depends on your specific role within the MLOps pipeline.

What is an MLOps engineer's salary?

MLOps engineer salaries vary by location and experience. In the United States, entry-level positions start around $90,000–$120,000 annually, mid-level roles range from $130,000–$170,000, and senior positions exceed $180,000. Salaries are higher in tech hubs like San Francisco and New York.

Is MLOps difficult to learn?

MLOps has a moderate learning curve. You need foundational knowledge in machine learning, software engineering, and DevOps practices. If you already have experience in any of these areas, it becomes easier. The main challenge is integrating multiple disciplines rather than mastering any single one.

What are the 4 types of ML?

The four main types of machine learning are supervised learning (training on labeled data), unsupervised learning (finding patterns in unlabeled data), semi-supervised learning (combining labeled and unlabeled data), and reinforcement learning (training through rewards and penalties).

Is Python required for MLOps?

Python is the most widely used language in MLOps and is highly recommended. However, it’s not strictly required. You can work with Java, Scala, or other languages depending on your tech stack. That said, Python’s popularity in data science and ML tooling makes it the practical standard.

Is DevOps a dead-end job?

No. DevOps is a growing field with strong career prospects. The demand for DevOps and MLOps engineers continues to increase as organizations scale cloud infrastructure and automate deployment pipelines. Career paths include senior engineer, architect, or leadership roles.

Is Docker an MLOps tool?

Docker is a containerization platform used in MLOps but not exclusively an MLOps tool. It packages ML models and their dependencies into containers for consistent deployment across environments. Docker is one component within a broader MLOps infrastructure alongside orchestration tools, monitoring systems, and CI/CD pipelines.
