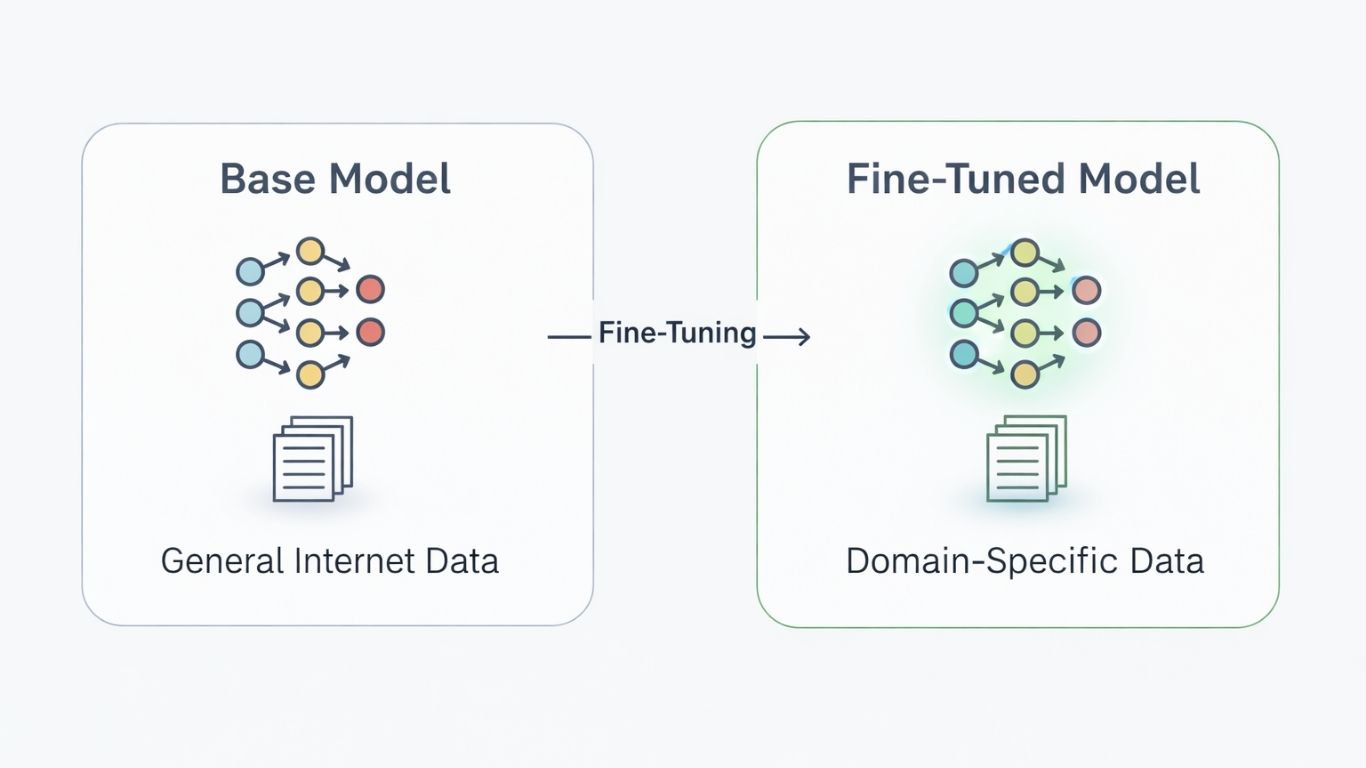

Large language models excel at general tasks but often fall short in specialized business applications. LLM fine-tuning addresses this by adapting pre-trained models to specific domains through targeted training on custom datasets.

It can transform a knowledgeable generalist into a domain expert without the astronomical costs of building from scratch.

What is Fine-Tuning?

Fine-tuning is the process of continuing to train an already pre-trained model, such as GPT-4 or Claude, on specialized datasets containing domain-specific terminology, corporate policies, or industry knowledge. Much as agentic AI systems autonomously execute complex workflows, a fine-tuned model can operate more independently within its specialized domain.

However, fine-tuning isn’t a universal solution.

Microsoft research reveals significant limitations when injecting entirely new factual information into models. Their 2024 EMNLP study compared unsupervised fine-tuning against retrieval-augmented generation (RAG) across anatomy, astronomy, and current events.

RAG consistently won by substantial margins: 87.5% accuracy versus 58.8% for fine-tuning on current events knowledge with Mistral-7B.

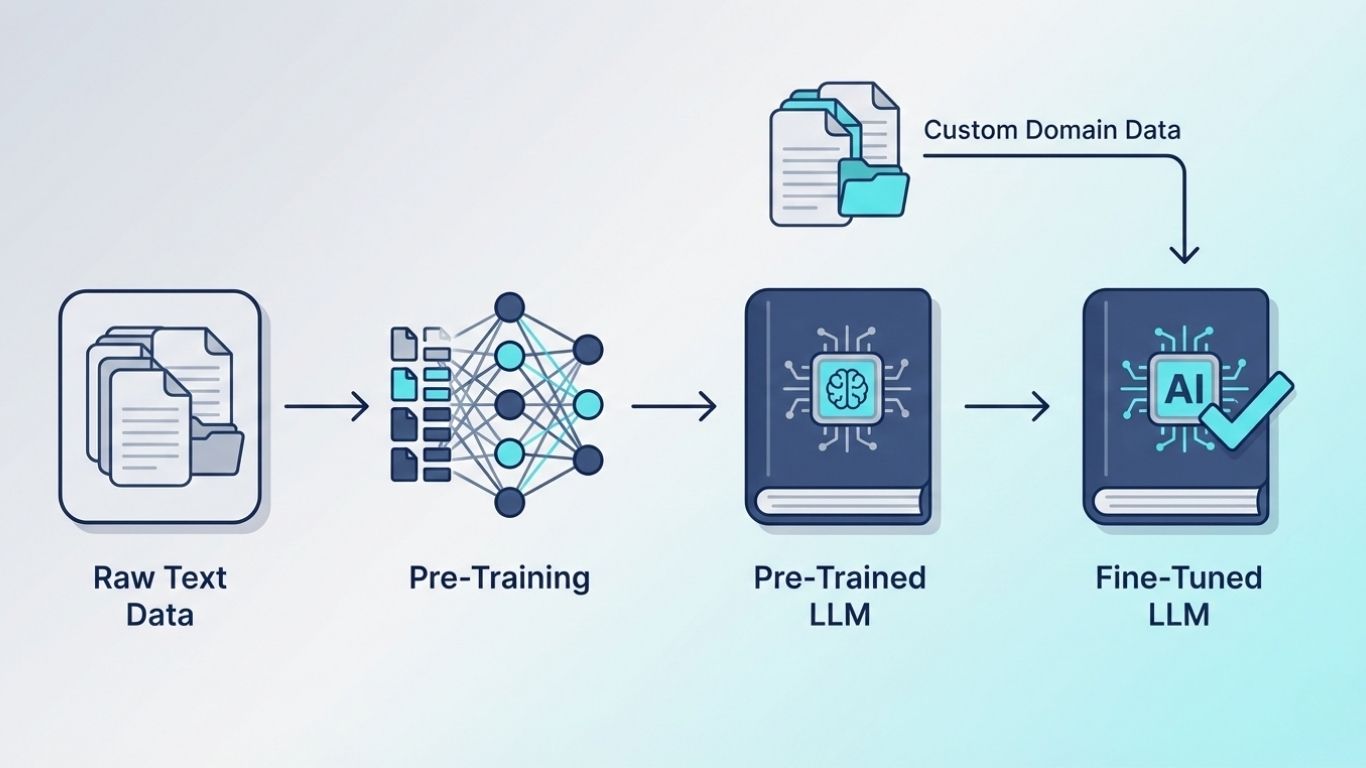

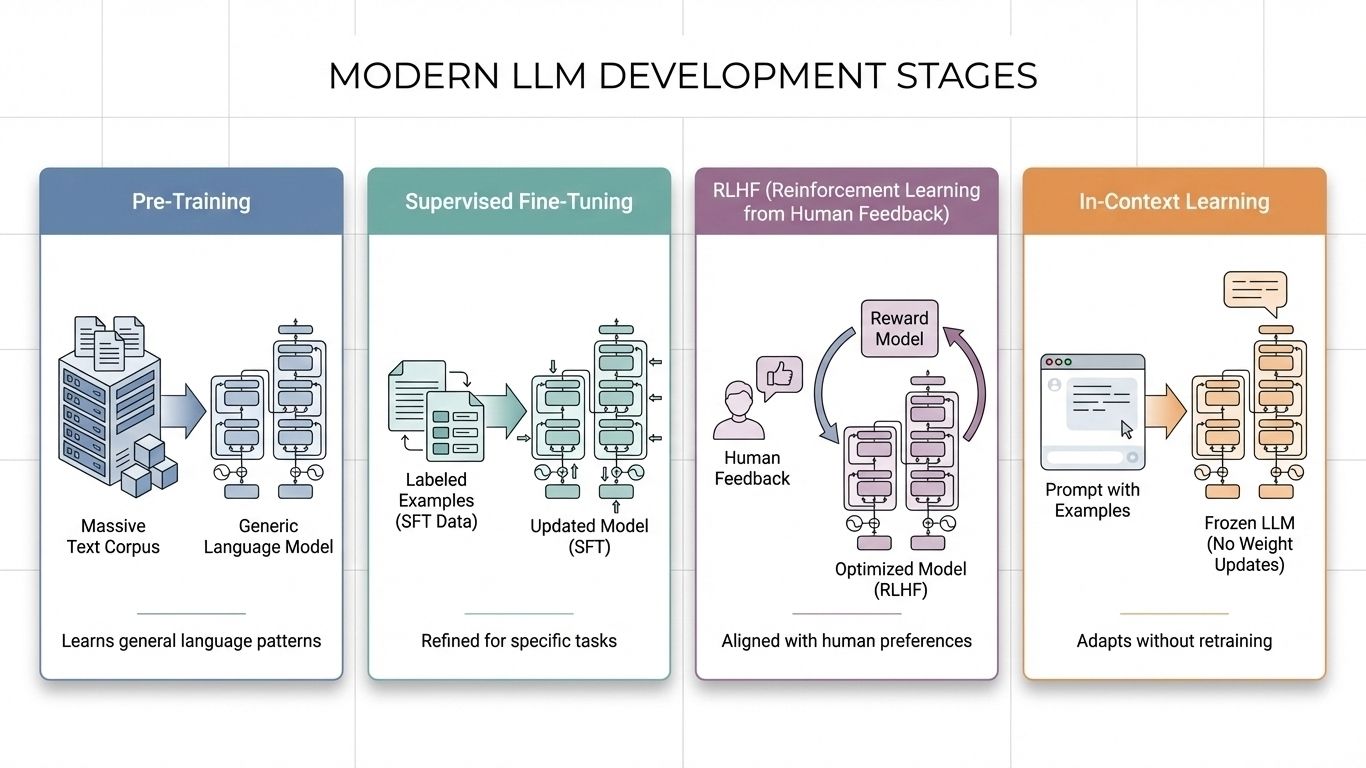

Understanding Fine-Tuning Mechanisms

LLM fine-tuning builds directly on pre-training. Models like GPT-4 or Llama contain billions of parameters learned from massive text collections, establishing general language understanding.

The fine-tuning process continues this learning with a crucial difference: instead of learning from the entire internet, the model trains on carefully selected datasets.

The Training Process

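During pre-training, a model updates its parameters θ to minimize next-token prediction loss: the cross-entropy between its predicted distribution and the token that actually comes next. Because the targets come from the text itself, this is self-supervised learning. In supervised fine-tuning, we keep the same loss but apply it to labeled examples that show exactly the outputs we want.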
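To make the objective concrete, here is a minimal pure-Python sketch of the next-token cross-entropy loss. It assumes the model has already produced one row of logits per position. The `-100` ignore value mirrors the default `ignore_index` of PyTorch's `CrossEntropyLoss`, which Hugging Face Transformers also uses for masked labels. During supervised fine-tuning, prompt positions are often set to this value so that only response tokens contribute to the loss. The function names and toy numbers are illustrative.

```python
import math

IGNORE_INDEX = -100  # label value excluded from the loss (PyTorch/HF convention)


def log_softmax(logits):
    """Numerically stable log-softmax over one row of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_token_loss(logits_per_position, labels):
    """Mean negative log-likelihood of the target tokens.

    logits_per_position[t] scores the token that follows position t, and
    labels[t] is that token's id (or IGNORE_INDEX to skip the position).
    """
    total, count = 0.0, 0
    for logits, target in zip(logits_per_position, labels):
        if target == IGNORE_INDEX:
            continue
        total -= log_softmax(logits)[target]
        count += 1
    return total / count if count else 0.0


# Toy 3-token vocabulary, two positions to predict.
logits = [[2.0, 0.5, 0.1], [0.2, 0.3, 3.0]]
pretrain_loss = next_token_loss(logits, [0, 2])        # every target counts
sft_loss = next_token_loss(logits, [IGNORE_INDEX, 2])  # prompt target masked out
```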

Learning Rate Considerations

LLM fine-tuning requires significantly lower learning rates than pre-training. Pre-training might use a peak rate of around 3×10⁻⁴, while full fine-tuning typically uses rates between 1×10⁻⁶ and 5×10⁻⁵, roughly 6 to 300 times lower. This conservative approach helps prevent catastrophic forgetting, where a model loses existing knowledge while learning new information.

Why Fine-Tune Large Language Models

Domain Knowledge Deficits

General-purpose models trained on broad internet text lack deep expertise in specialized fields. Medical diagnostics, legal analysis, and financial modeling demand precision that generic training cannot provide.

LLM fine-tuning transforms these general models into domain specialists.

The Information Freshness Problem

Every pre-trained model has a knowledge cutoff date. A model trained in January 2025 knows nothing about February 2026 events. Microsoft research shows that LLM fine-tuning struggles with incorporating entirely new factual information—RAG often works better for updating models with fresh facts.

This challenge parallels the need for robust MLOps practices to maintain model performance over time.

Real-World Performance Gains

LLM fine-tuning delivers dramatic improvements when models train on domain-specific terminology.

- Healthcare applications can see measurable gains from fine-tuning on medical terminology.

- Financial institutions use LLM fine-tuning to help models interpret regulatory compliance requirements, which can reduce compliance risk.

- Legal firms leverage fine-tuned models to parse case law in minutes instead of hours.

Economic Efficiency

Training large language models from scratch requires months of time, millions in compute costs, and massive datasets. LLM fine-tuning leverages existing pre-trained knowledge, requiring only domain-specific datasets and days or weeks of training on modest hardware.

Types of Fine-Tuning Approaches

Supervised Fine-Tuning

Supervised LLM fine-tuning remains the most straightforward path to adapt large language models. This fine-tuning approach requires labeled datasets containing input-output pairs demonstrating exactly what you want the model to do. Hugging Face Transformers provides comprehensive tools for implementing supervised fine-tuning workflows.

Instruction Tuning emerged as a powerful variant of LLM fine-tuning. Instead of task-specific examples, you provide natural language task descriptions paired with correct responses. This instruction-based fine-tuning approach teaches models to follow diverse instructions, dramatically improving zero-shot capabilities.

Microsoft’s research uncovered critical limitations: instruction tuning effectively improves model behavior and output quality but doesn’t necessarily teach new factual knowledge.
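The following sketch shows how an instruction-tuning record becomes a training example. The code renders an instruction and response into a single sequence, then masks the prompt tokens so the loss covers only the response. The prompt template is an Alpaca-style layout chosen for illustration. The whitespace "tokenizer" is a hypothetical stand-in; a real pipeline would use the base model's own tokenizer and chat template.

```python
IGNORE_INDEX = -100  # labels with this value are excluded from the loss

# Hypothetical Alpaca-style template; use your base model's own format in practice.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def toy_tokenize(text, vocab):
    """Hypothetical whitespace tokenizer that assigns ids on first sight."""
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]


def build_example(instruction, response, vocab, max_len=64):
    """Return input_ids and labels with the prompt portion masked out."""
    prompt_ids = toy_tokenize(PROMPT_TEMPLATE.format(instruction=instruction), vocab)
    response_ids = toy_tokenize(response, vocab)
    input_ids = (prompt_ids + response_ids)[:max_len]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_len]
    return {"input_ids": input_ids, "labels": labels}


vocab = {}
example = build_example("Summarize the policy in one sentence.",
                        "Employees must encrypt customer data at rest.", vocab)
```

Masking the prompt is a common choice, not a requirement; some setups train on the full sequence instead.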

Unsupervised Fine-Tuning

Continual pre-training treats the process as picking up where initial training left off. The model continues next-token prediction on domain-specific unlabeled datasets using significantly lower learning rates to prevent catastrophic forgetting.
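Continual pre-training usually feeds the model fixed-length chunks of raw domain text. The sketch below concatenates tokenized documents, separated by an end-of-sequence id, and splits the resulting stream into equal blocks, dropping the short remainder. It is similar in spirit to the `group_texts` step in the Hugging Face causal language modeling example scripts. The function name, the `EOS_ID` value, and the block size are assumptions for illustration.

```python
EOS_ID = 0  # hypothetical end-of-sequence token id


def pack_documents(tokenized_docs, block_size):
    """Concatenate documents and cut the stream into block_size chunks.

    Each chunk serves as both input and label: the model learns by
    predicting every next token, so no manual annotation is needed.
    """
    stream = []
    for doc in tokenized_docs:
        stream.extend(doc)
        stream.append(EOS_ID)  # mark the document boundary
    usable = (len(stream) // block_size) * block_size  # drop the ragged tail
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]


blocks = pack_documents([[5, 6, 7], [8, 9], [10, 11, 12, 13]], block_size=4)
```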

Preventing Catastrophic Forgetting:

- Parameter-efficient methods like LoRA freeze most model weights while training small adapter modules

- Elastic Weight Consolidation identifies critical parameters and constrains their updates

- Regularization techniques penalize dramatic weight changes
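Elastic Weight Consolidation adds a quadratic penalty to the task loss. Each parameter's drift from its pre-trained value is weighted by an estimate of how important that parameter was, namely the diagonal of the Fisher information. Below is a minimal sketch over flat parameter lists. The Fisher values and λ (`lam`) are assumed inputs; a real implementation would estimate them from gradients on the original data. If every Fisher value is set to 1, the penalty reduces to plain L2 regularization toward the pre-trained weights, which is the simpler technique in the last bullet.

```python
def ewc_penalty(params, pretrained, fisher, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta*_i)^2."""
    return 0.5 * lam * sum(
        f * (p - p0) ** 2 for p, p0, f in zip(params, pretrained, fisher)
    )


def total_loss(task_loss, params, pretrained, fisher, lam=100.0):
    """Task loss plus the EWC term that discourages moving important weights."""
    return task_loss + ewc_penalty(params, pretrained, fisher, lam)


pretrained = [0.50, -1.20, 0.30]
fisher = [10.0, 0.1, 1.0]            # parameter 0 matters most for old tasks
moved_important = [0.60, -1.20, 0.30]
moved_unimportant = [0.50, -1.10, 0.30]
```

Moving the important parameter by 0.1 costs far more than moving the unimportant one by the same amount, which is how EWC steers updates away from knowledge the model already relies on.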

Advanced Methods: RLHF and Beyond

Reinforcement learning from human feedback (RLHF) represents the cutting edge:

- Collect human evaluations of model responses

- Train a reward model predicting human preferences

- Optimize the language model using reinforcement learning

Direct Preference Optimization (DPO) simplifies the RLHF pipeline by directly optimizing on human preference data, achieving comparable results with reduced complexity.
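The two preference objectives can be compared side by side. The sketch below shows the pairwise (Bradley-Terry) loss commonly used to train an RLHF reward model, alongside the DPO loss. DPO applies the same logistic form to log-probability ratios between the policy and a frozen reference model, so no separate reward model is needed. Inputs are assumed to be summed log-probabilities of complete responses. The value β = 0.1 is illustrative, not a recommendation from this article.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def reward_model_loss(r_chosen, r_rejected):
    """Pairwise loss: push the reward of the preferred response above the other."""
    return -log_sigmoid(r_chosen - r_rejected)


def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO: the policy's log-ratio against the reference acts as an implicit reward."""
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)
```

When the policy has not moved away from the reference, the margin is zero and the loss equals log 2. The loss falls as the policy raises the likelihood of the chosen response relative to the rejected one.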

How to Fine-Tune an LLM


Step 1: Choose a Pre-Trained Model

Select a foundation model that aligns with your task requirements. Consider model size, architecture, and domain relevance. For small-scale experiments, GPT-2 (generation) or BERT (classification) are common starting points. For specialized domains, explore models on Hugging Face that may have been pre-trained on similar data.

Key considerations:

- Model architecture suited to your task (encoder-only for classification, decoder-only for generation)

- Computational resources available for fine-tuning

- License restrictions for commercial use

- Base model performance on similar tasks

Step 2: Understand Your Base Model

Before beginning LLM fine-tuning, thoroughly understand your chosen model’s architecture, strengths, and limitations. Review the model card and documentation to identify:

- Input/output specifications and token limits

- Known biases or failure modes

- Recommended use cases and constraints

- Pre-training data composition

This understanding prevents surprises during fine-tuning and helps set realistic expectations for model performance.

Step 3: Define Your Fine-Tuning Strategy

Decide between full fine-tuning and parameter-efficient methods based on your resources and requirements. Full LLM fine-tuning updates all model weights, requiring substantial compute but offering maximum flexibility. Parameter-efficient approaches like LoRA update only a small subset of parameters, which substantially reduces the memory needed for gradients and optimizer state.

Strategy selection factors:

- Available GPU memory and compute budget

- Dataset size and quality

- Required model customization depth

- Deployment constraints

Step 4: Prepare Your Training Dataset

High-quality data determines LLM fine-tuning success. Collect domain-specific examples relevant to your target task:

- For chatbots: conversation pairs demonstrating desired interactions

- For classification: labeled text samples with category annotations

- For summarization: document-summary pairs

- For code generation: code examples with natural language descriptions

Dataset preparation steps:

- Collect sufficient examples (typically 500-10,000+ depending on task complexity)

- Clean and validate data for accuracy

- Format data according to model requirements (JSON, CSV, or model-specific formats)

- Split data into training (80%), validation (10%), and test (10%) sets

- Balance class distributions to prevent bias
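The formatting and splitting steps might look like this in plain Python. The record fields (`prompt`, `completion`) and the JSONL layout are assumptions, so check the format your training framework or provider expects. A fixed random seed keeps the split reproducible across runs.

```python
import json
import random


def split_dataset(records, train=0.8, val=0.1, seed=42):
    """Shuffle once with a fixed seed, then slice into train/val/test."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])  # remainder (~10%) becomes the test set


def to_jsonl(records):
    """One JSON object per line, a common format for fine-tuning data."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


records = [{"prompt": f"Question {i}?", "completion": f"Answer {i}."} for i in range(1000)]
train_set, val_set, test_set = split_dataset(records)
train_jsonl = to_jsonl(train_set)  # write this string to e.g. train.jsonl
```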

Step 5: Initialize Model Parameters and Configure Training

Load your pre-trained model and configure training parameters. Initialize all parameters from the pre-trained weights. For parameter-efficient methods, freeze base model weights and initialize only the trainable components.

Critical hyperparameters:

- Learning rate: 1×10⁻⁶ to 5×10⁻⁵ for fine-tuning (much lower than pre-training)

- Batch size: Balance between memory constraints and training stability

- Training epochs: Typically 3-10 epochs; monitor validation loss to prevent overfitting

- Warmup steps: Gradually increase learning rate at training start

- Weight decay: Regularization to prevent overfitting (typically 0.01)
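To make the warmup bullet concrete, here is a sketch of a linear warmup followed by linear decay. It has the same shape as the `get_linear_schedule_with_warmup` schedule in Hugging Face Transformers. The peak rate of 2e-5 and the step counts are illustrative values inside the range listed above.

```python
def lr_at_step(step, peak_lr=2e-5, warmup_steps=100, total_steps=1000):
    """Linear warmup from 0 to peak_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


schedule = [lr_at_step(s) for s in range(0, 1001, 250)]
```

Starting from a near-zero rate keeps the first updates small while optimizer statistics are still unreliable. This complements the low peak rate in limiting how far the model drifts from its pre-trained weights.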

Step 6: Execute the Fine-Tuning Process

Begin training your model using frameworks like Hugging Face Transformers or PyTorch. The LLM fine-tuning process iteratively updates model weights based on the difference between predictions and actual labels.

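```python
from transformers import Trainer, TrainingArguments

# Assumes `model`, `train_dataset`, `eval_dataset`, and `compute_metrics`
# were created in the previous steps.
training_args = TrainingArguments(
    output_dir="./llm-fine-tuned",
    learning_rate=2e-5,              # within the 1e-6 to 5e-5 fine-tuning range
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,
    num_train_epochs=3,
    weight_decay=0.01,
    eval_strategy="epoch",           # named `evaluation_strategy` in transformers < 4.41
    save_strategy="epoch",           # must match eval_strategy for load_best_model_at_end
    load_best_model_at_end=True,     # restore the checkpoint with the best validation metric
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    compute_metrics=compute_metrics,
)

trainer.train()
```

Monitor during training: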

- Training and validation loss curves

- Gradient norms to detect instability

- Memory usage and training speed

- Early signs of overfitting (diverging training/validation metrics)
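One simple way to act on the last bullet is patience-based early stopping: stop once validation loss has not improved for a set number of evaluations. The sketch below operates on a list of recorded validation losses. The patience value and the `min_delta` threshold are assumptions. Transformers provides a comparable `EarlyStoppingCallback` (`early_stopping_patience`), which expects `load_best_model_at_end=True`.

```python
def early_stop_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the index at which training should stop, or None.

    Stops once `patience` consecutive evaluations fail to beat the best
    loss seen so far by more than min_delta.
    """
    best = float("inf")
    bad_evals = 0
    for i, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, bad_evals = loss, 0
        else:
            bad_evals += 1
            if bad_evals >= patience:
                return i
    return None


# Validation loss falls, then rises (typically while training loss keeps dropping).
history = [1.90, 1.42, 1.31, 1.35, 1.44, 1.58]
```

Here training stops at index 4, and the checkpoint worth keeping is the one at index 2, where validation loss was lowest.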

Step 7: Evaluate and Iterate

After LLM fine-tuning completes, comprehensively evaluate model performance on the held-out test set. Use task-specific metrics:

- Classification: Accuracy, precision, recall, F1 score

- Generation: BLEU, ROUGE, perplexity

- Question answering: Exact match, F1 score

- General capability: Retention on standard benchmarks (MMLU, etc.)
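For question answering, exact match and token-level F1 can be computed as shown below. The normalization follows the approach of the SQuAD evaluation script: lowercase, strip punctuation and the articles a/an/the, and collapse whitespace. The function names here are illustrative, and this is a simplified re-implementation, not the official script.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall after normalization."""
    pred, ref = normalize(prediction).split(), normalize(reference).split()
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(pred), common / len(ref)
    return 2 * precision * recall / (precision + recall)
```

Token F1 gives partial credit (for example, "Paris, France" against "Paris"), which makes it more forgiving than exact match for verbose generative models.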

Iteration steps:

- Analyze failure cases to identify patterns

- Adjust hyperparameters based on validation performance

- Augment training data to address weaknesses

- Experiment with different fine-tuning approaches if needed

- Validate that original capabilities are preserved

This iterative refinement process continues until the fine-tuned model meets your performance requirements.

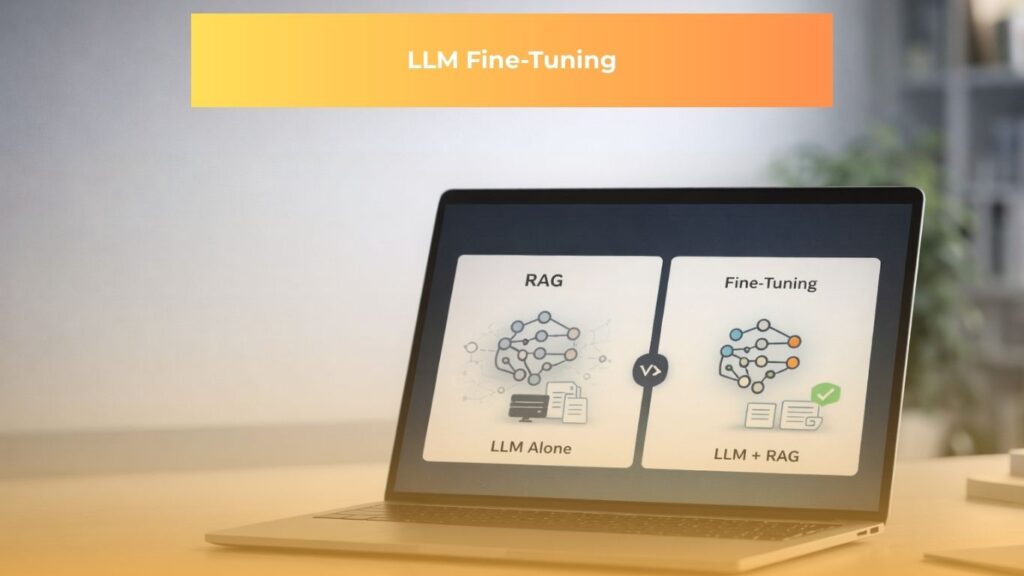

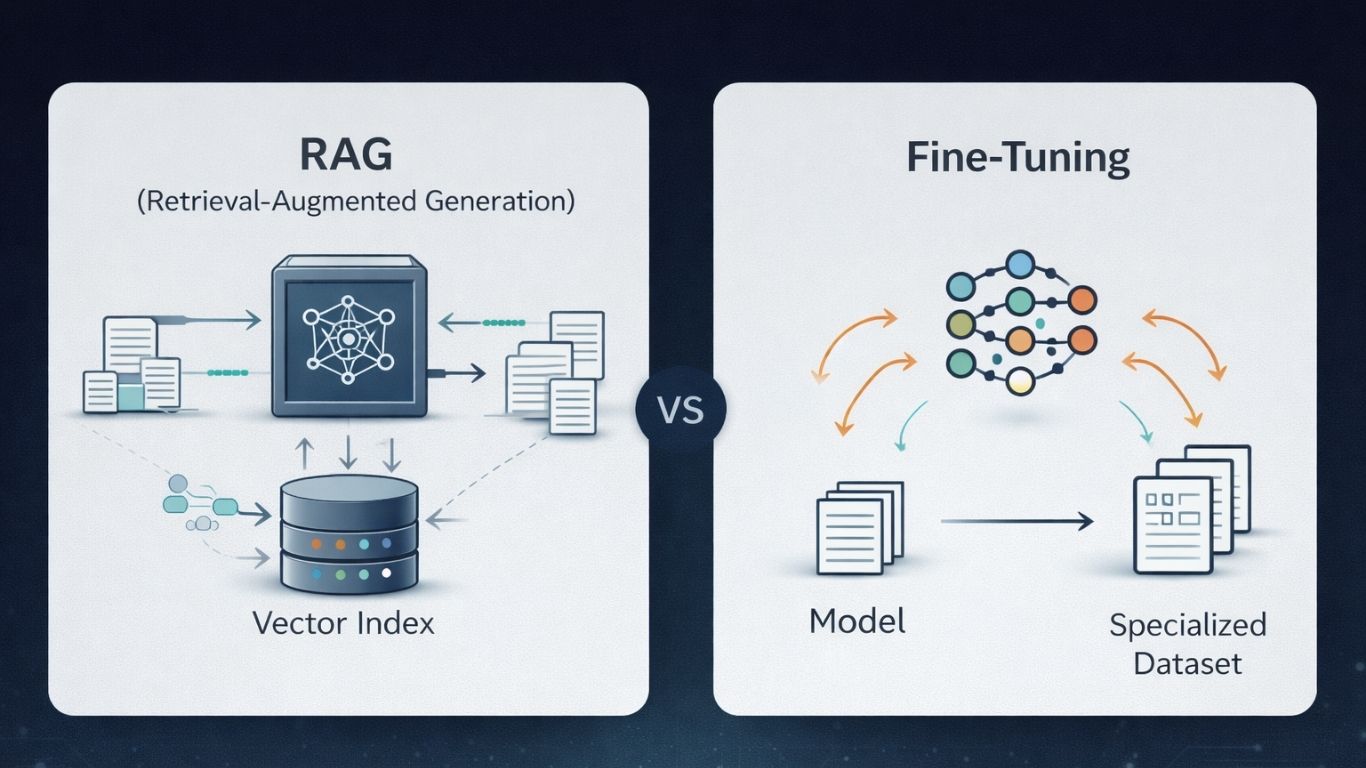

RAG vs Fine-Tuning: Critical Differences

Fundamentally Different Architectures

Fine-tuning modifies the model itself by continuing training on specialized datasets, embedding knowledge into model weights.

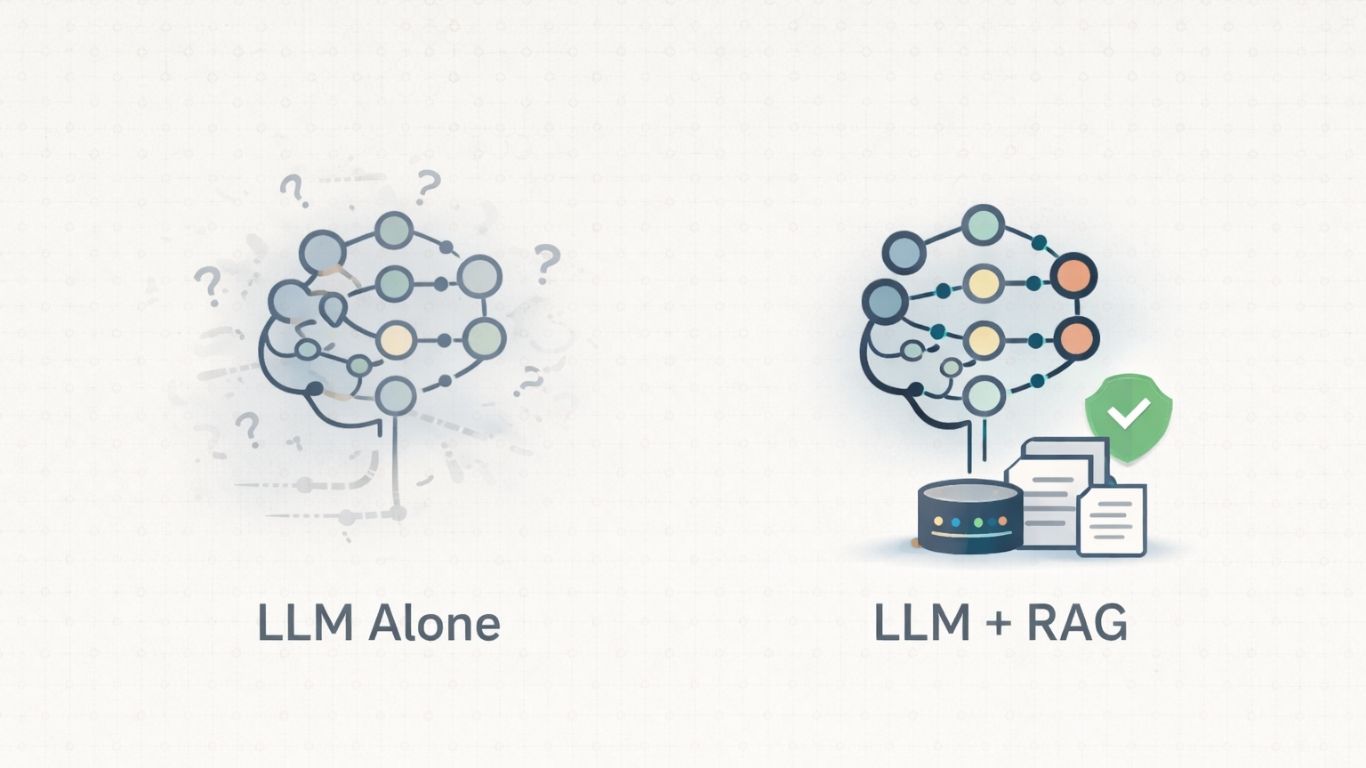

RAG keeps the model frozen, enhancing capabilities through external knowledge retrieval at inference time.
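The retrieve-then-generate flow can be sketched in a few lines. To keep the example self-contained, documents are scored with bag-of-words cosine similarity, standing in for a real embedding model and vector database. The sketch stops at building the augmented prompt and leaves out the call to the frozen model. All names and documents are hypothetical.

```python
import math
import re
from collections import Counter


def bow(text):
    """Bag-of-words vector; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(documents, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]


def build_prompt(query, documents, k=2):
    """Ground the unchanged model by pasting retrieved context into the prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    "The refund window for online orders is 30 days.",
    "Store hours are 9am to 6pm on weekdays.",
    "Refunds are issued to the original payment method.",
]
prompt = build_prompt("How many days do I have to request a refund?", docs)
```

Updating what the system knows means editing `docs` (or the index behind it), not retraining; this is the property the comparison below relies on.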

Knowledge Retention

Fine-tuning carries a constant risk of catastrophic forgetting. RAG sidesteps this entirely: the base model's parameters never change, so nothing is forgotten.

When RAG Dominates

RAG wins decisively when you need to incorporate existing knowledge or entirely new facts. Retrieved documents provide grounding that reduces hallucination and enables access to information beyond the training data.

When Fine-Tuning Wins

Fine-tuning excels when you need to modify model behavior, output style, or task-specific optimization patterns.

- Legal documents demand precise terminology and citation formats.

- Customer service chatbots maintaining consistent brand voice achieve better results through fine-tuning.

Combining Both Approaches

Microsoft tested fine-tuned models with RAG augmentation. Some combinations showed clear improvements, others showed no benefit or slight degradation. Success depends on specific use cases.

The Paraphrase Discovery: Exposing models to numerous paraphrases of the same fact significantly improves learning outcomes. Models seeing 10 paraphrased versions achieved markedly higher accuracy than those exposed to facts only once.

Parameter-Efficient Fine-Tuning Methods

The Memory Problem

Full fine-tuning needs memory for the model plus gradients, optimizer states, and forward activations during backpropagation. A 7-billion parameter model often exceeds consumer hardware capabilities.
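A back-of-the-envelope estimate shows why. For mixed-precision training with the Adam optimizer, a widely used rule of thumb (from the ZeRO paper) is about 16 bytes per parameter. That breaks down as 2 bytes for 16-bit weights, 2 for gradients, and 12 for the fp32 master weights plus Adam's two moment estimates. Activations come on top of this and depend on batch size and sequence length, so the sketch leaves them out.

```python
BYTES_PER_PARAM_FULL = 2 + 2 + 12  # weights + gradients + fp32 Adam state (ZeRO rule of thumb)


def full_finetune_gb(n_params):
    """Approximate memory for weights, gradients, and optimizer state, in GB."""
    return n_params * BYTES_PER_PARAM_FULL / 1e9


def frozen_base_gb(n_params, bytes_per_weight=2):
    """Memory for a frozen base model that only needs its weights stored, in GB."""
    return n_params * bytes_per_weight / 1e9


full = full_finetune_gb(7e9)    # about 112 GB before activations
frozen = frozen_base_gb(7e9)    # about 14 GB in 16-bit; adapters add a little on top
```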

LoRA: Low-Rank Adaptation

LoRA freezes pre-trained weights and inserts small adapter matrices into transformer layers. Weight changes get represented as the product of two smaller matrices, reducing trainable parameters by up to 10,000 times with minimal performance impact.
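The core of LoRA fits in a few lines. For a frozen weight matrix W of shape d_out × d_in, LoRA learns two matrices, B (d_out × r) and A (r × d_in), and computes W·x + (α/r)·B·A·x. B starts at zero, so the adapted layer initially behaves exactly like the pre-trained one. The pure-Python sketch below uses tiny illustrative sizes. The 4096 × 4096 matrix and rank r = 8 in the parameter count are assumptions chosen for illustration, not figures from this article.

```python
import random


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [h + (alpha / r) * u for h, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Trainable parameter counts for one matrix: full fine-tuning vs LoRA."""
    return d_out * d_in, r * (d_in + d_out)


rng = random.Random(0)
d_in, d_out, r = 4, 3, 2
W = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(r)]  # random init
B = [[0.0] * r for _ in range(d_out)]                               # zero init
x = [1.0, 0.5, -0.5, 2.0]
full, lora = trainable_params(4096, 4096, 8)  # 16,777,216 vs 65,536
```

For this single matrix, LoRA trains 256 times fewer parameters. Savings across a whole model depend on which layers receive adapters and on the chosen rank.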

QLoRA: Quantized LoRA

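QLoRA quantizes the base model to 4-bit precision while training LoRA adapters in higher precision. According to the QLoRA paper, this cuts the memory needed to fine-tune a 65-billion parameter model from more than 780GB to under 48GB, enough to fit on a single 48GB GPU. In the authors' experiments, it did so while matching the performance of a 16-bit fully fine-tuned baseline.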
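To illustrate what 4-bit storage involves, the sketch below applies simple block-wise absmax quantization. Each block of weights is scaled by its largest magnitude, then rounded to one of the integer levels -7 to 7, which fit in 4 bits. This is a deliberate simplification: QLoRA itself uses the NF4 data type plus double quantization of the scales, and this code implements neither. The block size is an illustrative choice.

```python
def quantize_4bit(weights, block_size=64):
    """Block-wise absmax quantization to signed 4-bit levels in [-7, 7]."""
    codes, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        scale = max(abs(w) for w in block) or 1.0  # avoid dividing by zero
        scales.append(scale)
        codes.extend(round(w / scale * 7) for w in block)
    return codes, scales


def dequantize_4bit(codes, scales, block_size=64):
    """Map integer codes back to approximate float weights."""
    return [c / 7 * scales[i // block_size] for i, c in enumerate(codes)]


weights = [0.12, -0.5, 0.33, 0.01, -0.07, 0.25, 0.9, -0.44]
codes, scales = quantize_4bit(weights, block_size=4)
restored = dequantize_4bit(codes, scales, block_size=4)
```

At half a byte per weight, the weights of a 65-billion parameter model take roughly 32.5GB before scales, adapters, and activations are added.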

How to Choose Between RAG and Fine-Tuning

Understanding Your Data’s Nature

Static knowledge domains (historical events, established scientific principles) favor fine-tuning. Dynamic knowledge requiring frequent updates (news, stock prices, product catalogs) favors RAG.

Update Frequency

Daily or hourly updates: RAG becomes essential.

Quarterly or annual updates: Fine-tuning becomes viable.

Resource Constraints

Fine-tuning demands:

- GPU clusters or expensive cloud time

- ML engineering expertise

- Substantial time investment

RAG implementations need:

- Embedding models and vector databases

- Less specialized expertise

- Minimal training overhead

- Simpler update procedures

Knowledge Type

Factual recall tasks favor RAG:

- Product specifications

- Historical lookups

- Technical documentation

- Current events

Reasoning enhancement tasks favor fine-tuning:

- Domain-specific inference patterns

- Complex problem-solving requiring specialized logic

Decision Framework

Work through these questions systematically:

- How frequently does your knowledge base change?

- What resources (compute, expertise, time) do you have?

- Does your application prioritize speed or accuracy?

- Are you teaching new facts or changing how the model thinks?

- Can you achieve good results with simpler approaches first?
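The questions above can be encoded as a rough rule-of-thumb helper. This is a hypothetical function that restates this article's guidance: frequent updates or new facts point to RAG, behavior and style changes point to fine-tuning, needing both points to a combination, and simpler methods come first. It is no substitute for evaluating candidates on your own data.

```python
def recommend_approach(updates_often, needs_new_facts, needs_behavior_change,
                       prompting_sufficient=False):
    """Hypothetical rule of thumb mirroring the decision questions above."""
    if prompting_sufficient:
        return "prompt engineering"  # start simple
    wants_rag = updates_often or needs_new_facts
    if wants_rag and needs_behavior_change:
        return "RAG + fine-tuning"
    if wants_rag:
        return "RAG"
    if needs_behavior_change:
        return "fine-tuning"
    return "prompt engineering"
```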

OpenAI's fine-tuning guide recommends trying prompt engineering, prompt chaining, and function calling before investing in fine-tuning.

Start simple.

Add complexity only when simpler methods fall short.

Why Choose HBLAB for RAG and LLM Fine-Tuning

At HBLAB, AI is a proven capability built over nine years of focused research and enterprise collaboration. Our teams understand both cutting-edge large language model technologies and the practical constraints of your business, delivering solutions designed for reliability, governance, and long-term maintainability.

We combine two powerful approaches to build AI assistants that actually know your business.

Our M-RAG platform connects models directly to your internal knowledge bases, grounding every answer in real documents to reduce hallucinations. Through enterprise-grade LLM fine-tuning, we shape model behavior, including tone of voice, workflows, and domain-specific reasoning, so the system speaks your language and fits your processes.

HBLAB delivers production-ready systems. Backed by 50+ AI experts, Kaggle-ranked leaders, and robust MLOps capabilities across AWS, Azure, and GCP, we handle everything from data preparation and model selection through RAG pipelines, parameter-efficient fine-tuning, and continuous monitoring.

We become your “One Team” AI partner, turning cutting-edge LLM technology into measurable business outcomes.

Conclusion

LLM fine-tuning transforms general-purpose language models into specialized experts. Organizations across healthcare, finance, legal, and technical sectors achieve measurable improvements through domain-specific training.

The RAG vs Fine-Tuning Verdict

RAG consistently outperforms unsupervised fine-tuning for incorporating new factual information. In the Microsoft study, the gap was widest on current events: 87.5% versus 58.8% accuracy with Mistral-7B. Fine-tuning excels at behavior modification, style consistency, and specialized reasoning patterns.

Choose based on requirements:

- Dynamic knowledge → RAG

- Behavior modification → Fine-tuning

- Complex reasoning with current facts → Combine both

Contact us for a free consultation!

The Democratization of Fine-Tuning

Parameter-efficient methods transformed access. LoRA, QLoRA, and adapter tuning sharply reduce memory requirements. Consumer GPUs can now fine-tune models that previously demanded expensive cloud infrastructure.

The Path Forward

Start by evaluating whether you actually need fine-tuning. Try prompt engineering and RAG first—they often achieve comparable results with far less effort.

When fine-tuning proves necessary:

- Invest in quality data preparation

- Start with parameter-efficient methods

- Evaluate comprehensively (task performance + general capabilities)

- Plan for ongoing maintenance

The technology continues evolving rapidly through multimodal capabilities, federated learning, and continual adaptation. But fundamental principles remain constant: understand your requirements, match methods to problems, and start simple.
