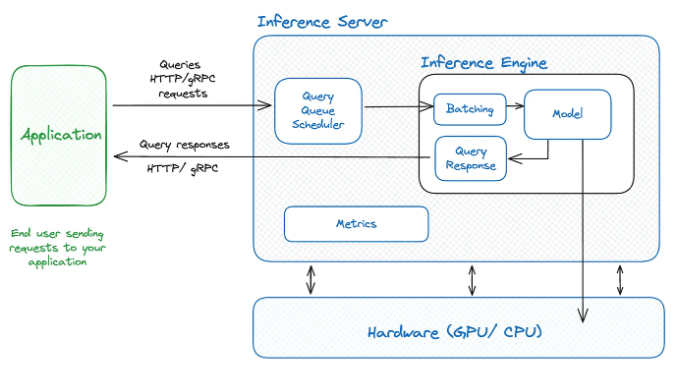

Deploying large language models (LLMs) presents many technical challenges, especially as the models grow more complex. These models often require high-performance hardware with large memory capacity to hold their parameters and intermediate data. This not only raises infrastructure costs but also demands careful optimization of the serving process. The sections below present a serving system architecture for LLMs, designed to meet performance and scalability requirements in real-world environments.

End users send queries to the application, which forwards them to an inference server. There, the queries are placed in a queue managed by the scheduler, which decides when to process each request based on the available hardware resources. Inside the inference server, the inference engine groups requests into batches to improve performance before processing them and returning the responses to the users.

1. Prefill and Decoding

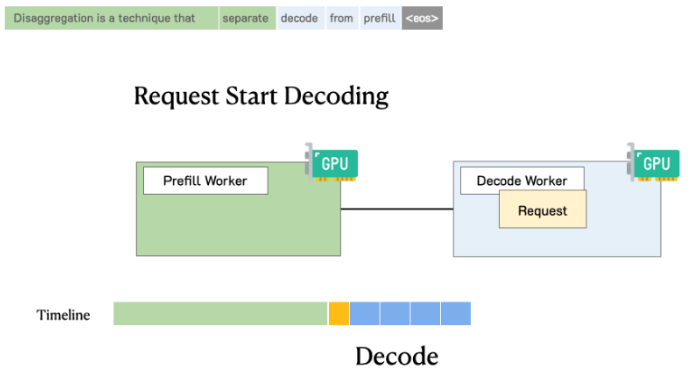

During LLM inference, output is not generated in a single uniform step; the process is divided into two main stages: prefill and decode. Each stage plays an important role and has its own technical characteristics, which directly affect the performance and processing speed of the entire system. Understanding these two stages is essential for optimizing model deployment and serving.

1.1 Prefill

- During this stage, the input text is converted into tokens and the model processes all of them together. It computes the necessary intermediate representations, such as the KV cache, which stores information about the input tokens.

- The main goal of prefill is to prepare the model to generate output. Because all input tokens are known in advance, they can be processed in parallel. This stage is therefore compute-intensive and makes full use of the GPU.

1.2 Decoding

- During the decoding stage, the model generates output tokens sequentially. Each new token is predicted from the previously generated tokens and the information stored in the KV cache during the prefill stage.

- The goal of this stage is to generate coherent tokens that are contextually relevant to the prompt. Because each token depends on the tokens before it, this stage runs sequentially and is slower than prefill, leaving the GPU’s parallel capacity underutilized. Decoding continues until the maximum number of tokens is reached or a stop token is generated.
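The two stages can be sketched with a toy model. This is only an illustration of the control flow: `toy_next_token`, `STOP_TOKEN`, and the integer "cache" are hypothetical stand-ins, not a real transformer or a real KV cache.

```python
from typing import List, Tuple

STOP_TOKEN = 0  # hypothetical end-of-sequence token id


def toy_next_token(kv_cache: List[int]) -> int:
    """Stand-in for a forward pass: derives the next token from the cached state."""
    return (sum(kv_cache) * 7 + len(kv_cache)) % 10


def prefill(prompt: List[int]) -> Tuple[List[int], int]:
    """Process the whole prompt in one step, build the cache, emit the first token."""
    kv_cache = list(prompt)  # one cache entry per prompt token
    return kv_cache, toy_next_token(kv_cache)


def decode(kv_cache: List[int], first_token: int, max_new_tokens: int) -> List[int]:
    """Generate tokens one at a time until the limit or the stop token is reached."""
    output: List[int] = []
    token = first_token
    while len(output) < max_new_tokens:
        output.append(token)
        if token == STOP_TOKEN:
            break
        kv_cache.append(token)            # the cache grows by one entry per step
        token = toy_next_token(kv_cache)  # each step depends on all earlier tokens
    return output


cache, first = prefill([3, 1, 4])
print(decode(cache, first, max_new_tokens=4))
```

Prefill touches every prompt token in a single call, while decode needs one call per generated token, which is why the second stage cannot be parallelized across output positions.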

2. Batching

GPUs are massively parallel processors with very high compute throughput. Despite this, LLM inference is mostly bottlenecked by the limited GPU memory bandwidth, most of which is spent loading model parameters. Batching is one way to improve this situation. Instead of loading the parameters again for every new input sequence, we load them once and use them to process multiple input sequences. This uses memory bandwidth more efficiently, leading to higher compute utilization, greater throughput, and lower cost.
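A rough back-of-the-envelope sketch of this effect, assuming each decode step is limited only by reading all weights once. The weight size and bandwidth figures are hypothetical, and the model ignores KV cache traffic and the compute limit, so real scaling flattens at larger batch sizes.

```python
def decode_throughput(weight_bytes: float, bandwidth_bytes_per_s: float, batch_size: int) -> float:
    """Tokens per second when every decode step must read all weights once."""
    step_time_s = weight_bytes / bandwidth_bytes_per_s  # same cost for any batch size
    return batch_size / step_time_s                     # one new token per sequence per step


WEIGHTS = 14e9    # hypothetical: 7B parameters stored in 16 bits
BANDWIDTH = 1e12  # hypothetical: 1 TB/s of GPU memory bandwidth

for bs in (1, 8, 32):
    print(bs, round(decode_throughput(WEIGHTS, BANDWIDTH, bs)), "tokens/s")
```

Under these assumptions the time per step stays constant, so throughput grows linearly with the batch size.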

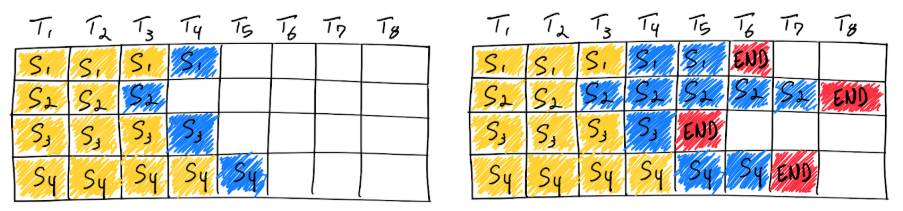

2.1 Naive Batching

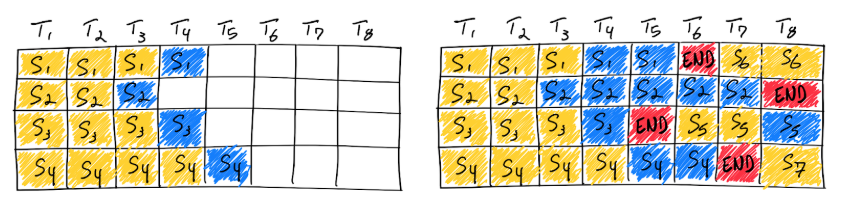

- This traditional approach keeps the batch size and the memory allocation fixed until inference for the whole batch is complete. Unlike traditional deep learning models, LLMs are hard to batch because of the autoregressive nature of their inference. Intuitively, one would like requests that finish early to leave the batch, but it is very difficult to free their resources and add new requests to a batch whose members are at different stages of completion. Because the sequences in a batch have different lengths, the GPU is not fully utilized. The image above illustrates this: the white cells belong to requests that have finished generating but whose slots are still not freed. For example, when a batch contains simple questions that all have 128 input tokens and 16 output tokens, naive batching is effective. However, GPT-style chatbot services have neither fixed input lengths nor a fixed number of output tokens. There, naive batching leads to inefficient GPU utilization and very high serving costs.
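The waste can be estimated with simple arithmetic. The sketch below counts only decode slots and assumes the batch runs until its longest sequence finishes; the output lengths are made-up examples.

```python
from typing import List


def naive_batch_utilization(output_lengths: List[int]) -> float:
    """Fraction of (sequence, step) slots that do useful work in a static batch."""
    steps = max(output_lengths)                # the batch runs until the longest sequence ends
    total_slots = steps * len(output_lengths)  # every sequence holds its slot for all steps
    return sum(output_lengths) / total_slots


print(naive_batch_utilization([16, 16, 16, 16]))  # 1.0: equal lengths, nothing wasted
print(naive_batch_utilization([4, 16, 2, 10]))    # 0.5: half of the slots sit idle
```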

2.2 Continuous Batching

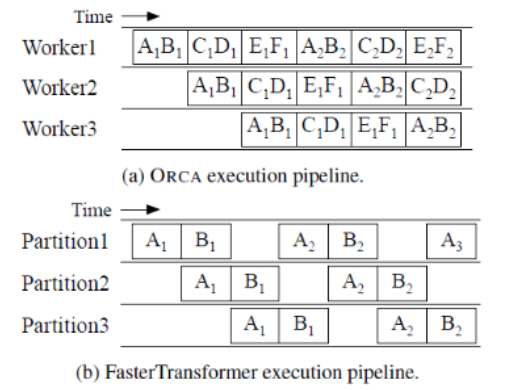

- To address this major issue of naive batching, ORCA proposed a new approach. Instead of waiting for every sequence in a batch to finish generating tokens, ORCA schedules at the iteration level, where the batch size is determined for each iteration. As a result, as soon as a sequence in the batch completes, a new sequence can be inserted in its place, keeping GPU utilization high.

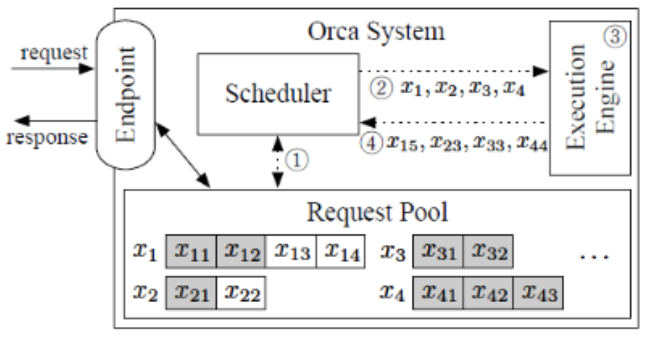

- Iteration-level scheduling: ORCA exposes an endpoint to receive inference requests and places them in a request pool. The scheduler selects requests from the pool, asks the execution engine to run one iteration of the model on them, receives the output tokens from the engine, and updates the pool accordingly. The engine performs the tensor operations and can parallelize them across multiple GPUs and machines. Because the scheduler decides dynamically which requests run in each iteration, it can handle incoming requests flexibly. When a request completes, it is removed from the pool and its response is sent back through the endpoint.
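A small simulation can make the difference to naive batching concrete. This is a simplified sketch, not ORCA's implementation: each request is reduced to the number of tokens it still has to generate, one iteration yields one token per running request, and the function names are hypothetical.

```python
from collections import deque
from typing import List


def run_naive(request_lengths: List[int], max_batch_size: int) -> int:
    """Iterations needed when each batch runs until its longest request ends."""
    iterations = 0
    for i in range(0, len(request_lengths), max_batch_size):
        iterations += max(request_lengths[i:i + max_batch_size])
    return iterations


def run_continuous(request_lengths: List[int], max_batch_size: int) -> int:
    """Iterations needed when freed slots are refilled before every iteration."""
    pool = deque(request_lengths)  # waiting requests, in arrival order
    running: List[int] = []        # tokens left for each running request
    iterations = 0
    while pool or running:
        while pool and len(running) < max_batch_size:
            running.append(pool.popleft())       # fill free slots from the pool
        running = [left - 1 for left in running]  # one iteration: one token each
        running = [left for left in running if left > 0]  # finished requests leave
        iterations += 1
    return iterations


lengths = [2, 8, 3, 5]
print(run_naive(lengths, 2), run_continuous(lengths, 2))
```

With these example lengths and a batch size of 2, the iteration-level version needs fewer iterations because a short request no longer blocks its slot until the longest request in the batch finishes.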

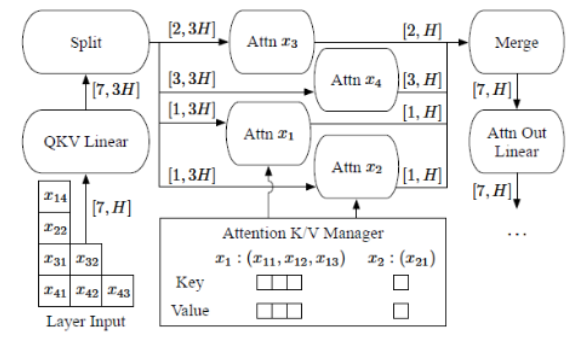

- Selective batching: This technique makes batched execution more flexible by applying batching selectively rather than uniformly to all tensor operations. For non-attention operations such as matrix multiplication and normalization, selective batching flattens the input tensors of all requests into a single 2D structure. These operations work on a per-token basis, so tokens from different requests can be processed as if they belonged to the same request. Attention operations, however, need the keys and values of each individual request, so the batch is split and each request is processed separately. After the attention operation completes, the outputs are merged back into a single tensor for the subsequent operations.
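The flatten, split, and merge steps can be sketched with plain Python lists. The per-token operation and the "attention" below are deliberately trivial stand-ins (a scaling and a running average), chosen only to show which step may ignore request boundaries and which may not.

```python
from typing import List, Tuple

Vector = List[float]


def flatten(requests: List[List[Vector]]) -> Tuple[List[Vector], List[int]]:
    """Concatenate the tokens of all requests into one [total_tokens, hidden] list."""
    flat = [tok for req in requests for tok in req]
    return flat, [len(req) for req in requests]


def split(flat: List[Vector], lengths: List[int]) -> List[List[Vector]]:
    """Cut the flat token list back into one chunk per request."""
    out, start = [], 0
    for n in lengths:
        out.append(flat[start:start + n])
        start += n
    return out


def per_token_op(flat: List[Vector]) -> List[Vector]:
    """Stand-in for a linear layer or normalization: works row by row."""
    return [[2.0 * x for x in tok] for tok in flat]


def toy_attention(req: List[Vector]) -> List[Vector]:
    """Stand-in for attention: each token averages itself and earlier tokens of the same request."""
    out = []
    for i in range(len(req)):
        ctx = req[:i + 1]
        out.append([sum(col) / len(ctx) for col in zip(*ctx)])
    return out


def layer(requests: List[List[Vector]]) -> Tuple[List[Vector], List[int]]:
    flat, lengths = flatten(requests)
    flat = per_token_op(flat)                                    # batched over all tokens
    attended = [toy_attention(r) for r in split(flat, lengths)]  # per request
    merged, _ = flatten(attended)                                # merge for later operations
    return merged, lengths


print(layer([[[1.0], [3.0]], [[5.0]]]))
```

Two requests of different lengths (2 tokens and 1 token) share the per-token step without padding, and only the attention stand-in sees them separately.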

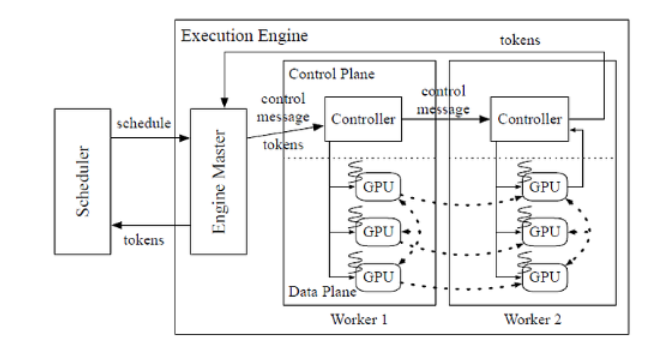

- Distributed architecture: ORCA combines two model parallelism techniques for transformer models: intra-layer and inter-layer parallelism. Intra-layer parallelism splits matrix multiplications and their parameters across multiple GPUs, whereas inter-layer parallelism splits the transformer layers across multiple GPUs. The ORCA execution engine supports distributed execution by running each worker on a different machine, where it manages one or more CPU threads that control the GPUs. Each worker is responsible for one inter-layer partition of the model, and its number of threads is determined by the degree of intra-layer parallelism. When an iteration of the model is scheduled for a batch of requests, the engine gives the first worker the necessary information. That worker then passes a message to the next worker without waiting for its own GPU tasks to complete. The final worker waits for its GPU tasks to finish before collecting the output tokens and sending them back to the engine. This allows efficient distributed execution across multiple machines and GPUs.

- Scheduling algorithm: ORCA’s scheduler selects a limited number of requests per iteration, prioritized by arrival time. When a request is scheduled for the first time, the scheduler allocates memory slots to store its keys and values. In each scheduling step, a select function picks a batch of requests from the pool so that earlier arrivals come first. The scheduler also checks whether there is enough memory for new requests, based on the maximum number of tokens each may require. Finally, the scheduler keeps the workers busy by pipelining batches: instead of waiting for one batch to complete before scheduling the next, it keeps the number of batches running in parallel equal to the number of workers.
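The selection step described above can be sketched as follows. This is a minimal reading of the description, not ORCA's actual code: the `Request` fields, the `select` signature, and the slot accounting are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Request:
    arrival: float          # arrival time, used for first-come-first-served ordering
    max_tokens: int         # upper bound on KV slots this request may need
    reserved: bool = False  # True once its slots have been set aside


def select(pool: List[Request], max_batch_size: int,
           total_slots: int, reserved_slots: int) -> Tuple[List[Request], int]:
    """Pick the next batch by arrival time, reserving memory for newcomers."""
    batch: List[Request] = []
    for req in sorted(pool, key=lambda r: r.arrival):
        if len(batch) == max_batch_size:
            break
        if not req.reserved:  # scheduled for the first time
            if reserved_slots + req.max_tokens > total_slots:
                break         # not enough memory: stop admitting new requests
            reserved_slots += req.max_tokens
            req.reserved = True
        batch.append(req)
    return batch, reserved_slots


pool = [Request(2.0, 50), Request(1.0, 40), Request(3.0, 30)]
batch, used = select(pool, max_batch_size=3, total_slots=100, reserved_slots=0)
print([r.arrival for r in batch], used)
```

In this example the third request is left in the pool because reserving its 30 slots would exceed the 100 available, even though the batch still has room.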

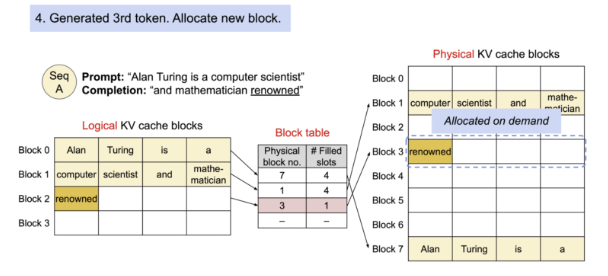

3. Paged Attention

- Research shows that existing serving systems waste 60-80% of KV cache memory. A method is therefore needed to reduce this waste so that the same output can be achieved with fewer GPU resources.

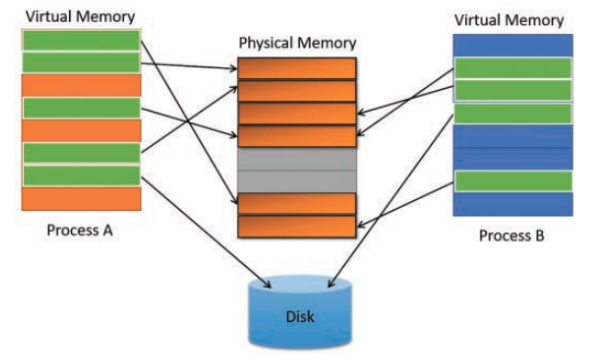

- Paged Attention is inspired by virtual memory paging in operating systems, which manages and allocates memory in pages. It allows memory to be used more efficiently and reduces waste.

3.1 KV-Cache

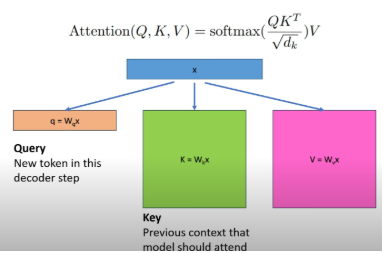

- The KV cache plays a crucial role in text generation. The self-attention mechanism uses matrix operations to determine which parts of the text are relevant to the token currently being generated. For each generated token, matrix multiplications over the Q, K, and V values are needed, which creates a large computational demand.

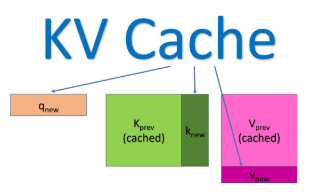

- Therefore, instead of recomputing the K and V values each time a new token is generated, we store the K and V matrices and reuse them in subsequent steps. The cache is initially empty, and in the traditional approach memory for the entire sequence must be reserved in advance before new tokens can be generated. Such a traditional KV cache implementation wastes a significant amount of memory, because most of the reserved space is never used.
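To get a feeling for the sizes involved, the cache footprint of one sequence can be estimated as 2 (one K and one V) times layers, heads, head dimension, bytes per value, and token count. The model shape below is hypothetical and chosen only for illustration.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to hold keys and values for `tokens` tokens of one sequence."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens  # 2 = K and V


# Hypothetical model shape (not taken from any specific model).
LAYERS, KV_HEADS, HEAD_DIM = 32, 32, 128

reserved = kv_cache_bytes(2048, LAYERS, KV_HEADS, HEAD_DIM)  # reserved for the maximum length
used = kv_cache_bytes(200, LAYERS, KV_HEADS, HEAD_DIM)       # the request stops after 200 tokens

print(reserved / 2**30, "GiB reserved")
print(f"{used / reserved:.1%} of the reservation is actually used")
```

With this shape, every token costs 0.5 MiB of cache, so reserving space for the maximum length up front leaves most of the reservation idle when a request ends early.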

3.2 Optimize KV cache with Paged Attention

- Existing KV cache systems store each request’s cache in contiguous memory. This causes significant waste through the following issues:

- Internal fragmentation: Memory for the maximum sequence length is allocated as soon as the first token is generated, but it is never fully used because the system does not know how many tokens the model will generate.

- Reservation: To ensure that token generation is not interrupted, the system reserves all of this memory exclusively for the request during the whole generation process. Even if only part of the allocated memory is in use, the remainder cannot be accessed by other requests.

- External fragmentation: External fragmentation occurs when the allocated memory regions leave gaps between them that do not fit new memory requests and therefore cannot be allocated to other requests.

- As a result, about 60-80% of the allocated KV cache memory goes unused. Paged Attention therefore applies memory paging techniques to manage the KV cache more efficiently. It allocates blocks dynamically, so that logically contiguous blocks can be stored in arbitrary, non-contiguous parts of the shared memory space.

- Paged Attention eliminates external fragmentation, where gaps between memory regions go unused, and minimizes internal fragmentation, where more memory is allocated than is actually needed. It reduces KV cache memory waste from 60-80% to about 4%, which enables inference with larger batch sizes.

- In addition, Paged Attention supports other useful features such as parallel sampling and decoding. This generates multiple output sequences from the same prompt at the same time, making it possible to offer several responses or to pick the best one. It also supports outputs produced with different decoding strategies such as top-p and top-k.
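The block-based allocation described in this section can be sketched with a small block table. This is a toy model of the bookkeeping only, under the assumption of fixed-size blocks and one table per request; the class and method names are hypothetical, and no attention kernel or real tensor storage is involved.

```python
from typing import Dict, List


class PagedKVCache:
    """Toy block allocator: each request owns a table of fixed-size blocks."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))  # physical block ids
        self.block_tables: Dict[str, List[int]] = {}           # request -> block ids
        self.lengths: Dict[str, int] = {}                      # request -> tokens stored

    def append_token(self, req_id: str) -> None:
        """Store one more token, allocating a new block only when the last one is full."""
        table = self.block_tables.setdefault(req_id, [])
        n = self.lengths.get(req_id, 0)
        if n == len(table) * self.block_size:  # no block yet, or last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())  # any free block will do
        self.lengths[req_id] = n + 1

    def wasted_slots(self, req_id: str) -> int:
        """Unused slots, which can only exist in the request's last block."""
        return len(self.block_tables[req_id]) * self.block_size - self.lengths[req_id]

    def free(self, req_id: str) -> None:
        """Return all blocks of a finished request to the free list."""
        self.free_blocks.extend(self.block_tables.pop(req_id, []))
        self.lengths.pop(req_id, None)


cache = PagedKVCache(num_blocks=4, block_size=4)
for _ in range(5):
    cache.append_token("a")
print(cache.block_tables["a"], cache.wasted_slots("a"))
```

Because memory is handed out one block at a time, the waste per request is bounded by one partially filled block instead of the gap between the actual and the maximum sequence length.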

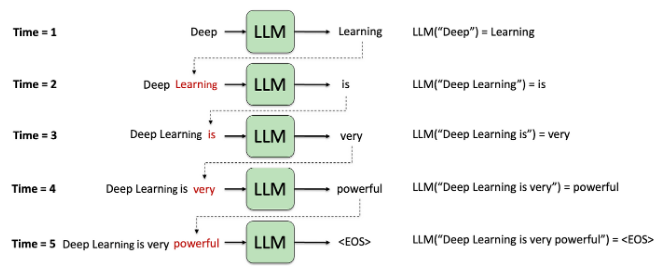

4. Radix Attention

4.1 The issue of redundancy in prefix processing

- During the serving process of large language models (LLM), after each inference, the system often frees the entire KV cache – including intermediate representations computed during the prefill stage. This leads to an important limitation: different requests, even if they share the same input (prefix), cannot reuse the computed results, causing redundant computations and reducing processing efficiency.

- This challenge is even more evident in systems that need to process batch queries with similar content (e.g., chat, context-based search). To address this, an effective approach has been proposed: using radix tree data structures to store and retrieve KV cache based on prefix.

4.2 Radix Attention

- Radix Attention is a technique developed to optimize the reuse of KV cache between queries with similar input. This method uses a radix tree to systematically organize and manage KV cache: each node in the tree represents a sequence of tokens, accompanied by corresponding KV cache vectors.

- When a new query arrives, the system will search in the radix tree to determine the longest prefix that matches the input token string. If found, the corresponding KV cache will be reused, helping to minimize computation in the prefill stage.

- Instead of removing KV cache after a request has ended, the system will store them in a radix tree and map them according to the corresponding token strings. For parts that are no longer in use, Radix Attention applies a removal strategy based on the LRU (Least Recently Used) algorithm to free up memory intelligently and efficiently.

- Thanks to the ability to reuse KV cache by prefix, Radix Attention is particularly suitable for applications with a large number of consecutive or parallel queries with similar content. However, in cases where the queries have diverse input contexts, do not share a prefix, or require extremely low latency and optimal memory management, the application of this method needs to be carefully considered.
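The lookup, insertion, and LRU eviction described above can be sketched as follows. To keep the example short it uses a plain trie with one node per token rather than a compressed radix tree, and the nodes carry only a timestamp instead of real KV tensors; all names are hypothetical.

```python
import itertools
from typing import Dict, List, Optional, Tuple


class Node:
    def __init__(self) -> None:
        self.children: Dict[int, "Node"] = {}
        self.last_used = 0  # logical timestamp of the last access


class PrefixCache:
    def __init__(self) -> None:
        self.root = Node()
        self.clock = itertools.count(1)

    def match_prefix(self, tokens: List[int]) -> int:
        """Return how many leading tokens already have a cached entry."""
        node, matched, now = self.root, 0, next(self.clock)
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                break
            child.last_used = now
            node, matched = child, matched + 1
        return matched

    def insert(self, tokens: List[int]) -> None:
        """Keep the entries of a finished request instead of discarding them."""
        node, now = self.root, next(self.clock)
        for tok in tokens:
            node = node.children.setdefault(tok, Node())
            node.last_used = now

    def evict_lru_leaf(self) -> bool:
        """Remove the least recently used leaf; return False if nothing is cached."""
        best: Optional[Tuple[int, Node, int]] = None  # (last_used, parent, token)
        stack = [self.root]
        while stack:
            parent = stack.pop()
            for tok, child in parent.children.items():
                if child.children:
                    stack.append(child)
                elif best is None or child.last_used < best[0]:
                    best = (child.last_used, parent, tok)
        if best is None:
            return False
        del best[1].children[best[2]]
        return True


cache = PrefixCache()
cache.insert([1, 2, 3, 4])
print(cache.match_prefix([1, 2, 3, 9]))  # the first three tokens can skip prefill
```

Evicting leaves first keeps shared prefixes alive for as long as any longer sequence still depends on them.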

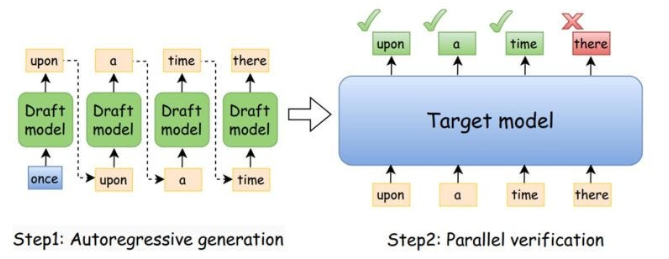

5. Speculative Decoding

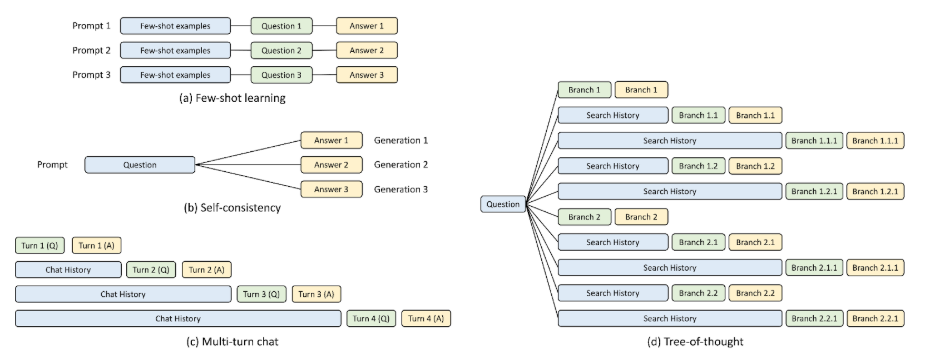

Demand for real-time or near real-time text generation is growing while model sizes are increasing rapidly, reaching hundreds of billions of parameters. This makes an efficient inference strategy necessary to meet requirements for both speed and cost-effectiveness. Speculative decoding achieves this by using two models together: a smaller draft model, distilled from the target model, generates tokens, and the target model validates them.

5.1 Traditional decoding

In traditional decoding, the model takes the prompt, or the context generated in the previous steps, as input and predicts a probability distribution over the next token. A token is then chosen with a method such as sampling or greedy search. To generate n tokens, the model must run n forward passes one after another, because each token depends on the tokens before it. This makes every generated token costly.

5.2 Speculative decoding

- Speculative decoding addresses this problem. It uses a draft model that is much smaller than the target model and can therefore generate tokens sequentially much faster. The tokens produced by the draft model are then fed into the target model for validation, which may require only a single forward pass. Given a trained target model and a draft model distilled from it, serving the target model with speculative decoding works as follows:

- Draft model: Generate K candidate tokens based on the input prompt.

- Target model: Perform one forward pass to validate the K proposed tokens.

- Accept or reject: If all K tokens are accepted, they are returned to the user. If not, the system rolls back to the last accepted token and continues generation from there with the target model.

- The advantage of this method is that it reduces the number of forward passes of the large target model, which is slow and costly. However, the benefit depends on the quality of the draft model: if the draft model is poor, most of its tokens are rejected, leading to both slow responses and high cost.
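One draft-and-verify round can be sketched as below. Two simplifications are assumed: acceptance is greedy (a draft token is accepted only if it equals the target's own choice, instead of probabilistic rejection sampling), and the target's single batched forward pass is simulated by one call per position. The `draft_next` and `target_next` callables are hypothetical stand-ins for the two models.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # maps a context to the next token id


def speculative_step(context: List[int], draft_next: NextToken,
                     target_next: NextToken, k: int) -> List[int]:
    """Run one round: the draft proposes k tokens, the target verifies them."""
    # 1. The small draft model proposes k tokens sequentially.
    proposal: List[int] = []
    for _ in range(k):
        proposal.append(draft_next(context + proposal))

    # 2. The target model checks each position (one batched pass in a real system).
    accepted: List[int] = []
    for tok in proposal:
        expected = target_next(context + accepted)
        if tok != expected:
            accepted.append(expected)  # keep the target's token and stop this round
            break
        accepted.append(tok)
    return accepted


def target(ctx: List[int]) -> int:
    return (len(ctx) * 3) % 7  # toy "large" model


def draft(ctx: List[int]) -> int:
    return 0 if len(ctx) == 4 else target(ctx)  # toy draft that errs at one position


print(speculative_step([1, 1], draft, target, k=4))
```

With greedy acceptance the result is always a prefix of what the target model alone would have produced, so quality is preserved and only the number of target runs changes.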

6. Quantization

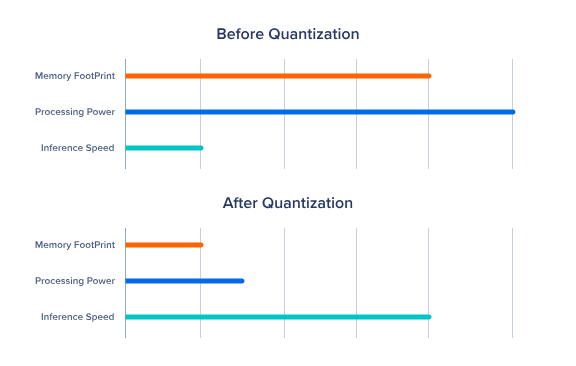

Currently, LLMs with hundreds of billions of parameters require large amounts of compute and memory, which makes deployment difficult. Model weights are typically trained in a high-precision 32-bit format. This format is memory-hungry: every 1B parameters need about 4 GB of VRAM just to load the model. Quantization therefore compresses the model weights to 16-bit, 8-bit, or 4-bit formats to reduce model size and speed up inference while maintaining acceptable quality.
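The 4 GB figure follows directly from the bit width. A short sketch of the arithmetic, counting the weights only (no activations or KV cache) and using 1 GB = 10^9 bytes; the 7B model size is just an example.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8  # bits -> bytes, billions -> GB


for bits in (32, 16, 8, 4):
    print(f"7B parameters at {bits}-bit: {weight_memory_gb(7, bits)} GB")
```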

6.1 Benefits of Quantization

- Smaller model size: Quantization significantly reduces the storage needed for the model, making storage and deployment easier.

- Lower memory usage: During inference, quantized models require less memory, allowing them to run on resource-limited devices.

- Faster inference: Hardware such as NVIDIA GPUs is optimized for low-precision computation, for example 4-bit. Running quantized models on such hardware reduces inference time.

6.2 Some quantization strategies

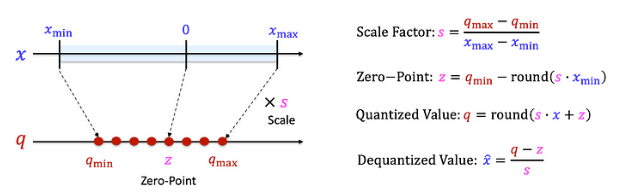

- Linear quantization: Linear quantization optimizes deep learning models by converting weight values from a floating-point format (FP32) to a lower-precision format (typically INT8) using a linear mapping, which significantly reduces model size and computational cost while maintaining acceptable prediction quality. The mapping takes a range of values in the source format, such as FP32, to a set of values in a lower-bit format, such as INT8, using a scale and a zero-point. This accelerates inference on hardware such as CPUs, GPUs, TPUs, and NPUs, and is especially useful on edge devices, mobile phones, and resource-constrained embedded systems. The resulting model is lighter and consumes less power while keeping near-equivalent accuracy, which opens up wide-ranging applications in computer vision, natural language processing, and mobile AI.

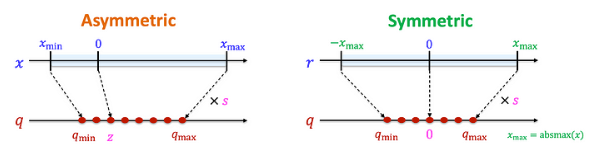

- Asymmetric quantization: This technique converts the weights or activations of a model to a lower-bit format in which the zero-point does not have to lie in the middle of the value range, unlike symmetric linear quantization. The mapping range is defined by the actual minimum and maximum values of the data. The entire bit range is therefore used more efficiently, especially when the data is skewed to one side, which yields a smaller quantization error than symmetric linear quantization.
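The symmetric and asymmetric mappings can be compared with a few lines of plain Python. This is a minimal per-tensor sketch that assumes the input is not constant (otherwise the scale would be zero); the function names are hypothetical, and real libraries differ in rounding and clipping details.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map values to signed integers with the zero-point fixed at 0."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    scale = max(abs(v) for v in values) / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantize_asymmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float, int]:
    """Map [min, max] of the data onto the full unsigned range using a zero-point."""
    qmin, qmax = 0, 2 ** bits - 1  # 0..255 for 8 bits
    lo, hi = min(values), max(values)
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int = 0) -> List[float]:
    """Recover approximate real values from the integer codes."""
    return [(x - zero_point) * scale for x in q]


skewed = [0.0, 0.5, 1.0, 2.0]  # all values on one side of zero
_, sym_scale = quantize_symmetric(skewed)
q, asym_scale, zp = quantize_asymmetric(skewed)
print(sym_scale, asym_scale, zp)
print(dequantize(q, asym_scale, zp))
```

For this one-sided data the asymmetric scale is about half the symmetric one, because the symmetric mapping spends half of its integer range on negative values that never occur.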

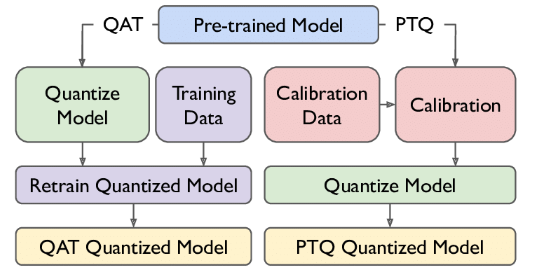

- PTQ (post-training quantization): Quantization is applied directly to a pre-trained model, with no retraining. PTQ analyzes the weight distribution of the model to determine the quantization parameters and converts the weights to a lower-bit format. It is quick and easy, but it may reduce model quality.

- QAT (quantization-aware training): This technique integrates weight quantization into the LLM training process. By simulating lower precision during training, the model learns to adapt to quantization noise. However, it is computationally expensive, and accuracy can still drop if there is no mechanism to handle the model’s sensitive points during training, such as weight gradients.

- Mixed-precision quantization: Different parts of the model use different precisions to balance performance and accuracy. This method is widely used and often gives good results.

Quantization brings many benefits, such as reduced model size and faster inference. However, it also poses challenges: accuracy degradation can affect the model’s results, and deployment becomes more complex, requiring in-depth knowledge of both the techniques and the characteristics of the model.

Conclusion

Deploying LLMs in real-world environments presents challenges that are not only technical but also related to operations and resource optimization. As data volumes grow and user expectations rise, requirements for low latency, flexible scalability, and reasonable operating costs become increasingly urgent. Building systems that serve LLMs efficiently therefore requires combining several optimization techniques carefully and flexibly, both to meet user demand and to keep cost and performance sustainable in the long term.
