Big data analytics has moved from buzzword to backbone of digital business. Across industries, leaders no longer ask whether they should invest in big data analytics but how to operationalize it at scale, embed it into daily decisions, and link it directly to revenue, efficiency, and risk outcomes.

In simple terms, big data analytics is the disciplined process of collecting, processing, and analyzing massive, varied, and fast-moving datasets to uncover patterns, trends, and correlations that would be impractical to find through manual analysis. When done well, it turns customer clicks, sensor readings, transactions, and social interactions into a continuous stream of insight that supports competitive advantage.

This article explains what big data analytics is, how it works end to end, the main types of analytics, real-world use cases, tools, skills, and emerging trends, while keeping a practical focus on how organizations can implement it for measurable business value.

What is big data analytics

Big data analytics applies statistical methods, machine learning, and AI techniques to very large collections of structured, semi-structured, and unstructured data. The goal is to generate insights that support timely and informed decisions.

The discipline is often described in terms of five key characteristics.

- Volume refers to the scale of information, from terabytes to petabytes, generated by applications, connected devices, and enterprise systems.

- Velocity captures the speed at which data arrives, including continuous streams in real time.

- Variety covers the many formats involved, such as tables, text, images, video, logs, and sensor readings.

- Veracity relates to data quality, noise, and uncertainty.

- Value focuses on the outcomes that organizations obtain when they turn raw inputs into improvements in performance or innovation.

Modern platforms that support big data analytics bring together distributed storage, parallel processing, and advanced algorithms. This combination allows teams to move from simple reporting toward use cases such as dynamic pricing, fraud detection, and predictive maintenance, where timeliness and scale both matter.

How big data analytics works from raw data to insight

Although architectures vary, most initiatives follow a similar path from initial data capture to ongoing optimization.

Data collection and ingestion

Organizations collect information from many internal and external systems. Application and web logs record user interactions and system behavior. Customer and resource management systems store structured records of transactions and relationships. Social channels, reviews, and service transcripts hold unstructured feedback and sentiment. Connected devices and industrial equipment produce continuous telemetry and status signals. Financial systems create detailed traces of payments, invoices, and account activity.

Data may arrive as continuous streams or as periodic batches and is often directed into a central repository such as a data lake, warehouse, or lakehouse that can scale horizontally across servers or cloud resources.

Data storage and organization

The storage layer must accommodate both structured tables and unstructured files. Warehouses store curated, modeled data that is ready for reporting and business intelligence. Lakes hold raw files such as JSON, CSV, and binary formats on low cost, scalable storage so that nothing is lost during early stages. Lakehouse approaches combine strengths from both, offering governance and performance while still allowing flexible ingestion.

Technologies such as distributed file systems, cloud object storage, and NoSQL databases play a central role here. They provide the resilience and scalability needed for large analytical workloads without being limited by the capacity of a single machine.
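The split between raw and curated storage can be illustrated with a small sketch. It assumes a hypothetical list of raw JSON strings standing in for files in a lake, and uses an in-memory SQLite database purely as a stand-in for a warehouse table; the field names are invented for illustration.

```python
import json
import sqlite3

# Hypothetical raw events, kept exactly as they arrived (the "lake" side).
RAW_EVENTS = [
    '{"order_id": 1, "customer": "c1", "total": "25.50", "extra": {"coupon": "X"}}',
    '{"order_id": 2, "customer": "c2", "total": "10.00"}',
]

def load_curated(raw_lines, conn):
    """Parse raw JSON and load only the modeled columns into a typed table."""
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
    )
    rows = []
    for line in raw_lines:
        doc = json.loads(line)
        # Unmodeled fields such as "extra" stay in the raw copy only.
        rows.append((doc["order_id"], doc["customer"], float(doc["total"])))
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse, not a real one
load_curated(RAW_EVENTS, conn)
total_revenue = conn.execute("SELECT SUM(total) FROM orders").fetchone()[0]
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The raw strings are never modified, so a later change to the curated model can be replayed from the original data.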

Data processing and transformation

Before advanced analysis can begin, data must be processed. Two main patterns are common. Batch processing handles large collections at scheduled intervals and is well suited to historical reporting and heavy transformations. Stream processing reacts to events as they arrive and is required when use cases need responses in seconds or less, such as fraud checks or personalization.

Frameworks like Apache Spark, Flink, and Kafka Streams distribute work across many nodes. They handle joins, aggregations, and feature engineering at a scale and speed that would be impractical with traditional tools.
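To make the difference between the two patterns concrete, here is a minimal single-machine sketch in plain Python. It is not how Spark or Flink work internally; the event list, the 60 second window, and the function names are assumptions chosen for illustration, and the streaming version assumes events arrive in timestamp order.

```python
from collections import defaultdict

# Hypothetical click events: (timestamp_in_seconds, user_id)
EVENTS = [(0, "u1"), (12, "u2"), (61, "u1"), (65, "u3"), (130, "u2")]

def batch_counts(events, window_seconds=60):
    """Batch style: the whole dataset is available, so aggregate it in one pass."""
    counts = defaultdict(int)
    for ts, _user in events:
        counts[ts // window_seconds] += 1
    return dict(counts)

def stream_counts(event_iter, window_seconds=60):
    """Stream style: emit each tumbling window once a later event closes it."""
    current_window, count = None, 0
    for ts, _user in event_iter:
        window = ts // window_seconds
        if current_window is not None and window != current_window:
            yield current_window, count
            count = 0
        current_window = window
        count += 1
    if current_window is not None:
        yield current_window, count  # flush the last open window

batch_result = batch_counts(EVENTS)
stream_result = list(stream_counts(iter(EVENTS)))
```

Both functions produce the same per-window counts here; the practical difference is that the streaming version can hand over the first window's result before the later events exist.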

Data cleaning and quality management

Large volumes of information amplify quality issues. Records often contain duplicates, missing values, inconsistent formats, or conflicting identifiers. Without systematic cleaning and monitoring, results quickly become unreliable.

Data teams therefore invest in rules for validation, anomaly detection on incoming feeds, reference data management, and standardized taxonomies. Automated checks and observability help detect schema changes or upstream problems before they affect downstream models and dashboards.
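A small sketch shows what rule-based validation and duplicate handling can look like. The records, field names, and rules below are hypothetical; a production pipeline would typically rely on a dedicated data quality tool rather than hand-written checks.

```python
from datetime import datetime

# Hypothetical incoming records with typical quality problems.
RAW_RECORDS = [
    {"id": "A1", "amount": "19.90", "date": "2024-01-05"},
    {"id": "A1", "amount": "19.90", "date": "2024-01-05"},  # duplicate
    {"id": "A2", "amount": "", "date": "2024-01-06"},       # missing value
    {"id": "A3", "amount": "42.00", "date": "06/01/2024"},  # inconsistent format
    {"id": "A4", "amount": "7.50", "date": "2024-01-07"},
]

def validate(record):
    """Return a list of rule violations for one record (empty means clean)."""
    problems = []
    try:
        float(record["amount"])
    except ValueError:
        problems.append("amount is not numeric")
    try:
        datetime.strptime(record["date"], "%Y-%m-%d")
    except ValueError:
        problems.append("date is not ISO formatted")
    return problems

def clean(records):
    """Split records into accepted rows and rejected ids with reasons."""
    seen_ids, accepted, rejected = set(), [], []
    for record in records:
        if record["id"] in seen_ids:
            rejected.append((record["id"], ["duplicate id"]))
            continue
        seen_ids.add(record["id"])
        problems = validate(record)
        if problems:
            rejected.append((record["id"], problems))
        else:
            accepted.append(record)
    return accepted, rejected

accepted, rejected = clean(RAW_RECORDS)
```

Keeping the rejection reasons alongside the record id makes it possible to report quality problems back to the upstream owner instead of silently dropping rows.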

Analysis, modeling, and visualization

Once data is prepared, analysts and data scientists apply a range of methods. These include data mining for pattern discovery, clustering and segmentation, predictive models such as regression and classification, recommendation algorithms, deep learning for media and sequences, and time series approaches for forecasting and anomaly detection.

Visualization and business intelligence tools then present the results in a way that decision makers can use. Dashboards, interactive reports, and alerting systems connect analytical outputs to day to day work in marketing, operations, finance, and other functions.

Operationalization and continuous improvement

Analytical models and rules provide value only when they are integrated into operational systems. Common patterns include real time scoring services consumed by applications, decision engines that combine policy and model outputs, and scheduled processes that refresh forecasts or risk scores.

Performance monitoring closes the loop. Teams track accuracy, latency, adoption, and business impact, then retrain or adjust models as behavior, markets, and regulations change.
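One simple way to picture this feedback loop is a rolling accuracy check that raises a retraining flag. The class name, window size, and threshold below are illustrative assumptions, not a standard; real monitoring usually also tracks latency, data drift, and business metrics.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, window=5, threshold=0.5):
        self.outcomes = deque(maxlen=window)  # True when a prediction was correct
        self.threshold = threshold

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only judge the model once the window holds enough observations.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=5, threshold=0.5)
# (predicted, actual) pairs: the model starts well, then degrades.
pairs = [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1), (1, 0), (0, 1)]
flags = []
for predicted, actual in pairs:
    monitor.record(predicted, actual)
    flags.append(monitor.needs_retraining())
```

In this toy run the flag turns on only after the window fills and recent accuracy falls below the threshold, which is the point at which a team would investigate or retrain.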

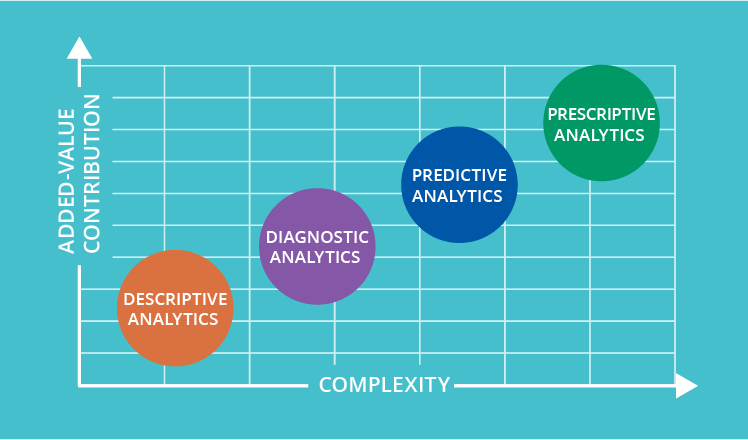

Core types of big data analytics

Descriptive analytics

Descriptive work answers the question of what happened. It summarizes historical performance using metrics, trends, and distributions. Examples include retention dashboards, channel performance reports, and utilization views in logistics or manufacturing.

By consolidating and visualizing detailed records at scale, descriptive analytics creates a common factual base for conversations about performance across departments.
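As a minimal illustration of descriptive work, the sketch below summarizes order values by marketing channel. The data and channel names are invented; at real scale the same grouping would run in a warehouse or distributed engine rather than in a Python loop.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical orders: (channel, order_value)
ORDERS = [
    ("email", 40.0), ("email", 60.0),
    ("search", 20.0), ("search", 30.0), ("search", 100.0),
    ("social", 15.0),
]

def summarize(orders):
    """Group order values by channel and compute basic descriptive metrics."""
    by_channel = defaultdict(list)
    for channel, value in orders:
        by_channel[channel].append(value)
    return {
        channel: {
            "orders": len(values),
            "revenue": sum(values),
            "mean": mean(values),
            "median": median(values),
        }
        for channel, values in by_channel.items()
    }

summary = summarize(ORDERS)
```

Note how the search channel has the same mean as email but a much lower median, a reminder that a single large order can distort averages in a report.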

Diagnostic analytics

Diagnostic analysis focuses on why an outcome occurred. It explores relationships and potential causes by segmenting customers, products, or regions and by correlating behavior with results.

Techniques such as cohort analysis, multivariate analysis, and controlled comparisons help identify drivers of churn, failure, or success. The ability to drill into very large datasets allows teams to identify interactions and patterns that would otherwise remain hidden.
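Cohort analysis in particular is easy to sketch. The example below assumes a hypothetical activity log with integer month indexes and computes, for each signup cohort, the share of users still active a given number of months after signup.

```python
from collections import defaultdict

# Hypothetical activity log: (user_id, signup_month, active_month)
ACTIVITY = [
    ("u1", 0, 0), ("u1", 0, 1), ("u1", 0, 2),
    ("u2", 0, 0), ("u2", 0, 1),
    ("u3", 1, 1), ("u3", 1, 2),
    ("u4", 1, 1),
]

def retention_by_cohort(activity):
    """Return {(cohort, months_since_signup): share of the cohort still active}."""
    cohort_users = defaultdict(set)
    active_users = defaultdict(set)
    for user, signup, month in activity:
        cohort_users[signup].add(user)
        active_users[(signup, month - signup)].add(user)
    return {
        (cohort, offset): len(users) / len(cohort_users[cohort])
        for (cohort, offset), users in sorted(active_users.items())
    }

retention = retention_by_cohort(ACTIVITY)
```

Comparing cohorts at the same offset, rather than by calendar month, is what lets an analyst ask whether a later cohort is churning faster than an earlier one.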

Predictive analytics

Predictive analytics estimates what is likely to happen in the future. It uses historical data and statistical or machine learning models to forecast outcomes such as demand, probability of purchase, likelihood of churn, default risk, or expected time to failure for equipment.

The scale and variety of data available to these models can improve their accuracy, provided the data is relevant and of good quality. Signals from behavior, context, and history can be combined into rich feature sets that increase predictive power.
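The simplest form of predictive modeling is a straight line fitted to history. The sketch below uses ordinary least squares on made-up weekly demand figures to project one week ahead; it is a toy stand-in for the regression and machine learning models mentioned above, not a recommended forecasting method.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical weekly demand history.
weeks = [1, 2, 3, 4, 5]
demand = [102, 108, 113, 119, 126]

slope, intercept = fit_line(weeks, demand)
forecast_week_6 = slope * 6 + intercept
```

A real model would add more features, hold out data for validation, and report uncertainty around the forecast rather than a single number.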

Prescriptive analytics

Prescriptive work concentrates on what action should be taken. It builds on forecasts and simulations to recommend choices such as price levels, promotion strategies, staffing plans, or routing decisions.

Optimization algorithms evaluate many scenarios under constraints and suggest actions that maximize or balance objectives like profit, service level, or risk exposure. The prescriptive layer is where analytics moves closest to automated decision making.
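A toy version of this idea is to score a handful of candidate prices and pick the most profitable one that still satisfies a constraint. The demand curve, cost, and candidate prices below are invented assumptions; real prescriptive systems use proper optimization solvers and demand models estimated from data.

```python
def expected_units(price):
    """Hypothetical linear demand curve: fewer units sell as price rises."""
    return max(0.0, 1000 - 40 * price)

def best_price(candidates, unit_cost, min_units):
    """Pick the candidate price with the highest profit among feasible options."""
    feasible = [p for p in candidates if expected_units(p) >= min_units]
    if not feasible:
        return None  # no price satisfies the volume constraint
    return max(feasible, key=lambda p: (p - unit_cost) * expected_units(p))

candidates = [10, 12, 14, 16, 18, 20]
unconstrained = best_price(candidates, unit_cost=6, min_units=0)
constrained = best_price(candidates, unit_cost=6, min_units=400)
```

With no volume requirement the search settles on a higher price, while a minimum of 400 units pushes the recommendation down, which shows how constraints change the recommended action.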

Other specialized approaches

Organizations also apply spatial analysis for location based questions, natural language processing to text, and real time analytics on continuous streams. These specialized branches extend analytical coverage beyond traditional tabular data.

Benefits of Big Data Analytics for Modern Organizations

When strategically implemented, big data analytics delivers benefits that go far beyond reporting. The impact spans revenue growth, cost optimization, risk reduction, and innovation across the enterprise.

Smarter, Faster Decisions

Big data analytics gives decision makers near real-time visibility into performance, customer behavior, and operational risk. Instead of relying on intuition or outdated reports, leaders use dashboards and predictive models built on fresh data that reflects current market conditions. That agility is critical in volatile markets where competitive windows close quickly. Organizations that operationalize big data analytics can decide and act sooner than competitors, which can translate into gains in market share and profitability.

Revenue Uplift and Personalization

Big data analytics underpins personalized recommendations, dynamic pricing, and micro-segmented campaigns that drive revenue growth. Retailers and e-commerce platforms tailor product recommendations to individual browsing and purchase history. Streaming platforms suggest content that matches viewer preferences and behavior patterns. Financial services firms personalize product offers to individual risk profiles and life stages. These personalized experiences can increase conversion rates, reduce abandonment, and lift customer lifetime value.

Operational Efficiency and Cost Savings

By analyzing end to end process data, organizations use big data analytics to identify bottlenecks and waste in supply chains that inflate costs. They optimize inventory, staffing, and asset utilization by using demand forecasts and resource planning models built on big data analytics. They reduce unplanned downtime via predictive maintenance that alerts maintenance teams to potential failures before critical assets break down. These improvements compound to generate significant cost savings and productivity gains that flow directly to profitability.

Risk Management and Fraud Detection

Big data analytics is central to modern risk and security functions across financial services, insurance, and online retail. Financial institutions scan transactions at scale using big data analytics to detect suspicious patterns indicative of fraud. Insurers use big data analytics to identify applicants with elevated risk profiles that might not be apparent from traditional underwriting. Online businesses continuously scan behavior and network activity for anomalies using machine learning powered big data analytics. These systems flag suspicious events in milliseconds, enabling rapid response and loss prevention that protects revenue and brand reputation.

Innovation and New Business Models

Perhaps the most strategic benefit is the ability to experiment and innovate at scale. With robust big data analytics capabilities, organizations can test new products, features, or pricing strategies using real time data and A/B testing. They can create data driven services such as usage based insurance or predictive maintenance offerings that generate new revenue streams. They can monetize aggregated and anonymized data as a product sold to other businesses.
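For A/B testing, a common first check is whether the difference in conversion rates between two variants is larger than chance would explain. The sketch below implements a standard two-proportion z-test with the normal approximation; the visitor and conversion counts are made up for illustration.

```python
from math import erfc, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return z, p_value

# Hypothetical experiment: variant A converts 200 of 2000, variant B 260 of 2000.
z, p_value = two_proportion_z_test(200, 2000, 260, 2000)
```

A small p-value suggests the lift is unlikely to be noise, but sample size planning and a fixed stopping rule matter just as much as the test itself.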

Key Tools and Technologies for Big Data Analytics

The technology landscape for big data analytics is broad, but it can be grouped into several functional layers that work together to enable end to end analytics workflows.

Data Storage and Management Infrastructure

Hadoop and HDFS provide distributed storage for large, mixed-format datasets; they helped launch the big data era by making it economical to store and process petabytes of data. Cloud object storage offers an elastically scalable foundation for data lakes and lakehouses. NoSQL databases, including document, key-value, and wide-column stores, are optimized for flexible schemas and large scale. Data warehouses and lakehouses, often built on columnar, massively parallel processing (MPP) engines, are designed for analytical workloads rather than transactional operations.

These platforms provide the backbone that enables big data analytics without being constrained by traditional database limits on volume, velocity, or variety.

Data Processing and Computation Engines

Apache Spark is a widely used in-memory, distributed processing engine for batch and streaming big data analytics, offering APIs in Python, Scala, Java, SQL, and R. Apache Flink and Kafka Streams provide low-latency stream processing for real-time use cases where sub-second latency is critical. MapReduce and YARN remain foundational components in some Hadoop-based stacks, though Spark has largely replaced MapReduce for most modern workloads.

These frameworks enable parallel execution of queries, transformations, and machine learning workloads across clusters of commodity hardware, making big data analytics economically feasible at massive scale.

Analytics, Machine Learning, and AI Platforms

Python and R are the core programming languages for data science and big data analytics, offering rich ecosystems of libraries and tools for statistical analysis and machine learning. Machine learning frameworks including TensorFlow, PyTorch, and scikit-learn enable building models on top of big data platforms for predictions at scale. SQL engines for big data increasingly democratize analytics by making distributed data accessible through familiar SQL interfaces rather than requiring custom code.

As AI advances, these tools increasingly integrate with data platforms, pushing AI powered big data analytics deeper into enterprise workflows and enabling non technical users to leverage advanced analytics.

Visualization and Business Intelligence Tools

Visualization platforms like Tableau, Power BI, and Looker enable interactive dashboards and storytelling that make big data analytics results accessible to business stakeholders. Embedded analytics components built directly into business applications make big data analytics results available in context where users already work. These tools are where most stakeholders actually experience big data analytics through charts, tables, and narratives that turn complex outputs into clear decisions.

Skills Needed for Big Data Analytics

Because big data analytics is both technical and business driven, the skill set required is inherently cross functional. Critical capabilities span multiple domains and require integration across specialized expertise.

Technical Skills for Big Data Implementation

Programming proficiency in Python, R, and often Scala or Java is essential for large scale processing and model development. SQL and distributed query engines are required for working with data warehouses and lakehouses at scale. Data engineering skills in building robust data pipelines, ETL and ELT processes, and stream processing infrastructure underpin successful big data analytics implementations. Machine learning and statistics expertise enables designing, training, evaluating, and deploying models that generate accurate predictions. Cloud and DevOps knowledge helps teams work with managed big data analytics services and implement automated deployment pipelines.

Data and Domain Knowledge Requirements

Data modeling and architecture skills help teams design schemas and storage layouts suited to big data analytics workloads and query patterns. Data governance and security expertise ensures compliance with privacy regulations, manages access control, and protects sensitive data. Domain understanding in marketing, operations, finance, healthcare, or other business verticals enables teams to interpret results of big data analytics in proper context and identify high impact use cases.

Business and Communication Skills

Storytelling with data translates big data analytics outputs into narratives that drive action and change behavior. Experiment design skills enable teams to design A/B tests and pilots that validate insights with statistical rigor. Stakeholder management brings alignment between data initiatives and strategic priorities while building organizational support for big data analytics investments.

Effective big data analytics teams combine all three dimensions (technical, data, and business) rather than relying on isolated data scientists who work disconnected from business outcomes.

Top Data Sources and Databases for Big Data Analytics

Successful big data analytics initiatives start with understanding what data is available and how it should be stored and organized across systems.

Common Data Sources Across Industries

Customer interaction data including web logs, app events, marketing campaigns, and support tickets reveals how customers engage with products and services. Operational data from sensor readings, manufacturing logs, supply chain events, and fleet telemetry provides insight into production efficiency and asset performance. Financial and transactional data encompassing payments, invoices, account activity, and billing events drives revenue and profitability analysis. External and open data including weather, demographics, macroeconomic indicators, benchmarks, and social media provides contextual signals that enhance predictive power.

Combining these sources allows big data analytics to generate richer, more accurate insights than any single system alone and reveals cross domain patterns invisible in isolated datasets.

Databases Best Suited for Big Data Workloads

Distributed NoSQL databases excel at storing large volumes of semi structured or unstructured data while supporting flexible schemas and horizontal scale. They enable organizations to store diverse data formats without rigid predefined schemas. Columnar data warehouses are optimized for analytical queries and aggregations on structured data, a core part of big data analytics workflows. They achieve superior compression and query performance for fact table scans. Time series databases handle high velocity sensor and event data, essential for monitoring and real time analytics applications where timestamps and sequential events drive insights.

The choice of database depends on the nature of the data and the requirements of the big data analytics workloads including latency needs, query patterns, and consistency requirements.

Big Data Analytics Versus AI: How They Relate

A recurring question is whether big data analytics is the same as AI. They are distinct but deeply intertwined in modern systems.

Big data analytics represents a broader discipline focused on extracting insights from large, complex datasets using a spectrum of methods from basic aggregation to advanced modeling.

Artificial intelligence focuses on building systems that can perform tasks that typically require human intelligence, such as perception, reasoning, and decision making.

Machine learning and deep learning, key branches of AI, are integral techniques inside modern big data analytics workflows. Conversely, most AI systems require large amounts of data and therefore rely on big data analytics platforms for training and evaluation. In practice, organizations pursue AI-driven big data analytics, where predictive and prescriptive models are trained on big data and then deployed into operations to automate or augment decisions at scale.

Challenges and Pitfalls in Big Data Analytics

While the promise of big data analytics is compelling, many initiatives stumble due to recurring challenges that require proactive management and strategic attention.

Time to Insight and Technical Complexity

Integrating disparate systems, building data pipelines, and maintaining clusters or cloud services is technically complex and resource intensive. Without strong architecture and automation, big data analytics can become slow and brittle, eroding its value proposition. Organizations often underestimate the engineering effort required to move from proof of concept to production at scale. The fastest moving competitors invest heavily in platform engineering and automation to accelerate time to insight from months to weeks.

Data Quality and Governance Challenges

As data volumes grow, quality and governance issues multiply. Inconsistent definitions, missing values, and ungoverned access undermine big data analytics outcomes and expose organizations to compliance risk. As data moves through multiple systems and transformations, errors compound and propagate downstream. Without disciplined governance, big data initiatives become trust liabilities rather than strategic assets.

Security and Privacy Considerations

Sensitive personal and financial information is often central to big data analytics initiatives. Misconfigurations, weak access controls, or lack of anonymization create substantial legal and reputational risk if breaches occur. Privacy regulations like GDPR and CCPA impose strict requirements on how personal data can be collected, stored, and analyzed. Privacy preserving techniques and strong security controls are non negotiable foundations for sustainable big data analytics programs.
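One widely used building block is pseudonymization, where direct identifiers are replaced with keyed hashes before data reaches analysts. The sketch below uses HMAC-SHA256 from the Python standard library; the key, field names, and helper functions are placeholders for illustration. Pseudonymized data is generally still treated as personal data under GDPR, so this reduces exposure but is not full anonymization.

```python
import hashlib
import hmac

# Placeholder key for illustration only; a real key belongs in a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed, deterministic token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record, sensitive_fields=("email",)):
    """Return a copy of the record with sensitive fields tokenized."""
    return {
        field: pseudonymize(value) if field in sensitive_fields else value
        for field, value in record.items()
    }

# Hypothetical customer record.
record = {"email": "jane@example.com", "country": "DE", "plan": "pro"}
masked = mask_record(record)
```

Because the same input and key always yield the same token, masked datasets can still be joined on the tokenized column without exposing the original value.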

Skills Gaps and Organizational Silos

Even with mature tools, big data analytics requires specialized skills that are in high demand and scarce in the job market. At the same time, data teams often sit apart from business units, creating gaps between insight and execution. Insights generated by data science teams languish in reports if they are not actively translated into business action. Successful organizations deliberately invest in talent, training, and cross functional collaboration to bridge technical and business perspectives.

Technology Sprawl and Architectural Fragmentation

The big data analytics ecosystem evolves quickly with new tools, platforms, and frameworks emerging constantly. It is easy to accumulate overlapping tools and platforms without a coherent architecture. This increases cost and complexity while reducing reliability and maintainability. A strategic, composable approach to tooling helps avoid this trap by establishing clear interfaces between components and avoiding vendor lock in through open standards and APIs.

Emerging Trends in Big Data Analytics

Looking ahead, several trends are shaping the next generation of big data analytics and its impact on business.

AI Native and Agentic Analytics Capabilities

New platforms embed generative AI and agentic capabilities directly into big data analytics workflows. These systems can generate SQL queries, code, and dashboards from natural language prompts. They orchestrate multi-step analyses across data sources and tools, combining results that would be slow for a single analyst to assemble by hand. They provide explanations and narratives for findings, which can build trust and adoption by making black-box models more interpretable. This convergence of AI and big data analytics is lowering the barrier to entry for non-technical users while increasing the sophistication of what expert users can accomplish.

Real Time and Edge Analytics at the Data Source

As IoT and streaming data sources proliferate, organizations are shifting from batch centric to real time big data analytics workflows. Edge computing brings processing closer to data sources, reducing latency and enabling new use cases. Real time anomaly detection on machines and networks detects problems as they emerge. Instant personalization in apps and digital experiences happens in milliseconds based on current user behavior. Autonomous systems respond to sensor data without human intervention, making decisions at machine speed rather than human speed. This shift from periodic batch to continuous real time analytics fundamentally changes how organizations respond to opportunities and threats.
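Real-time anomaly detection can be sketched with a rolling z-score over a stream of sensor readings. The window size, threshold, and temperature values below are illustrative assumptions; production systems on the edge or in a stream processor typically use more robust statistics and handle seasonality.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling baseline."""
    history = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(history) == window:
            baseline, spread = mean(history), pstdev(history)
            if spread > 0 and abs(value - baseline) / spread > threshold:
                yield index, value
                continue  # keep the outlier out of the baseline
        history.append(value)

# Hypothetical temperature stream with one spike.
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 20.3, 35.0, 20.0, 19.9]
anomalies = list(detect_anomalies(readings))
```

The generator processes one reading at a time and keeps only a small fixed window in memory, which is the property that makes this style of check suitable for constrained edge devices.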

Data Products and Composable Analytics Architectures

Leading enterprises are structuring big data analytics capabilities as reusable data products: stable, documented assets that provide high-quality data and insights to multiple teams across the organization. Composable architectures built on APIs and modular services make big data analytics more scalable and maintainable. Rather than each team building isolated analytics pipelines, organizations create shared data infrastructure that serves multiple stakeholders. This approach reduces duplication, accelerates time to insight, and improves data quality through centralized curation and governance.

Responsible and Explainable Analytics Systems

As big data analytics influences credit decisions, hiring, healthcare, and policing, scrutiny over bias, fairness, and transparency is intensifying. Organizations are increasingly investing in model explainability and auditability to understand how predictions are made. They conduct bias detection and mitigation in big data analytics pipelines to identify and correct systematic errors. They implement stronger governance for AI driven analytics use cases to ensure decisions align with ethical principles. These practices are shifting from optional enhancements to core requirements for sustainable big data analytics programs in regulated industries.

Putting Big Data Analytics Into Practice: Strategic Recommendations

Start from business outcomes rather than available tools and technologies. Define clear use cases such as churn reduction, fraud detection, or predictive maintenance, then design big data analytics capabilities that directly support those outcomes. This ensures investments generate measurable business value rather than producing impressive technical systems that go unused.

Invest in data foundations as the single most important enabler of effective big data analytics. High quality, well governed, and discoverable data is more valuable than sophisticated algorithms applied to poor data. Shortcuts on data quality lead to long term friction and erode trust in analytics outputs. Build strong data stewardship practices, implement rigorous quality checks, and maintain clear lineage and documentation of data assets.

Adopt a lakehouse or unified analytics architecture to minimize fragmentation across warehouses, lakes, and machine learning platforms. Fragmented architectures create silos, complicate governance, and force expensive replication of data across systems. A unified architecture with clear layers simplifies governance and accelerates big data analytics delivery by enabling single sources of truth.

Democratize analytics responsibly by combining self service tools with training, guardrails, and monitoring so that more people can leverage big data analytics without compromising quality or security. Not every insight requires a data scientist, but democratization without governance creates sprawl and risk. Establish clear policies on what data can be accessed, how it can be used, and how models should be validated before deployment.

Treat analytics models as products that require ongoing monitoring, maintenance, and iteration. Monitor model performance in production to detect degradation before it impacts decisions. Collect feedback from business stakeholders on whether predictions prove accurate and useful. Iterate based on changing behavior and business priorities rather than treating models as static artifacts built once and forgotten. This product mindset turns big data analytics from an academic exercise into a business capability.

About HBLAB

HBLAB is a software development partner that helps organizations turn data into business outcomes by building the engineering foundations behind modern big data analytics programs, including scalable data platforms, reliable pipelines, and production ready AI features.

With 10 plus years of experience and a team of 630 plus professionals, HBLAB can staff end to end delivery across data engineering, backend development, cloud enablement, and analytics integration, so teams can move from prototypes to dependable systems without stalling on hiring.

Quality and repeatability matter in data work, especially when pipelines and models must run continuously. HBLAB holds CMMI Level 3 certification, supporting mature processes that help reduce rework and improve delivery consistency for complex analytics initiatives.

Since 2017, HBLAB has also focused on AI powered solutions, which is useful when big data analytics requirements expand into forecasting, anomaly detection, personalization, and automation, and when teams need practical guidance on how to operationalize models.

HBLAB offers flexible engagement models including offshore, onsite, and dedicated teams, with services often positioned at around 30 percent lower cost.

FAQ

1. What is big data analytics?

Big data analytics is the systematic processing and analysis of very large and complex datasets to extract insights, uncover patterns, and support data informed decisions.

2. What are the 4 types of big data analytics?

The four common types are descriptive, diagnostic, predictive, and prescriptive analytics, which map to what happened, why it happened, what will likely happen, and what to do next.

3. What is the salary for big data analytics roles?

Pay varies by role and country, so it is usually clearer to anchor on job titles. In the US, Built In lists an average Data Engineer salary around $125,808 and an average Big Data Engineer salary around $151,131.

4. Is big data analytics easy?

It is not “easy” at first because it mixes data engineering, statistics, and business thinking. It becomes manageable when learning is structured around real projects like building a pipeline, validating data quality, and answering one concrete business question at a time.

5. Can you make $500,000 as a data engineer?

It can happen, but it is not typical and is usually tied to senior roles where total compensation includes equity and bonuses. Built In’s pay ranges show many roles top out far below that, and ZipRecruiter notes there are not many “Data Engineer 500K” jobs.

6. Will AI replace big data?

AI will not replace big data because AI systems depend on large volumes of data and strong data infrastructure to train and run reliably. The bigger shift is that AI will automate parts of analytics work, while organizations still need engineers and governance to make data usable and trustworthy.

7. What is the 30% rule in AI?

There is no single universal definition, but it is commonly used as a practical target such as aiming for about 30% automation, time savings, or measurable throughput improvement in a workflow as an early, safer goal.

Some sources also use “30% rule” to describe training data or quality thresholds, which adds to the confusion.

8. Is a data analyst a dead end job?

It is not a dead end if it is treated as a foundation role that leads to analytics engineering, product analytics, data science, BI ownership, or domain leadership. Many career paths in data expand by adding SQL depth, stakeholder ownership, and experimentation skills, rather than only doing reporting.

9. Which 3 jobs will survive AI?

No job is guaranteed, but roles tend to be more resilient when they combine human judgment, messy real world context, and accountability. Based on the World Economic Forum’s emphasis on large scale job transformation and upskilling needs, strong candidates include healthcare roles, skilled trades, and AI or data engineering roles that build and run the systems.

10. What job pays $400,000 a year without a degree?

This is rare, but some high performing store manager roles can reach very high total compensation even without a college degree, such as the Walmart example reported via the Wall Street Journal and covered by Yahoo Finance.

11. What jobs will be gone by 2030?

It is more accurate to say tasks will be automated rather than entire job categories disappearing overnight. The World Economic Forum expects major job disruption by 2030 with millions of roles displaced and created, which implies routine, repetitive, and highly standardized work is most exposed unless it evolves.

12. What is the 10 20 70 rule for AI?

The 10 20 70 rule is a change management heuristic that says AI success depends roughly 10% on algorithms, 20% on data and technology, and 70% on people and process change.
