Today’s organizations are deploying AI agent development to create systems that think, adapt, and act with genuine autonomy. These intelligent agents are fundamentally reshaping how businesses operate, transforming everything from customer service to supply chain management to knowledge work itself.

Introduction: From Automation to True Intelligence

Unlike traditional software that executes pre-programmed instructions, AI agents represent a fundamental paradigm shift. They perceive their environment, analyze complex information, and make decisions independently. This shift carries profound implications. A chatbot answers questions. An AI agent researches, plans, and executes a multi-step solution without waiting for human approval at each stage.

This comprehensive guide walks you through AI agent development from concept to deployment, whether you are an individual developer building your first agent or a chief executive officer evaluating AI agent development solutions for enterprise transformation.

What is an AI Agent?

An AI agent is a software system that autonomously perceives its environment, analyzes information, and executes actions to achieve predefined goals. The critical distinction lies in autonomy. Unlike traditional applications that respond passively to user commands, an AI agent proactively determines what actions to take based on its objectives and the data it encounters.

Consider a practical example. A conventional customer service system waits for a customer to submit a query, then searches a knowledge base and returns a canned response. An AI agent, by contrast, can initiate contact, analyze customer sentiment, research their history across multiple systems, identify the optimal resolution path, and execute it while simultaneously learning from the outcome to improve future interactions.

>> READ MORE: Agentic AI In-Depth Report 2025: The Most Comprehensive Business Blueprint

The Three Core Capabilities of Modern AI Agents

Context Analysis and Perception

An AI agent continuously observes and interprets its environment. This goes far beyond simple text matching.

Modern agents process multiple data types simultaneously: customer emails and chat messages, voice recordings with emotional undertones, transaction data revealing patterns, and real-time sensor information. They extract meaning from this raw information, understanding intent rather than merely matching keywords.

A healthcare agent, for example, does not simply identify the word “pain” in a patient message. Instead, it comprehensively understands severity, location, duration, and relevant medical history to formulate an appropriate clinical response.

Autonomous Decision Making

Once an agent understands its environment, it must determine what to do. This involves evaluating multiple options, weighing trade-offs between competing objectives, and selecting the action most likely to achieve the goal.

An AI agent development system for logistics optimization does not simply follow a predetermined route. Instead, it continuously evaluates traffic patterns, weather conditions, package priorities, and vehicle capacity to dynamically adjust routes in real time, balancing speed with fuel efficiency and delivery window requirements.

Proactive Task Execution and Integration

The final capability distinguishes agents from passive tools. Agents do not simply recommend actions. They execute them. They trigger workflows, update databases, send notifications, call external APIs, and orchestrate complex sequences of operations across disconnected systems. A recruitment agent can post job listings, screen résumés, schedule interviews, and update an applicant tracking system without human intervention, freeing recruiters to focus on relationship-building and final hiring decisions.

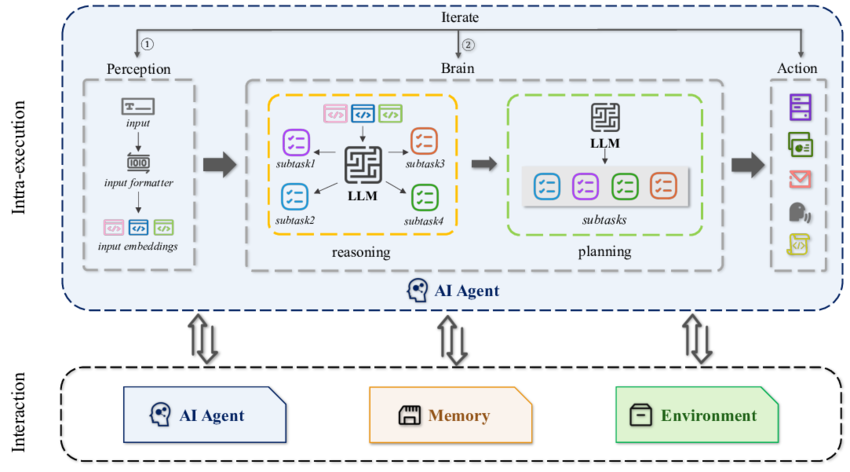

The Architecture of Intelligent Agents: Core Components Explained

To build effective AI agents, you must understand their underlying architecture. Every functional agent shares common components, though how these components interact varies significantly based on the use case.

The Perception Module: Sensing the Environment

An agent’s perception module serves as its connection to the world. This can take multiple forms depending on the agent’s purpose:

- A customer service agent perceives through chat messages, emails, and transaction data

- A robotic agent perceives through cameras, proximity sensors, and motion detectors

- A supply chain agent perceives through inventory systems, demand forecasts, and supplier feeds

The sophistication of perception directly impacts agent effectiveness. A basic system simply receives structured data. Advanced perception involves natural language processing to extract meaning from unstructured text, computer vision to interpret images and video, sentiment analysis to detect emotional content in communications, and anomaly detection to identify unusual patterns that warrant attention.

The Reasoning Engine: The LLM Brain

At the core of modern AI agent development sits the reasoning engine, typically powered by a large language model (LLM) such as GPT-4, Claude, or Gemini.

The LLM does not simply generate text. It serves as the agent’s cognitive center, interpreting perceived information, reasoning about appropriate responses, and planning action sequences.

The LLM evaluates context, considers constraints, weighs trade-offs, and generates decisions. In complex scenarios, the LLM can employ sophisticated reasoning patterns like Chain of Thought (breaking complex problems into steps) or Tree of Thought (exploring multiple solution branches simultaneously).

For AI agent development, model selection is critical. Different LLMs have different strengths:

- GPT models excel at natural language understanding and generation

- Claude prioritizes safety and careful reasoning

- Gemini offers tight integration with Google’s ecosystem

- DeepSeek provides cost-effective alternatives for price-sensitive applications

The optimal choice depends on your specific requirements, budget constraints, and integration needs.

The Knowledge Base: Access to Domain Expertise

An LLM’s training data has a knowledge cutoff. Your organization’s specific information, proprietary methods, customer data, internal policies, and recent decisions are not in that training data. The knowledge base bridges this gap.

Modern AI agent development leverages retrieval-augmented generation (RAG) to keep agents current. Instead of retraining models continuously, RAG systems maintain searchable databases of organizational knowledge. When an agent needs specific information, it queries these databases to retrieve relevant context, which it then incorporates into its reasoning. This approach allows agents to reference real-time data while maintaining the generalization capabilities of large language models.

Knowledge bases can include:

- Documents and internal wikis

- Databases and structured records

- Search indices and content repositories

- APIs returning real-time information

- External data sources and feeds

The Tool Integration Layer: Connection to Action

Reasoning without action is philosophical contemplation, not agency. An agent must connect to external systems to execute decisions. The tool integration layer provides these connections.

Tools take multiple forms:

- Function calls that invoke code performing specific operations

- API integrations enabling interaction with external services such as payment processors and database queries

- Webhook connections that trigger automated workflows in external systems

- Direct integrations with enterprise platforms like Salesforce, HubSpot, or Microsoft Teams

For AI agent development, tool selection significantly impacts agent capability. An agent with access to only basic information retrieval tools is fundamentally limited compared to one that can also execute transactions, trigger workflows, and coordinate with other systems.

The Memory System: Learning from Experience

Effective agents do not treat every interaction as isolated. They maintain memory, both short-term context for current conversations and long-term understanding of patterns. Memory systems in AI agent development take several forms.

Short-term memory maintains context during active interactions. If a customer describes a problem, provides additional details, and then asks for a solution, the agent’s short-term memory chains these interactions together coherently. Long-term memory captures learning from previous interactions.

An agent handling customer support learns which resolutions work best for specific problem types, which customers typically require additional hand-holding, and which issues frequently escalate.

Advanced memory systems use vector databases to store semantic information. Rather than storing raw conversation transcripts, they store meaningful summaries of interactions, indexed in ways that allow rapid retrieval of similar past situations.

This enables agents to apply lessons from previous interactions to new scenarios without explicitly programming every rule.

Types of AI Agents

Not all agents are built the same. AI agent development encompasses multiple agent types, each suited to different scenarios.

Reactive Agents

Reactive agents operate on straightforward decision rules. When condition X is detected, action Y occurs. No complex reasoning, no learning, no memory beyond the current moment.

A reactive agent monitoring security might immediately block flagged transactions, trigger access denials for unauthorized login attempts, or send alerts when systems exceed performance thresholds.

These agents excel at scenarios where the appropriate response to any given situation is clear and consistent.

In AI agent development, reactive agents form the foundation of safety systems. Their simplicity makes them predictable and reliable. However, their limitations are equally clear. They cannot handle novel situations, adapt to changing conditions, or consider context beyond predefined rules.

Deliberative Agents

Deliberative agents engage in reasoning but within defined parameters. They evaluate situations, consider options, and select actions, but their decision-making follows explicit business rules and constraints.

Consider a loan approval agent. It does not simply approve all applications or reject all applications. Instead, it evaluates applications against explicit criteria including credit score thresholds, debt-to-income ratios, collateral valuation, and regulatory requirements. Within these constraints, it makes autonomous decisions, learning to improve over time but always operating within guardrails.

This type of AI agent development dominates enterprise applications because it balances autonomy with control. Organizations need agents that can make decisions without human intervention but cannot take actions that violate regulatory requirements or business policies.

Learning Agents

Learning agents represent the frontier of AI agent development. They do not simply execute preprogrammed logic or apply fixed decision rules. Instead, they observe outcomes of their decisions, extract insights from these outcomes, and continuously improve their future behavior.

A learning agent might start with general guidelines about how to respond to customer complaints. Over time, it observes which responses lead to resolution and satisfaction, which approaches tend toward escalation, and which factors predict successful outcomes.

It gradually shifts its behavior toward more effective approaches, learning that certain customer segments respond better to empathetic language, that some issues benefit from immediate solutions while others improve with structured problem-solving, and that escalation thresholds vary by situation type.

Implementing learning agents requires sophisticated infrastructure. You need robust feedback mechanisms to evaluate agent decisions, continuous retraining pipelines to incorporate new learning, and monitoring systems to ensure the agent is not optimizing toward unintended objectives.

Multi-Agent System

Often, complex problems require multiple specialized agents. A multi-agent system coordinates multiple agents, each with distinct responsibilities, toward a common objective.

In a supply chain context, one agent might monitor demand forecasts, another manages inventory levels, another handles supplier communications, and another optimizes transportation. These agents share information and coordinate actions.

The demand agent triggers the inventory agent to increase stock levels, which prompts the supplier agent to place orders, which the transportation agent then schedules delivery for.

Multi-agent AI agent development introduces complexity but enables handling of problems too large or multifaceted for single agents.

The challenge lies in orchestration, ensuring agents communicate effectively, coordinate action without conflicting, and collectively achieve objectives that no single agent could accomplish alone.

The AI Agent Development Process: From Concept to Production

Building production-ready AI agents requires a structured approach. Whether you are developing your first agent or scaling an enterprise fleet, this process provides the roadmap.

Phase 1: Define Your Objectives and Scope

Every AI agent development project begins with clarity about purpose.

What specific problem will the agent solve?

What are the success metrics?

Who will benefit, and how will they measure value?

This phase requires business and technical stakeholders to align. Executives care about revenue impact, cost reduction, and competitive advantage. Technical teams care about feasibility, integration complexity, and resource requirements.

The objective definition must bridge these perspectives.

Ask yourself:

- What specific decisions does the agent need to make?

- What actions will it take?

- What information does it need to access?

- What constraints must it respect?

- What outcomes constitute success?

Phase 2: Design the Agent Architecture

With clear objectives, you design the agent’s architecture. This involves several components.

First, define the data landscape.

What information sources will the agent access?

Customer data, transactional records, external APIs, real-time streams?

How will you ensure data quality and maintain security?

Second, identify required tool integrations.

What systems will the agent interact with?

What actions will it execute?

- A sales agent might need access to CRM systems, email platforms, and calendar applications.

- A supply chain agent needs inventory systems, forecasting tools, and vendor platforms.

Third, establish constraints and guardrails.

What decisions can the agent make autonomously, and which require human approval?

What regulatory or compliance requirements must it respect?

How do you prevent the agent from taking unintended actions?

Fourth, select your LLM.

This decision impacts agent capabilities, costs, and integration complexity.

Do you need cutting-edge reasoning for complex scenarios, or will a cost-optimized model suffice?

Do you require specific language support, technical domain expertise, or safety features?

Phase 3: Select Your Development Framework and Tools

AI agent development does not require building from scratch. Established frameworks dramatically accelerate development and reduce error risks.

LangChain provides the foundation for most AI agent development projects. It handles model integration, tool management, memory systems, and workflow orchestration. If you are uncertain where to start, LangChain is almost always the right initial choice.

LangGraph extends LangChain for complex, stateful workflows. If your agent needs to maintain sophisticated state across extended interactions or coordinate multi-step processes, LangGraph provides cleaner abstractions than basic LangChain.

CrewAI excels at multi-agent systems. If your project involves multiple specialized agents coordinating toward common goals, CrewAI’s role-based agent definitions and task orchestration simplify development significantly.

AutoGen (Microsoft) emphasizes multi-agent conversation and reasoning. It is particularly strong for scenarios requiring agents to debate approaches, evaluate options, and collectively solve problems.

Vertex AI Agent Builder and AWS Bedrock AgentCore integrate AI agent development directly into cloud platforms. If you are already committed to Google Cloud or AWS, these platforms streamline deployment and offer enterprise features like audit logging and compliance frameworks.

Phase 4: Implement Core Agent Logic

Implementation involves several concurrent workstreams.

Model Configuration:

- Set up your chosen LLM, configure API keys, and test connectivity.

- Define system prompts that shape agent behavior, specifying the agent’s role, communication style, decision-making approach, and constraints.

Tool Integration:

- Implement connections to required external systems. This involves API authentication, error handling, and validation that tools function correctly.

- Test each tool individually before integrating into the agent.

Knowledge Base Development:

- Prepare your knowledge base.

- Document organizational policies, product information, processes, and domain expertise.

- Structure this knowledge to enable rapid retrieval.

- Test that RAG systems can find relevant information quickly.

Memory System Setup:

- Configure short-term and long-term memory.

- Decide what information to retain, how long to retain it, and how to organize it for rapid retrieval.

Prompt Engineering:

Develop and refine system prompts. This is an iterative process. You test initial prompts, observe agent behavior, identify failures or suboptimal decisions, and refine prompts to address these issues.

Effective prompt engineering is as much art as science. It requires understanding how language models interpret instructions and how subtle wording changes influence behavior.

Phase 5: Test Extensively in Controlled Environments

Never deploy agents directly to production. Extensive testing in simulated environments identifies issues before real-world impact.

Functional Testing:

- Does the agent execute its core capabilities correctly?

- Can it access required information?

- Do tools fire appropriately?

- Does it handle edge cases?

Behavioral Testing:

- How does the agent behave in realistic scenarios?

- Does it make sound decisions?

- Does it escalate appropriately?

- Does it maintain consistency across similar situations?

Stress Testing:

- How does the agent handle high volumes?

- Do response times remain acceptable?

- Do systems scale appropriately?

Safety Testing:

This is critical for AI agent development. Try to get the agent to do things it should not.

- Can you manipulate it into accessing restricted information?

- Can you trigger it to violate policies?

- Can you make it generate harmful content?

- These tests identify vulnerabilities before deployment.

User Testing:

Have representative users interact with the agent. Observe where they struggle, what confuses them, and what works intuitively. Real users often reveal issues that development teams miss.

Phase 6: Deploy with Monitoring and Feedback Systems

Production deployment marks the beginning rather than the end of the AI agent development journey. Agents must be continuously monitored and improved.

Deploy gradually if possible. Launch with a limited user segment, monitor performance closely, and expand only when you are confident the agent is functioning correctly.

This staged rollout identifies issues affecting small populations before they impact everyone.

Establish comprehensive monitoring.

Track agent performance metrics including success rates, error rates, execution times, and user satisfaction.

Monitor system health including API latency, error logs, and resource consumption.

Set up alerts that notify teams of problems in real time.

Implement robust feedback mechanisms. Users often interact with agents and immediately know whether the response was helpful. Capture this feedback systematically. Combine it with objective metrics to identify which agent behaviors work and which require improvement.

Phase 7: Continuous Improvement and Iteration

AI agent development is iterative. As agents operate in production, you learn what works and what does not. Use these insights to improve continuously.

Analyze failure cases.

- When an agent makes a poor decision, why did that happen?

- Was the reasoning flawed?

- Did it lack relevant information?

- Were the tools inadequate?

Retrain when necessary. As your organization’s data, policies, and processes evolve, agents must adapt. This might involve simple prompt engineering adjustments or full model retraining with new data.

Expand agent capabilities systematically. Once core functionality is stable, consider expanding what the agent can do. Add new tool integrations, expand knowledge bases, or adjust decision rules.

DOWNLOAD IN-DEPTH AI DOCUMENTS

Industry Applications: Real World AI Agent Development

Different industries are deploying AI agents to solve industry-specific problems.

Financial Services and Risk Management

Banks use AI agent development for fraud detection, credit decisions, compliance monitoring, and customer service. Fraud agents analyze transaction patterns in real time, flagging suspicious activity and blocking unauthorized transactions. Credit agents evaluate loan applications, assessing risk and making approval decisions within regulatory constraints.

The regulatory environment is complex. Agents must maintain audit trails of decisions, explain their reasoning, and never violate regulatory requirements. This pushes financial services agents toward deliberative designs with explicit constraints rather than pure learning approaches.

Healthcare and Life Sciences

Healthcare organizations deploy agents for patient triage, clinical decision support, administrative efficiency, and research acceleration. Triage agents assess symptoms and urgency, routing patients appropriately. Clinical decision support agents recommend treatments, medication adjustments, and diagnostic procedures based on patient data and medical literature.

Privacy and safety are paramount. Healthcare agents must comply with HIPAA and other privacy regulations, maintain data security, never provide medical advice beyond their scope, and escalate complex cases to physicians. This environment requires agents that respect clear boundaries.

Manufacturing and Logistics

Manufacturing plants use AI agent development for predictive maintenance, production optimization, and quality control. Maintenance agents monitor equipment sensors, predict failures before they occur, and schedule maintenance during production downtime. Production agents optimize scheduling, routing, and resource allocation to meet demand while minimizing costs.

Logistics agents optimize routes, manage inventory, coordinate with suppliers, and track shipments. They balance competing objectives including speed, cost, and sustainability, making dynamic decisions as conditions change.

Retail and E-Commerce

Retail organizations deploy agents for demand forecasting, inventory management, personalized customer experience, and pricing optimization. Agents continuously analyze sales data, adjust inventory levels, recommend products to customers, and optimize prices based on demand and competition.

These agents operate in high-volume environments. Performance is critical. Any delay impacts customer experience. Agents must be fast, reliable, and responsive to rapidly changing conditions.

Government and Public Sector

Government agencies use agents for permit processing, benefit determination, information access, and operational efficiency. Agents dramatically reduce processing times for routine applications while freeing humans to handle complex cases.

These agents must be transparent and explainable. When an agent denies a benefit or rejects a permit application, the citizen must understand why. This pushes toward deliberative designs with explicit decision rules.

Getting Started

Whether you are an individual developer or leading an enterprise initiative, here is how to start.

For Individual Developers

Start small. Choose a simple use case such as customer support for a single product category, analysis of specific data, or automation of a single workflow. This bounded scope makes AI agent development manageable.

Choose your framework early. For most projects, LangChain is the right starting point. Set up your development environment, select a Python version, and install required packages.

Select your LLM. OpenAI’s API is the most common starting point, offering straightforward use and well-documented resources. Get an API key and test basic model integration.

Build a simple prototype. Create a basic agent that takes user input, processes it, and returns output. Do not worry about sophistication at this stage. Prove the concept works.

Add complexity incrementally. Integrate tools one at a time. Add knowledge base access. Improve prompts. Each iteration should add capability while maintaining functionality.

For Enterprise Teams

Start with a clear business case. Which process would benefit most from automation? What is the expected return on investment? Get stakeholder alignment around objectives and success metrics.

Assemble a cross-functional team. You need technical expertise (engineers, data scientists), domain expertise (people who understand the process), and business sponsorship. Lacking any of these perspectives leads to misdirected effort.

Invest in infrastructure. Ensure your organization has LLM access, appropriate cloud platforms, monitoring tools, and security frameworks. Do not defer infrastructure decisions. They affect everything that follows.

Start with a pilot. Choose a bounded use case, build a prototype, validate the approach, and measure results. Successful pilots become proof points that justify larger investments. Failed pilots teach valuable lessons about what does not work, often more valuable than successes.

The Future of AI Agent Development

Multi-Agent Systems and Swarms

Organizations will increasingly deploy fleets of specialized agents coordinating toward common objectives. These swarms will tackle problems too complex for single agents.

Embodied Agents in Physical Spaces

Agents will increasingly control physical robots, drones, and autonomous systems. The boundary between digital and physical agency will blur.

Autonomous Enterprises

The ultimate vision involves organizations where AI agents handle most operational decisions and execution, with humans providing strategic direction and oversight. This future remains aspirational but increasingly plausible.

Fusion with Generative Capabilities

Agents will increasingly incorporate generative capabilities such as creating content, designing solutions, and generating code. This combination of autonomous action with creative generation unlocks possibilities we are only beginning to explore.

About HBLAB

AI agents are increasingly defined by their ability to perceive context, reason, and take actions across connected systems, which makes production readiness, security, and integration quality as important as model performance. HBLAB is a trusted software development partner for organizations that want to move from prototypes to dependable AI Agent Development at scale, whether the goal is to build internal agent capabilities or to deliver customer facing agent services.

With 10+ years of experience and a team of 630+ professionals, HBLAB supports end to end delivery across product engineering and team augmentation, aligning engineering execution with real business workflows. HBLAB holds CMMI Level 3 certification, enabling consistent, high quality processes for complex enterprise projects.

Since 2017, HBLAB has been building AI powered solutions and can support practical initiatives such as LLM integration, agent architecture design, tool and API integration, retrieval augmented knowledge systems, and production monitoring strategies.

Engagement is flexible through offshore, onsite, or dedicated team models, with cost efficient delivery that is often 30% lower cost while maintaining professional standards.

👉 Looking for a partner to design, build, or scale AI Agent Development responsibly?

Contact HBLAB for a free consultation

Conclusion: Your AI Agent Development Journey Begins Now

AI agent development is no longer experimental. It is production reality. Organizations worldwide are deploying agents that analyze information, make decisions, and execute actions with increasing autonomy.

The technology is accessible. Frameworks like LangChain, platforms like Google Vertex AI and AWS Bedrock, and models from OpenAI, Anthropic, and Google eliminate barriers that once restricted AI agent development to well-resourced technology companies.

The business case is clear. Organizations deploying AI agents report significant improvements in efficiency, decision quality, customer experience, and competitive position.

The time to start is now. Whether you are an individual developer building your first agent or a chief executive officer evaluating enterprise transformation, begin with clear objectives, choose appropriate tools, and start small. Learn by doing. Each agent you build teaches you lessons that make subsequent agents better.

The future belongs to organizations that master AI agent development.

Start your journey today!

People also ask

What is AI agent development?

AI Agent Development is the end to end process of defining an agent’s goal, designing its architecture (reasoning, memory, tools, safety), implementing integrations, and preparing it for real use. In practice, AI Agent Development includes evaluation, monitoring, and iteration, not only building a demo.

What are AI agents designed to do?

AI agents are designed to achieve goals by selecting and executing actions, often by calling external tools such as search, databases, or business systems. AI Agent Development focuses on making that goal completion consistent, safe, and measurable.

Is ChatGPT an AI agent?

ChatGPT is generally categorized as an AI assistant because it typically responds to user prompts, while an AI agent is structured to pursue goals and take actions via tools with more autonomy. Many teams use ChatGPT style models inside AI Agent Development by adding tool use, memory, and guardrails.

What are the 7 types of AI agents?

There is no single global standard list, but a commonly used “seven type” breakdown for AI Agent Development discussions is:

-

Simple reflex agents

-

Model based reflex agents

-

Goal based agents

-

Utility based agents

-

Learning agents

-

Multi agent systems

-

Hybrid agents (mixing approaches)

What are the 5 types of agents in AI?

A common “five type” version used in AI Agent Development is: simple reflex, model based reflex, goal based, utility based, learning agents.

What are the 4 types of agents?

A practical “four type” simplification used in AI Agent Development planning is: reactive, goal driven, utility optimizing, and learning agents.

Who are the big 4 AI agents?

“Big 4 AI agents” is not a standardized term in AI Agent Development. It usually refers informally to major ecosystems or platforms enabling agent building (for example, leading model providers or major enterprise platforms), so it is best to define which “big 4” your audience means before comparing.

What are tools in AI agents?

In AI Agent Development, “tools” are callable functions or integrations that let an agent do work beyond text generation, such as retrieving data, writing to systems, sending messages, or triggering workflows. Tool design is central to reliable AI Agent Development because it determines what the agent can safely do.

What are the main components of an AI agent?

A clean AI Agent Development architecture typically includes: perception or inputs, a reasoning engine, memory, knowledge retrieval, tool execution, and safety or policy controls. In enterprise AI Agent Development, observability and access control are also essential.

What skills are needed to build AI agents?

AI Agent Development commonly requires a mix of:

-

Product and workflow understanding (clear goals and success criteria)

-

LLM prompting and evaluation

-

Software engineering (APIs, testing, reliability)

-

Data and retrieval basics (knowledge bases, search, RAG)

-

Security and governance (permissions, auditability)

What is the future of AI agents?

The future of AI Agent Development is broader agent adoption inside business software, where agents execute multi step workflows, coordinate with other agents, and operate under stricter governance. As that happens, AI Agent Development will increasingly look like a production engineering practice, not only model experimentation.

What are the 7 main areas of AI?

A common high level grouping relevant to AI Agent Development is machine learning, natural language processing, computer vision, robotics, planning, knowledge reasoning, and human AI interaction.

What are the 12 steps of AI?

There is no universal “12 steps,” but a practical AI Agent Development lifecycle can be framed as: problem definition, data readiness, model choice, prompting or tuning, retrieval design, tool integration, safety design, evaluation, prototype, production rollout, monitoring, iteration.

What are the 5 rules of AI?

There is no single universal set, but many AI Agent Development teams align on five governance principles: safety, privacy, transparency, accountability, and continuous monitoring.

Can AI agents replace developers?

AI agents can automate portions of development work, but AI Agent Development still needs human engineers for architecture, security responsibility, product decisions, and production ownership. AI Agent Development often increases the need for strong engineering fundamentals because tool execution and integrations raise real operational risk.

What is the salary of an AI agent?

An AI agent does not have a salary. Compensation applies to AI Agent Development roles such as AI engineer, ML engineer, data engineer, and platform engineer, and varies by region and seniority.

What are the 5 applications of AI?

Five widely used application areas that frequently motivate AI Agent Development are customer support automation, marketing and personalization, analytics and decision support, operations and workflow automation, and fraud or risk detection.

READ MORE:

– AI in Supply Chain (2025): Unleashing a New Era of Smart Logistics

– Agentic Reasoning AI Doctor: 5 Extraordinary Innovations Redefining Modern Medicine